Many thanks to Brad Dyck for contributing this interview. You can follow Brad on Twitter @Brad_Dyck

It was my pleasure to speak with Rob Blake, an audio director best known for his work on the Mass Effect trilogy. Plants vs. Zombies: Garden Warfare is available now for PC and will be available for PS3/PS4 on August 19th.

BD: What originally made you move away from the UK to come over to Canada?

RB: Actually, before I came to Canada I was working at a small start-up in Spain (Tragnarion Studios). It was a fascinating place to work because they were really passionate gamers who just wanted to make the kind of game they’d want to play themselves.

After I’d been with them for nearly a year I got offered the lead position on Mass Effect. I’d just finished the project I was working on in Spain, so the timing worked out well. It was a dream job for me at the time – I’d been an Audio Lead before in the UK, but working on something like Mass Effect was very special.

BD: Was it a pretty big jump?

RB: Oh yeah. I was at BioWare for five years, and at the peak we had about 13 sound designers on Mass Effect 3, so by most accounts it was a huge project. It was a lot of crazy sound design, organization, direction, working with people and mixing, plus I was designing the tools that we were all using as well. It was a great opportunity and I was happy with the way the audio turned out, especially on the third one. I think by that point we got to where we wanted to be, aesthetically and technically.

BD: Was it on Mass Effect 2 that you switched to Wwise?

RB: Yes. Mass Effect 1 was made with ISACT, an old audio system made by Creative Labs that was later discontinued. BioWare were already working with FMOD on Dragon Age, so it was a choice between FMOD and Wwise. When I joined, they’d already made the decision to switch to Wwise, so it was my job to figure out how to get Mass Effect working in a totally different audio engine. At that point, I don’t think any games had even been released on Wwise, so the tool was still unproven and none of us knew how to get the best out of it. It was difficult coming in as a new lead at a new studio, with a new team, a new project and a new toolset that nobody knew how to use. It was a pretty crazy time. So that first Wwise project, Mass Effect 2, was just us trying to get Mass Effect working in this new engine, although we ended up creating entirely new sound content along the way too. The third one was more, “OK, what can we do with it now that we’ve got it all working well? We’re all comfortable with the tech, let’s push it further and see where we can take it.” We were pretty happy with how the third one sounded.

They’re actually not using Wwise anymore over at BioWare; they’re using Frostbite. So it’s kind of funny: we got to a point where we were really happy with our toolset and then we all switched over to Frostbite. That’s just the way it works in games, though; it constantly changes. It makes things very exciting and forces you to rethink how you do things, which is a really beneficial process.

BD: Frostbite does get a lot of praise though.

RB: Absolutely! It’s what we’ve been using on Garden Warfare and it’s been another interesting experience – coming into a new team, a new project, a totally new toolset and having to learn all of that with very little support as we had no audio programmer. It was kind of tough for us, but so much fun as well.

BD: Was it an intentional decision to move away from a big, epic franchise to what you’re doing now?

RB: Definitely. I really liked my position at BioWare. I like giving direction and I like working with people, but ultimately I’m a sound designer, and it got to a point where I was a little further away from the content than I would have liked. It’s one of the challenges you face when you’ve been around for a while: how do I stay true to my craft and passion while advancing in seniority?

I created a lot of content on ME2 and ME3, but I missed being truly hands-on on a day-to-day basis, and eventually a great opportunity came up here. I love Plants vs Zombies; it’s a fantastic franchise and a lot of fun. It couldn’t be more different from what I was working on before, so it was an exciting opportunity to create something different.

BD: How many people worked on the audio for Garden Warfare?

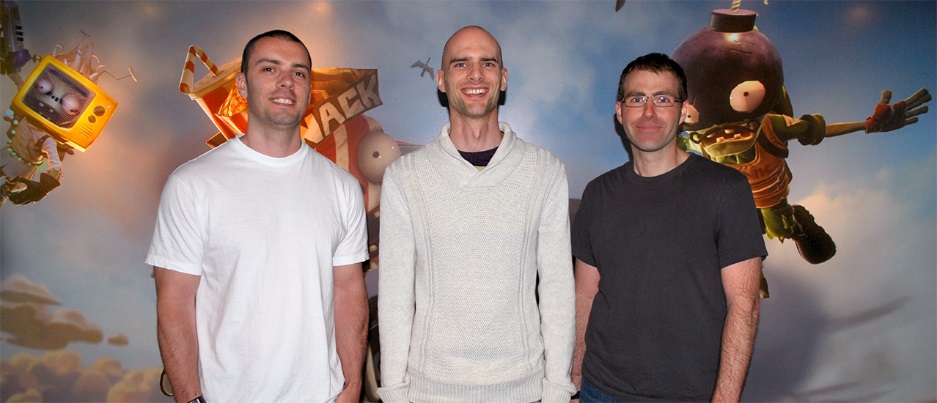

RB: Not many, about 2 and a half people. Anton Crnkovic worked on most of the project; he’s a super talented and creative guy and has worked on loads of projects like Skate and Resident Evil. Michael Berg is also a veteran, working on loads of EA games like Need For Speed; he worked on the project when things got busy towards the end and for our E3 demo. They’re both awesome guys that bring a lot of experience and imagination to the project, I was very lucky to work with them.

The three of us did all of the VO in-house, plus all the sound effects – a lot of the sounds are our own recordings too; there aren’t many library sounds. I think we ended up with about 10,000 sound effects, which was a great deal of work for the time we had.

BD: What were the earliest sound effects? Did you get anything from the original game?

RB: Yeah, we had the original sounds, but they are obviously pretty old now and not really designed for sparkly next-gen consoles. However, they were very well realized, really iconic stuff, so it was great to riff off of that and use it as inspiration.

Actually, we started out with Battlefield sounds in the game. When I first joined the team, the majority of the sounds were just straight Battlefield sounds that came with the engine. It was kind of hilarious having this really wacky, cartoony game with those over-the-top, cranked-to-11 military sounds from Battlefield.

BD: Is there anything from Battlefield left in the game?

RB: No, everything’s new, but it was interesting hearing the game like this, because you think, “Battlefield’s a good-sounding game; how would it feel over our game?” and it just totally, totally didn’t work. At the beginning of the project there was a big push from some quarters, creatively speaking, for us to create something that sounded very powerful and accessible to people who primarily play first-person shooters, and these sounds quickly proved that this would be a bad fit for the game. I’m glad I didn’t have to fight that battle after that.

We really wanted to have a quirky, fun and slightly abstract experience. But I guess there was a little bit of concern about whether we were going to stand up against all these big shooters. Especially at the start of production when the big games of the time were the likes of Gears of War, Halo, even Mass Effect. They’re hyper real, everything’s over the top and chunky. I’m glad that we proved right off the bat that it just didn’t fit well with the general aesthetic.

BD: I think that’s one of the game’s biggest strengths – because of its more colorful atmosphere it can pull in people who might not normally be interested in shooters.

RB: Yeah, it was one of our goals to appeal to a broad range of people. We wanted to make sure that it ticked the boxes for the players that like first person shooters but we also wanted it to appeal to those that traditionally wouldn’t play them. A lot of the developers on the team have kids so one of the key things that we wanted to do was to make a game that you could play with your own kids and not feel like it was too violent or too dumbed down for your own enjoyment. Trying to get that middle ground was our goal and I think that influenced a lot of the decisions that we made, including the audio. We really wanted to have a balance of satisfying power and empowering player feedback but also to contrast that with the light, wacky, comical dimension, while keeping true to the original game. It was kind of an interesting mix.

BD: Did you do a lot of the voice sounds for characters like the Peashooter, Chomper and the zombies?

RB: Yeah, that was one of the most fun parts for us. In the original P Vs Z, the zombies occasionally said ‘brains’ but the only character that really had a voice was Crazy Dave. In Garden Warfare we had all these really expressive characters that you interacted with in much more dynamic ways, so not having voices for them would feel really empty and flat. The game is all about the characters, that’s where our substance and comedy comes from. So we had to figure out what on earth a Peashooter sounds like for example. I mean, this thing is just like a bizarre walking green, conical mouth. We had no reference material as they’d made no sounds in the previous games but we really wanted to respect the franchise and do something that felt right for each character.

BD: You mentioned that there were a lot of random toys used, could you elaborate on what exactly some of them were?

RB: For example, with the Peashooter we tried a whole bunch of different things. We tried a variety of horns and other instruments that looked similar to the Peashooter’s mouth, to try to capture his appearance. Naturally, horns aren’t as expressive and lack the organic quality that a voice has. A lot of children’s toys aren’t very expressive either; they generally make one sort of sound, but they often resonate really well with the youthful vibe we were aiming for. The mouth is very expressive and dynamic, so we wanted to capture the organic-ness of the mouth but through a toy-like, youthful sort of sound.

For the Peashooter we ended up starting with a specific type of metal kazoo, which allows you to create the punctuation and natural pattern of a voice. We then vocoded that through a German nose flute: it’s this weird contraption that you stick between your mouth and nose to create a whistling vocal sound.

BD: So you breathe out through both at the same time?

RB: You breathe out through your nose and it uses the shape of your mouth to make the tones, so you get this sort of breathy, whistly vowel sound. By vocoding them together, the nose flute gave the kazoo the mouthy vowel characteristics that the straight kazoo lacks and created the expressiveness that we really needed. So each line needed a double performance: one for the rhythmic ‘words’ and the other for the intonation and vowels.
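For readers who haven’t used one, a vocoder can be sketched as a basic channel vocoder: split both signals into frequency bands, follow the loudness envelope of each band of the modulator, and use it to scale the matching band of the carrier. The Python sketch below assumes the nose flute plays the modulator (supplying the vowel shapes) and the kazoo the carrier, which is one reading of the description above. It is purely illustrative, not the plug-in or chain used on Garden Warfare, and the band centers, Q and smoothing time are arbitrary choices. The test signals are synthetic stand-ins, not recordings.

```python
import math

def bandpass_coeffs(fs, f0, q):
    """Band-pass biquad from the RBJ Audio EQ Cookbook (0 dB peak gain), normalized by a0."""
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (-2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
    return b, a

def biquad(x, b, a):
    """Run a Direct Form I biquad over a list of samples."""
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for s in x:
        out = b[0] * s + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        x1, x2 = s, x1
        y1, y2 = out, y1
        y.append(out)
    return y

def envelope(x, fs, smoothing_ms=10.0):
    """Follow a band's loudness: full-wave rectify, then smooth with a one-pole low-pass."""
    coeff = math.exp(-1.0 / (fs * smoothing_ms / 1000.0))
    env, level = [], 0.0
    for s in x:
        level = coeff * level + (1.0 - coeff) * abs(s)
        env.append(level)
    return env

def channel_vocoder(carrier, modulator, fs,
                    centers=(200, 400, 800, 1600, 3200, 6400), q=4.0):
    """Scale each carrier band by the modulator's envelope in the same band.

    Band centers must stay below fs / 2; the defaults are arbitrary choices.
    """
    n = min(len(carrier), len(modulator))
    out = [0.0] * n
    for f0 in centers:
        b, a = bandpass_coeffs(fs, f0, q)
        carrier_band = biquad(carrier[:n], b, a)
        mod_env = envelope(biquad(modulator[:n], b, a), fs)
        for i in range(n):
            out[i] += carrier_band[i] * mod_env[i]
    return out

# Usage with synthetic stand-ins (not real recordings):
fs = 16_000
n = fs // 10  # 100 ms
kazoo_like = [2.0 * ((110 * i / fs) % 1.0) - 1.0 for i in range(n)]  # buzzy sawtooth carrier
flute_like = [math.sin(2 * math.pi * 800 * i / fs) for i in range(n)]  # whistly sine modulator
voiced = channel_vocoder(kazoo_like, flute_like, fs)
print(len(voiced), max(abs(v) for v in voiced))
```

A silent modulator produces silence, which is the defining behavior: the carrier is only heard where, and as loudly as, the modulator has energy in that band.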

There was a whole bunch of other stuff that we recorded, though. Lots of splats, twangy things and toys… the sort of noises you’d make when you were bored in school as a kid, like with a ruler on a desk. For example, a core element of the RPG is a popgun that we just distorted the hell out of. We recorded it with a high-definition microphone, so there was loads of high-frequency content. Then you pitch it down, so you get this explosion that sounds massive but still bright. I record pretty much all of my sounds on this microphone, which records up to 100 kHz. I usually record at a 192 kHz sample rate, and then when you slow it down it still has all of these really high-frequency details but with this deep and powerful bass.
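The arithmetic behind this trick is simple: plain varispeed playback (no time-stretching) multiplies every frequency in the recording by the speed factor, and a recording can only hold content up to half its sample rate. The sketch below assumes simple varispeed and uses a hypothetical 200 Hz "thump" as the example low end.

```python
def nyquist(sample_rate_hz):
    """Highest frequency a recording at this sample rate can represent."""
    return sample_rate_hz / 2.0

def varispeed_frequency(original_hz, speed):
    """Where a frequency lands when playback speed is scaled (pitch and duration change together)."""
    return original_hz * speed

RECORD_RATE = 192_000  # sample rate quoted in the interview
SESSION_RATE = 48_000  # delivery rate quoted in the interview

top = nyquist(RECORD_RATE)  # 96 kHz, just under the mic's quoted 100 kHz range
for speed in (1.0, 0.5, 0.25):
    # The 200 Hz thump is a made-up example value.
    print(f"speed {speed}: 200 Hz thump -> {varispeed_frequency(200, speed)} Hz, "
          f"{top} Hz detail -> {varispeed_frequency(top, speed)} Hz")

# At quarter speed the full 0-96 kHz capture lands exactly in a 48 kHz session's 0-24 kHz band.
print(varispeed_frequency(top, 0.25) == nyquist(SESSION_RATE))
```

The same factor that drops the body of the sound into deep-bass territory pulls previously inaudible ultrasonic detail down into the audible range, which is why the result stays bright.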

BD: I think you once mentioned doing that on Mass Effect as well, correct?

RB: Yeah, I did. We used the same mic to do a lot of the creature sounds and a bunch of other stuff too, it’s pretty interesting to use. It doesn’t work well for everything but because this game is so vocally focused, it was really helpful on this too.

The interesting thing when recording with these mics at high sample rates is that there’s a lot of stuff up in those higher registers, but the end product strips out all that information, as we’re playing back at data-compressed 48 kHz. We do a LOT of limiting/compression for creative effect when making sound effects, so the limiter in your session will often be reacting heavily to frequencies that aren’t going to be audible in the final assets, and you can get some weird behavior from limiters reacting to ultimately inaudible sounds. So I’ve recently taken to recording at high sample rates, vari-speeding the recordings down to half speed (or whatever sounds good) and then dropping them into 48 kHz sessions, so that my crazy limiting is only reacting to what’s going to be heard by the end user. I’ve done a lot of testing on this, and this approach makes a huge difference to the sound if you’re doing aggressive processing.
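Why the limiter misbehaves can be shown with a toy example: a peak detector can’t tell audible from ultrasonic energy, so an inaudible component riding on top of an audible tone triggers extra gain reduction. The sketch models a static peak limiter (one gain that brings the loudest sample down to a threshold), not any particular plug-in, and the tones and threshold are made-up values. The 60 kHz component was chosen because at half speed it would sit at 30 kHz, above the 24 kHz ceiling of a 48 kHz session, so in the workflow described above it would never reach the limiter.

```python
import math

FS = 192_000  # assumed recording rate

def tone(freq_hz, amp, n, fs=FS):
    """A sine test tone as a list of samples."""
    return [amp * math.sin(2 * math.pi * freq_hz * i / fs) for i in range(n)]

def mix(*signals):
    """Sum signals sample by sample."""
    return [sum(samples) for samples in zip(*signals)]

def limiter_gain(signal, threshold=0.5):
    """Static peak limiter: the linear gain that keeps the loudest sample at the threshold."""
    peak = max(abs(s) for s in signal)
    return min(1.0, threshold / peak) if peak > 0 else 1.0

n = FS // 100  # 10 ms
audible = tone(1_000, 0.5, n)     # what the player will actually hear
ultrasonic = tone(60_000, 0.4, n)  # gone once the sound is delivered at 48 kHz

gain_clean = limiter_gain(audible)
gain_dirty = limiter_gain(mix(audible, ultrasonic))
print(f"audible only: {gain_clean:.3f}, with ultrasonic content: {gain_dirty:.3f}")
```

In the second case the audible tone gets pushed down hard even though the component causing it will never be heard.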

BD: Do you recall what you used for the Zombot drone? The propeller sounded like it was vocally performed.

RB: Ha ha, yeah. I used a helicopter to get the choppy sounds and vocoded that with a robotized version of me making ‘ahoowoohwoo’ noises. I wanted a vocal version of the rhythm and pattern of a helicopter sound.

BD: It sounds almost like when you breathe through a fan.

RB: Yeah, that’s kind of what I wanted, I wanted it to have that choppy propeller sound but because he’s basically just this big face, I wanted to make sure it had a sort of vocal quality. I would’ve given the actual character a voice itself but it doesn’t really interact in any way, it just fires. By giving the propeller a vocal quality I felt like I was imbuing some sort of character to it.

BD: What strengths does Frostbite have over other engines you’ve used?

RB: It’s got a number of things, but the two biggest for me are its integration of audio and the open ended audio tools. It’s got a really steep learning curve, I can tell you that, but it’s certainly worth the effort.

I love having the unification of game and audio engine. There’s no distinction really, whereas with systems like Wwise and FMOD you have a separate tool outside the game engine, and you have to somehow integrate them together, and there’s always this sort of barrier to…

BD: Getting them to talk together.

RB: Exactly, just the fact that they’re not fully integrated makes it slower or limited in a lot of ways. With Frostbite, if I want to hook up a new sound to a weapon I can do it within seconds. If I want to modify the player’s weapon sound based on their health, it would take me seconds to do that. If I want to change the speed of the gunshot because it’s making it difficult to work with, I can go in and change that value directly in the same tool and then show the designers. Same thing with level sounds, I can just add a sound right there into the map and have easy access to whatever gameplay data I want. It’s been a lot of fun passing data backwards and forwards between game and audio, it’s all one big system. That, for me, is the most valuable strength of an integrated approach compared to, say, an Unreal/Wwise cross.

The audio tools themselves are awesome as well. Frostbite has a graphical interface like Reaktor or Max/MSP, where you’re essentially designing schematics and systems visually rather than through code. It’s a lot more accessible for sound designers like me who don’t have a strong programming background. It’s super open-ended too; much of it is math, and you’re really only limited by your imagination. There are normally numerous ways to tackle any problem, which I find incredibly liberating.

BD: Battlefield is well known for its use of HDR, how was mixing Garden Warfare with it using Frostbite?

RB: HDR really helps create an extra feeling of focus and, obviously, dynamic range. I can’t imagine not using it anymore, having a reactive mix is expected in games these days.
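For context, the general HDR-audio idea associated with Frostbite and Battlefield is that sounds carry a "real-world" loudness, and a sliding window of fixed range is mapped onto the output: the window’s top jumps to the loudest active sound and then releases slowly, and anything that falls below the window’s bottom is culled. The sketch below is a minimal illustration under those assumptions; the class, loudness scale, 40 dB range and release rate are all hypothetical and not Frostbite’s API or settings.

```python
from dataclasses import dataclass

@dataclass
class Sound:
    name: str
    loudness_db: float  # authored "real-world" loudness (hypothetical scale)

class HdrWindow:
    """Toy HDR mixer: a window of `range_db` whose top tracks the loudest sound."""

    def __init__(self, range_db=40.0, release_db_per_s=10.0):
        self.range_db = range_db
        self.release_db_per_s = release_db_per_s
        self.top_db = None

    def update(self, sounds, dt):
        """Return each sound's output level in dB (0 = window top), or None if culled."""
        if not sounds:
            return {}
        loudest = max(s.loudness_db for s in sounds)
        if self.top_db is None or loudest >= self.top_db:
            self.top_db = loudest  # attack instantly on loud events
        else:
            # Release slowly so quiet details fade back in gradually.
            self.top_db = max(loudest, self.top_db - self.release_db_per_s * dt)
        bottom = self.top_db - self.range_db
        return {s.name: (None if s.loudness_db < bottom else s.loudness_db - self.top_db)
                for s in sounds}

hdr = HdrWindow()
print(hdr.update([Sound("footsteps", 40), Sound("birds", 35)], dt=0.016))
print(hdr.update([Sound("explosion", 120), Sound("footsteps", 40)], dt=0.016))
print(hdr.update([Sound("footsteps", 40)], dt=1.0))  # window still recovering
```

In a quiet scene the footsteps sit at the top of the window; once an explosion arrives they drop out entirely and only return as the window releases, which is the "extra feeling of focus" described above.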

I like to mix iteratively. I’m not particularly keen on everyone just dumping everything in and then doing a pass at the end. I like to slowly build up the mix over a period of time, so I would go through the content and try things out; try changing HDR values, try remixing all of the weapons and just seeing how it felt.

For me, mixing is all about relevance and what that means to any specific project. On this game the mix was an interesting journey because I’m used to Mass Effect and it being a predominantly single player game. Mass Effect is all player focused, you’re the center of the universe in that game technically and narratively, it has a cinematic approach and therefore normally you only care about what you are looking at; that’s the relevance in that game. Often on Mass Effect I’d turn down sounds that weren’t in your field of view. That was quite vital to the mix, especially on ME3. I really focused the mix on what was significant at the time rather than, say, something loud you’re walking past that isn’t important narratively or emotionally speaking.

I went into Garden Warfare with that sort of mixing philosophy in mind – I want to reduce things that are off screen to emphasize your visual experience as it’s so vivid – but because it is entirely multiplayer, everything’s potentially important. That person behind you could be sneaking up for the kill and it’s vital that you hear that, maybe that’s more important than something in front of you; the sound is a tool to inform the player of threats and opportunities. So we instead focused on not just what you’re doing but also what people are doing to you. Anything not concerning you isn’t as relevant.

I brought in quite a heavy reduction on things that aren’t facing you… I might have gone a bit overboard (laughs), but I found that I had to do it in order to clean the mix up, because otherwise it’s chaos. I mean, there are 24 players running around, maybe another 50 turrets and AI zombies. You’ve got stuff exploding all over the place, and it’s quite a small, contained area, usually with one bottleneck or base that’s attracting everyone and their noise. It was really difficult to create some sort of coherency in that amount of sonic confusion. So having really strong directional cones on sounds meant that we could filter out some of the chaos that isn’t relevant to you. We also use lots of other mix tricks to focus on relevance, like turning down your teammates’ weapons, as they’re not actionable information, and turning up the player’s bullet impacts, as the surface type will tell you when you’re hitting targets. And all of this is dynamically modified by the HDR settings; all the systems work together to make more sense of the battle.
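A directional cone boils down to a gain that depends on the angle between the way a sound’s emitter is facing and the direction to the listener. The sketch below borrows the cone formula from the Web Audio API’s `PannerNode` (`coneInnerAngle`, `coneOuterAngle`, `coneOuterGain`), simplified to 2D. Frostbite’s own cone implementation isn’t described in the interview, and the default angles and outer gain here are arbitrary assumptions.

```python
import math

def cone_gain(source_pos, source_facing, listener_pos,
              inner_angle_deg=60.0, outer_angle_deg=180.0, outer_gain=0.3):
    """Linear gain for a sound based on how far its emitter faces away from the listener.

    Inside half the inner angle: full volume. Beyond half the outer angle: outer_gain.
    In between: linear interpolation, as in the Web Audio PannerNode cone model.
    """
    to_listener = (listener_pos[0] - source_pos[0], listener_pos[1] - source_pos[1])
    dist = math.hypot(*to_listener)
    facing_len = math.hypot(*source_facing)
    if dist == 0 or facing_len == 0:
        return 1.0  # no meaningful direction: leave the sound untouched
    cos_angle = (to_listener[0] * source_facing[0]
                 + to_listener[1] * source_facing[1]) / (dist * facing_len)
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    inner = inner_angle_deg / 2.0
    outer = outer_angle_deg / 2.0
    if angle <= inner:
        return 1.0
    if angle >= outer:
        return outer_gain
    t = (angle - inner) / (outer - inner)
    return (1.0 - t) + outer_gain * t

# A gun at the origin pointing along +x, heard from three positions:
print(cone_gain((0, 0), (1, 0), (10, 0)))   # aimed at you
print(cone_gain((0, 0), (1, 0), (-10, 0)))  # aimed away from you
print(cone_gain((0, 0), (1, 0), (10 * math.cos(math.radians(60)), 10 * math.sin(math.radians(60)))))
```

A very low `outer_gain` is the "heavy reduction" end of the scale: anything not pointed at you is pushed well down in the mix.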

For the future, I want to enhance our mix so that it dynamically adjusts the amount of directional cone reduction based on the chaos level of the mix: essentially tightening up the focus when things are insane and easing off when things are quiet. Not only would that sound cleaner, but it corresponds to our own experience; when stuff gets crazy, humans tend to focus on immediate threats. I would love to have a lot more adaptiveness like this in the mix, a more fluid mix reaction based on relevance to your experience. That’s next gen to me.
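The adaptive idea could be prototyped as a mapping from a "chaos" measure to a cone’s outer gain. This sketch assumes the number of currently active voices as that measure; the function name, thresholds and gain values are hypothetical tuning choices, not anything from Garden Warfare.

```python
def adaptive_outer_gain(active_voices, calm_voices=8, chaotic_voices=64,
                        relaxed_gain=0.7, tight_gain=0.2):
    """Tighten cone attenuation as the mix gets busier.

    At or below `calm_voices` the cone is gentle (relaxed_gain); at or above
    `chaotic_voices` it is tight (tight_gain); in between it interpolates linearly.
    """
    if chaotic_voices <= calm_voices:
        raise ValueError("chaotic_voices must exceed calm_voices")
    t = (active_voices - calm_voices) / (chaotic_voices - calm_voices)
    t = max(0.0, min(1.0, t))  # clamp to the calm..chaotic range
    return relaxed_gain * (1.0 - t) + tight_gain * t

for voices in (4, 36, 120):
    print(voices, adaptive_outer_gain(voices))
```

In a real mixer this value would likely also be smoothed over time so the focus doesn’t pump audibly as voices start and stop.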

BD: Who did the music for the game?

RB: Peter McConnell was our composer; he also did the music on Plants Vs Zombies 2. He was awesome to work with, and very open to trying out all sorts of wacky ideas. The original music for P Vs Z 1 and 2 has a quirky, retro style. It’s got a really nice, happy bounce to it, and our game is a little bit more edgy. We had various initial concepts, ranging from heavily stylized 80s hair metal to 60s surf rock like Dick Dale…

BD: Where they have like the tremolo guitar?

RB: Yeah, exactly. That stuff’s super cool and it ties into the whole nuclear-family, 60s-suburbia style that P Vs Z touches on. I really liked that angle, but we ended up going with more of a crossover of military percussion and 80s synth pop with a nice retro feel to it. So we had the P Vs Z feel but with a little bit more edge, crossed with the military-style drums, percussion and brass that helped reinforce the combat angle.

BD: I liked the orchestral material in the boss battles too.

RB: Outside of the core music we used different styles for different contexts. For the Gargantuar boss piece, who’s this giant stupid troll character, I wanted it to feel like a zombie choir. I wanted to have something really dark and foreboding but still comical. A whole bunch of us from the dev team sang in it, it was a lot of fun…it’s the first time I’ve actually deliberately needed bad singing!

The music implementation was interesting because we have four different game modes and each of them has totally different music requirements because, again, the focus is different.

The Co-op mode, which is the closest to traditional P Vs Z, has a more standard interactive music system with interleaved streams that switch depending on gameplay. In Gardens & Graveyards mode I wanted to use the music as a mechanic to tell the player who controlled the bases. For plant bases we had the scarecrow singing a song similar to “If I Only Had a Brain” from The Wizard of Oz. When the zombies take over the base, they’re singing like in the David Bowie film Labyrinth. It’s more mischievous, like a gang of zombie gremlins. It’s all pitched and filtered based on how ‘captured’ the base is, so the player can instantly tell the status of the base, but in a way that’s still fun. We could have done this with sound effects, but with the music it was much more immediate and didn’t require as much ‘learning’ on the player’s part. In deathmatch we focused the music treatment on the time remaining, and Gnome Bomb was all about control of the bomb and its impending destruction.
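Tying music pitch and filtering to capture progress is essentially a parameter mapping. The sketch assumes one plausible reading, where the defenders’ song drops in pitch and gets more muffled as the attackers’ capture progresses; the function name, the 3-semitone drop and the cutoff range are hypothetical values for illustration only.

```python
def capture_music_params(capture, max_detune_semitones=-3.0,
                         open_cutoff_hz=18_000.0, muffled_cutoff_hz=800.0):
    """Map a base's capture progress (0 = held, 1 = fully captured) to music settings.

    Returns (playback_rate, lowpass_cutoff_hz). The playback rate is a varispeed
    ratio, so tempo changes along with pitch unless the engine time-stretches.
    """
    c = max(0.0, min(1.0, capture))
    semitones = max_detune_semitones * c
    playback_rate = 2.0 ** (semitones / 12.0)  # equal-tempered pitch ratio
    # Interpolate the cutoff exponentially so the sweep sounds even to the ear.
    cutoff = open_cutoff_hz * (muffled_cutoff_hz / open_cutoff_hz) ** c
    return playback_rate, cutoff

for progress in (0.0, 0.5, 1.0):
    rate, cutoff = capture_music_params(progress)
    print(f"capture {progress:.0%}: rate {rate:.3f}, cutoff {cutoff:.0f} Hz")
```

Interpolating the cutoff on a log scale is a common choice for filter sweeps, since equal steps in progress then sound like roughly equal changes in brightness.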

BD: Anything upcoming that you can tell us about, like DLC or anything else?

RB: We have lots of cool ideas for the future. I can’t talk about any of it unfortunately but there’s been a really good response to the game, people seem to be loving it so that bodes really well for the future. We’ve got a big screen out by the coffee station where we can see how many people are playing the game and it’s amazing that despite so many big and great games like Titanfall coming out recently, it really hasn’t impacted our numbers. People are really playing the game like crazy. Within a week someone had put in well over a hundred hours, that’s just crazy, you know?

There are so many things we can do with it, though; I think that’s the great thing about the franchise. We loved working on it, it’s been so much fun and I think that comes through when you play it. It’s really resonated with people and it’s been so nice working on something with a focus on fun and enjoyment. Sonically it’s given me a totally different perspective on tonality and focus, plus it was a blast to work on!

Many thanks to Brad and Rob for taking the time to put together this interview.

Fantastic interview, Rob continues to be a massive inspiration!

Excellent interview, thank you for this! What a switch, going from the cinematic, hyperreality of the ME franchise to the over-the-top, goofy and comical PvZ. Rob has a really impressive skillset and it shows. Awesome work to both.

Really enjoyed reading this interview, particularly the points comparing to your Mass Effect days. The FrostBite setup does indeed sound liberating, in an industry where often you have to argue for audio code support. Nice to think about using those heavy weight tools in this cartoon context. Fun stuff!