Guest Contribution by Neil Cullen

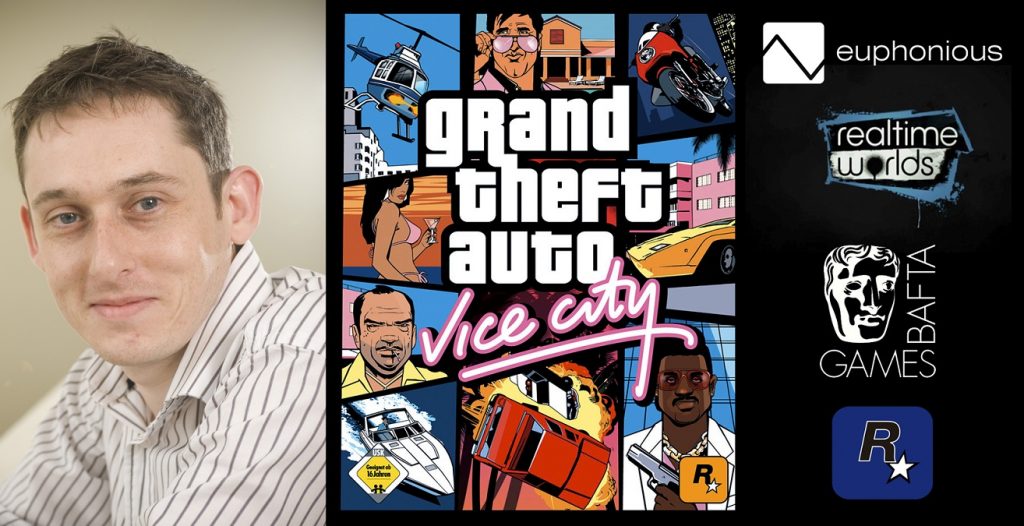

Raymond Usher started work in the games industry in 1992 as a sound designer at DMA Design, working on a number of titles, most notably the original Grand Theft Auto games. He became the company’s senior audio programmer and, through the release of GTA III, was part of its metamorphosis into Rockstar North. Raymond stayed at Rockstar until the end of development on GTA: Vice City before moving to the newly formed Realtime Worlds as Audio Director for Crackdown. After that company’s collapse, Raymond founded Euphonious, an independent audio production company providing direction, sound design, audio programming and music licensing services for developers. A double BAFTA award winner for Vice City and Crackdown, Raymond has been at the forefront of game audio for over 20 years.

Neil Cullen: Your company, Euphonious, handles audio outsourcing for the games industry. Could you describe your services and how they fit into the development cycle?

Raymond Usher: Euphonious has officially been going for about two and a half years. After Realtime Worlds collapsed, there were a lot of small studios doing small mobile and Facebook games, and we sort of started doing sound for them as a favour. It made me think we could make a go of this, and two and a half years later I’ve lost count of the number of projects we’ve done. We tend to do about 20 or 30 a year, ranging from mobile stuff right through to AAA titles such as the Lego games developed by Traveller’s Tales, for whom we did a lot of the cut-scene work. In general a lot of the work comes from people I know, but a lot of it also comes from recommendations and from going out and meeting people at various events. It varies from project to project: on some we come on quite early, while on others the project’s almost complete. It’s really a case of playing the builds, getting a list of requirements together and going off and getting that done, working with video captures, and just keeping in touch with the companies. A lot of the folk I work with are quite local, while others are not, so we Skype.

My main job is directing the audio development and business development, and we have a couple of guys who do most of the audio production work. Some projects last a few days, some a few months, and we always have about 3 or 4 on the go at once. A lot of the projects we’re working on now are frequently updated after they’re released. Off the top of my head there must be about a dozen companies that we do regular work for, and as more companies come online we just build up relationships with them. I suppose with the kind of projects that we do, it can be quite tempting for the development team to want to keep costs low, so we’ve always got that in mind and we try to give value for money. The majority of the work is for small and medium sized companies working mostly on mobile or web-based games. If you have somebody in house, you need to pay their salary and buy the equipment, and in small offices space is at a premium, so having enough room for a sound guy to monitor comfortably can be difficult. Traveller’s Tales have a sizeable audio department, but they have a lot of projects on, so they outsource the cut-scene work as a self-contained block which has its own tight deadlines. With the small and medium sized companies, our thinking is that as they grow and become more popular, so do we.

There’s our Audioskins range as well: sound packs that we call shrink-wrapped audio for a game. We see it as an opportunity for anyone involved in audio to produce these packs, and we’ll put them up and sell them. We make internal ones, but we’re trying to create opportunities for other folks too.

We also do technical development and R&D projects. With Prototype funding from the University of Abertay we are looking at building an audio middleware solution for mobile. We have a system up and running, and we’re now looking at ActionScript and Adobe Flash, with the Adobe AIR platform, and HTML5. Working as an outsourcing company, you produce the audio for the game and hand it off, then you might get a build back and something’s not quite right. So we’ve got a version of an audio engine that we’re just finishing off now, and the idea is that we would be able to get a build of the game and make adjustments to the audio ourselves, without having to bother the developers.

For me this is a bit like history repeating itself. When I used to do sound design and music, I’d hand it over and it’d never be quite right the first time. That’s why I got into audio programming originally, and now it’s come full circle and I’m doing that again. When I joined DMA it was relatively small, and there was nobody specifically programming the audio; it was just whoever was available. I’ve always said that implementation is half of the audio experience, and there’s no point in having great sound if it’s not implemented well. It’s like making a record: you can play the instruments, but you need a sound engineer to make the sound great.

NC: What effect do you think the rise of audio middleware like FMOD and Wwise has had on the industry?

RU: It’s all about putting more power into the hands of the audio designer, letting them choose which WAV files to pick between at random, or set pitch and volume offsets. That’s been done in middleware for years now, but when I was working at DMA and Rockstar it was all done manually, and calling one cue could trigger four or five WAV files, all randomly pitched. If we can get that logic into the hands of the audio designer, the quality of the audio goes up and it makes things easier for development as well.
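To make the idea concrete, here is a minimal Python sketch of the kind of randomised cue described above: one call picks a WAV file from a pool and applies random pitch and volume offsets. It is not how DMA, Rockstar, FMOD or Wwise implement this; the `Cue` class, its fields, the file names and the no-immediate-repeat rule are all hypothetical, chosen only for illustration.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, field


@dataclass
class Cue:
    """Hypothetical randomised cue: a pool of WAV variations plus offset ranges."""
    wav_files: list[str]
    pitch_range_semitones: float = 0.0  # random pitch offset of +/- this many semitones
    volume_range_db: float = 0.0        # random volume offset of +/- this many dB
    avoid_repeat: bool = True           # never pick the same file twice in a row
    _last: str | None = field(default=None, init=False, repr=False)

    def trigger(self, rng: random.Random) -> dict:
        pool = self.wav_files
        if self.avoid_repeat and self._last is not None and len(pool) > 1:
            pool = [f for f in pool if f != self._last]
        wav = rng.choice(pool)
        self._last = wav
        semitones = rng.uniform(-self.pitch_range_semitones, self.pitch_range_semitones)
        gain_db = rng.uniform(-self.volume_range_db, self.volume_range_db)
        return {
            "wav": wav,
            "pitch_ratio": 2 ** (semitones / 12),  # playback-rate multiplier
            "gain": 10 ** (gain_db / 20),          # linear amplitude multiplier
        }


if __name__ == "__main__":
    footstep = Cue(["step_01.wav", "step_02.wav", "step_03.wav", "step_04.wav"],
                   pitch_range_semitones=1.0, volume_range_db=2.0)
    rng = random.Random(42)  # seeded so runs are repeatable
    for _ in range(4):
        print(footstep.trigger(rng))
```

The point of the design is that the pool and the ranges are data a sound designer can edit, while the game code only ever calls `trigger`.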

FMOD and Wwise are the kind of solutions I could really have done with when I was working on the GTA games, and even to an extent on Crackdown at Realtime Worlds. By the time of Crackdown, FMOD was around, but it wasn’t the Designer or Studio package we’re now familiar with, and XACT (Microsoft’s audio engine) didn’t have all of the features that we wanted, so I was writing these sorts of engines from scratch. These middleware tools are fantastic solutions: they’ve improved not just the quality but the whole development cycle, the pipeline, the iteration. You still need someone to handle the triggers, but as a sound designer you can now get sounds into the game and work on the volume mix etc. yourself. Part of the reason I’m looking at mobile and Flash middleware is that these solutions are primarily aimed at bigger-budget console and PC titles, and there’s a different set of requirements.

All of the set-up work, getting systems in place that allow designers to load their sounds and be creative, is what audio middleware is all about. When I was an audio programmer, part of the role was creating these engines, but the other part was going through the game code and knowing where to put the function calls to trigger sound effects. Designers would ask: can we put this cue here, change the volume or pitch, and add filtering based on the time of day, the speed of the car or the surrounding geometry? I always encourage folks interested in sound design who have a little bit of technical understanding to do a bit of audio coding. You don’t need to be the best programmer; you just need to know how to define the steps. That opens up a lot more opportunities for the audio experience, because you can go into the code yourself and place the one line that plays an audio event at a certain time, and middleware makes that easier. Without the ability to get into the code and add a line or change something, you’re always going to be at the mercy of the programmer. If you have a great audio programmer, or someone who understands audio, fantastic, but if you’ve got someone who’s also doing the front end or the AI, then it might not be as high on their priority list.
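The split described above, where the game code makes one call and the designer owns the data, can be sketched roughly as follows. This is a hypothetical Python illustration, not the FMOD or Wwise API: `AudioEvents`, `post_event`, `set_parameter`, the JSON layout and the `car_speed` mapping are all invented names, assuming a designer-edited data file that maps a game parameter onto pitch and volume.

```python
import json

# Hypothetical designer-authored data: which file an event plays and how a
# game parameter (here "car_speed", in km/h) maps onto pitch and volume.
EVENT_DATA = json.loads("""
{
  "engine_loop": {
    "wav": "engine_loop.wav",
    "parameter": "car_speed",
    "pitch": [[0, 0.8], [200, 1.6]],
    "volume": [[0, 0.5], [200, 1.0]]
  }
}
""")


def interpolate(points, x):
    """Piecewise-linear lookup over sorted [x, y] points, clamped at both ends."""
    if x <= points[0][0]:
        return points[0][1]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    return points[-1][1]


class AudioEvents:
    """Hypothetical event system: game code names events, data defines them."""

    def __init__(self, event_data):
        self.events = event_data
        self.params = {}

    def set_parameter(self, name, value):
        self.params[name] = value

    def post_event(self, name):
        """The single call the game code makes; everything else comes from data."""
        ev = self.events[name]
        x = self.params.get(ev["parameter"], 0.0)
        return {
            "wav": ev["wav"],
            "pitch": interpolate(ev["pitch"], x),
            "volume": interpolate(ev["volume"], x),
        }


if __name__ == "__main__":
    audio = AudioEvents(EVENT_DATA)
    audio.set_parameter("car_speed", 100)
    print(audio.post_event("engine_loop"))  # pitch about 1.2, volume about 0.75
```

Because the curve lives in the data file, changing how the engine sound responds to speed means editing JSON rather than asking a programmer to change game code.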

Game sound designers still need to know about the technical aspects of what the hardware can do, things like knowing you can only decompress one MP3 at a time on iOS. When I was doing console work, for instance GTA III and Vice City on the PlayStation 2, there was a fixed memory budget for audio, so I’d work with the audio designer to create a memory map defining which bits could be swapped out. Not all of the sound needed to be loaded at once, and we had to figure out how to manage that. It’s advantageous for sound designers to have a technical understanding so they can get the best out of the hardware and know what’s possible.
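A memory map like the one described can be reduced to a simple budget check: banks that stay resident for the whole session, plus a shared slot into which swappable banks are loaded one at a time. The Python sketch below assumes that layout; `plan_memory_map`, `SwapSlot`, the bank names, the sizes and the 2048 KB budget are all illustrative, not figures or code from GTA III or Vice City.

```python
def plan_memory_map(budget_kb, resident, swappable):
    """Check that always-loaded banks plus one swap slot fit a fixed audio budget.

    resident:  bank name -> size in KB, loaded for the whole session
    swappable: bank name -> size in KB, loaded one at a time into a shared slot
    """
    resident_kb = sum(resident.values())
    # The shared slot must be big enough for the largest bank that can occupy it.
    slot_kb = max(swappable.values(), default=0)
    used_kb = resident_kb + slot_kb
    if used_kb > budget_kb:
        raise ValueError(f"memory map needs {used_kb} KB but the budget is {budget_kb} KB")
    return {"resident_kb": resident_kb, "swap_slot_kb": slot_kb, "free_kb": budget_kb - used_kb}


class SwapSlot:
    """Tracks which swappable bank currently occupies the shared slot."""

    def __init__(self, swappable):
        self.swappable = swappable
        self.loaded = None

    def request(self, bank):
        """Return True if a load was needed, False if the bank was already there."""
        if bank not in self.swappable:
            raise KeyError(bank)
        if bank == self.loaded:
            return False
        self.loaded = bank  # a real engine would stream the bank from disc here
        return True


if __name__ == "__main__":
    resident = {"weapons": 400, "player": 300, "frontend": 100}
    zones = {"downtown_ambience": 600, "docks_ambience": 500, "beach_ambience": 550}
    print(plan_memory_map(2048, resident, zones))
```

With these made-up numbers the resident banks take 800 KB, the slot is sized for the 600 KB downtown bank, and 648 KB is left over; a bank that grows too large makes the check fail early rather than at runtime.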

NC: Before Euphonious, you worked on massive AAA titles across multiple generations with the GTA series and Crackdown. How did the process change from project to project and between console generations?

RU: On big multi-million-dollar projects there can be a lot of repetitive stuff. When I was doing GTA III, for instance, I’d say there were about 18,000 lines of dialogue needing to be triggered; with Vice City there were 50,000 to 60,000. For Vice City, one of my friends was a consultant for DTS surround sound. On the PS2 at the time it was Dolby Pro Logic, I think, so it wasn’t discrete sound. Then DTS came along and we got discrete sound implemented. That was a bit of an experiment, and we also did some experiments with real-time sound reflections, analysing the surrounding geometry and figuring out how far away various walls were. It was an illusion: it basically created a virtual sound, then time-delayed and attenuated it, so that when a sound played you got an early reflection coming in that was directional, rather than just the standard reverb that was on the PS2 at the time. It was a simple implementation in GTA III; then in Vice City we didn’t use the system’s hardware reverb at all and just used our own reflection system.
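The reflection trick described above can be approximated with the image-source idea: treat each nearby wall as producing a mirrored copy of the sound, delayed by the extra path length and quieter with distance. The Python sketch below is a hypothetical illustration, not Rockstar’s code; `early_reflections` and `Reflection` are invented names, and it assumes the sound is emitted at the listener’s position, uses a 1/r spreading loss with a single absorption coefficient, and takes wall distances and directions as given rather than computing them from level geometry.

```python
from dataclasses import dataclass

SPEED_OF_SOUND_M_S = 343.0  # in air at roughly 20 degrees C


@dataclass
class Reflection:
    delay_s: float      # how long after the dry sound the echo copy starts
    gain: float         # linear amplitude of the echo copy
    azimuth_deg: float  # direction to pan the echo towards (0 = straight ahead)


def early_reflections(walls, absorption=0.3, ref_distance_m=1.0):
    """walls: (perpendicular distance in metres, azimuth in degrees) per nearby wall,
    measured from a listener who is also the sound source (e.g. the player's gun)."""
    reflections = []
    for distance_m, azimuth_deg in walls:
        # Image-source idea: the echo behaves like a copy of the sound mirrored
        # behind the wall, so its path is out to the wall and back (2 * distance).
        path_m = 2.0 * distance_m
        delay_s = path_m / SPEED_OF_SOUND_M_S
        # 1/r spreading relative to ref_distance_m, never louder than the dry sound,
        # then scaled by the fraction of energy the wall does not absorb.
        spreading = ref_distance_m / max(path_m, ref_distance_m)
        gain = (1.0 - absorption) * spreading
        reflections.append(Reflection(delay_s, gain, azimuth_deg))
    return reflections


if __name__ == "__main__":
    # A wall 3 m to the right and another 10 m to the left (illustrative values).
    for r in early_reflections([(3.0, 90.0), (10.0, -90.0)]):
        print(f"delay {r.delay_s * 1000:.1f} ms, gain {r.gain:.3f}, pan {r.azimuth_deg:+.0f} deg")
```

The near wall gives an echo about 17.5 ms after the dry sound, panned to the right, while the far wall’s echo arrives later and quieter from the left; that directionality is what a single global reverb cannot provide.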

Crackdown for Realtime Worlds is where that really took another step up. With GTA in the PS2 generation, there were various challenges you had to get over in order to get the best out of the system. There was a lot of music, and we even had to think about the layout of the DVD, putting cues at different locations on the disc to handle seek times, for instance. Moving on to the following generation of hardware, we were getting to a point where we were no longer as limited by the technology. There was more we could do with it, and we were thinking, “Well, just because we can doesn’t mean that we should.”

For example, there have been great debates about footsteps. In the past everybody used footsteps just because, but with the next generation of hardware we started to think more creatively about the sound design. We could have footsteps all of the time in a street scene, for example, but what if you didn’t use them all the time? Say there was a shot with a SWAT van, and the SWAT team burst out of the van and suddenly there are lots of footsteps. If there had been footsteps before from passers-by, I don’t think the effect would be as powerful, so I think this whole idea of selective mixing and selective implementation of the audio design is the next level. Audio middleware deals with all of the technological set-up, but I think the creative process of deciding when to play sounds and when not to is important. Silence is powerful too. Looking at film for examples, such as the techniques in Walter Murch’s work, I think that’s where a lot of the work will be in the future: deciding what to play and what not to play. There’s been this drive towards realism, and I’ve been guilty of it as well, getting hung up on having music coming from NPC cars etc., and now I’m looking back on it and thinking, well, it was great, but we don’t have to make everything realistic.

It depends on your audience as well. With Crackdown there was scope thanks to the story, with these agents with super abilities, and because of that there are little surrealist touches here and there. We were still in realism mode for most of it, but towards the end of the project we were doing things like the agency base in the middle, which had tunnels leading to each of the three cities. In the tunnels we added an ambient effect of a gang taunting you; it wasn’t realistic, but it added to the atmosphere of it all.

NC: Finally, you have conducted some research into the effect of audio in games. How would you rate its importance?

RU: We did some research with a couple of guys at Abertay using biometric harnesses, which has been published in the Computer Games Journal. [ed. This link goes directly to the volume in question.] From my perspective, over the years, being the audio guy, you’re trying to explain to a producer or someone who’s allocating budgets that it makes a difference, that it makes the experience more enjoyable, realistic or whatever. I guess with audio, if you do the job well, nobody notices, which is as it should be. The aim of our research was to get some usable data, some numbers to show that sound enhances the gameplay experience. There was no form filling; it was purely based on heart rate and breathing rate. When you’re playing a game you can’t disguise that stuff, and the readings were significant: the audio experience does make a difference. We plotted heart rate and respiration rate for three different kinds of games, a driving game, a casual game and a suspenseful thriller, and you notice things like the heart rate changing or someone holding their breath, things that just weren’t present when subjects played without sound.

That, however, was only scratching the surface. We’ve had a lot of good feedback, and maybe one day I (or someone else) can take it further. A lot of people have told me it’s been really helpful, as you’d hear stories of a development team testing a game and suddenly their testers’ lap times went up, or something similar. Their instinct was to attribute this to a change in the physics or AI code, when the only thing that had changed was the sound. The audio works on a subconscious level, so I don’t think an audio designer should primarily be trying to create a good audio experience; it’s about how it all works together. At the end of the day it’s a game, and it’s the overall experience that’s important.

Neil Cullen is Digital Projects Coordinator for the Royal Scottish National Orchestra (@RSNO) and was recently nominated for a BAFTA “Ones To Watch” award for his work developing a video game with a team from Beijing’s CUC. Neil has a keen interest in interactive audio and has worked for the BBC R&D audio team during his master’s thesis, as well as on the EU-funded FascinatE project looking into future interactive live broadcasting. You can follow Neil on Twitter @thatneilcullen, or check out his website at www.neilcullenprojects.com.

Thanks go out to both Neil Cullen and Raymond Usher for contributing this interview to the site. If you have something you’d like to share with the community, whether it be part of one of our monthly topics or not, please give us a shout!
