Virtual reality is a new frontier for audio and sound design. The industry is buzzing with potential and new technology, though many elements have yet to be figured out or agreed upon. Travis Fodor is an audio engineer, editor and sound designer who has worked with a variety of VR startups and 360 video projects as well as the Music and Audio Research Group at NYU. Andrew from Pro Sound Effects spoke with Travis about his thoughts, experiences, and predictions surrounding VR audio.

PSE: What is your typical workflow like when working on VR or 360 video projects?

TF: When creating ambiences for a realistic environment, I bring ambient elements into Pro Tools just like I would for a film or TV spot. Rather than having a two-minute ambience backing up a scene in a film, I go through and piece together smaller ambiences of 15-20 seconds that are looped and intertwined by script and the Unity Game Engine. Playing smaller clips when needed, rather than large audio files all at once, puts a lot less strain on the engine. I’ll also use hard effects, such as cars, birds, etc., but they are placed within the 3D space in Unity and implemented in much the same way as in a video game.
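To make the idea of stitching short ambience clips together concrete, here is a minimal offline sketch in Python. It is not Travis's Unity script: the function name `equal_power_crossfade` and the use of plain lists of samples are assumptions made purely for illustration. It joins two short clips with an equal-power crossfade so the seam between them is less audible.

```python
import math


def equal_power_crossfade(clip_a, clip_b, overlap):
    """Join clip_a to clip_b, blending the last `overlap` samples of clip_a
    with the first `overlap` samples of clip_b.

    Hypothetical helper for illustration; clips are plain lists of floats.
    """
    if overlap <= 0 or overlap > min(len(clip_a), len(clip_b)):
        raise ValueError("overlap must be between 1 and the shorter clip length")

    split = len(clip_a) - overlap
    blended = []
    for i in range(overlap):
        # t runs from just above 0 to just below 1 across the overlap.
        t = (i + 0.5) / overlap
        fade_out = math.cos(t * math.pi / 2)  # gain for the outgoing clip
        fade_in = math.sin(t * math.pi / 2)   # gain for the incoming clip
        blended.append(clip_a[split + i] * fade_out + clip_b[i] * fade_in)

    # Untouched start of clip_a, the blended seam, untouched rest of clip_b.
    return clip_a[:split] + blended + clip_b[overlap:]
```

The squared gains always sum to one, which keeps the perceived loudness roughly steady through the seam when the two clips are uncorrelated. In an engine, the same idea would be applied at playback time rather than by rendering one long file.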

For more of the sound design elements, I pretty much work exclusively in Massive and Absynth, two flagship synths that come inside NI Komplete 10. A particular challenge of VR audio is making sure the sound works well across the broad range of available and compatible platforms: Oculus Rift, Samsung Gear VR, HTC Vive, gaming PCs, MacBooks… Because of this, I’ve spent a lot of time mixing for different headsets and setups. I reference everything through a variety of headphones, with a focus on the Oculus Rift headset, in order to best recreate the listening environment of VR users.

What are your thoughts on the current state of VR (not necessarily audio-related)?

VR is here and it’s not going anywhere. The general hypothesis is that VR is going to take another couple of years before it takes off. I’m a strong believer that communication, film and gaming will take a strong turn toward VR much sooner.

Have you had any VR experiences yourself that really affected you?

Recently I’ve had two experiences that will stick with me for a long time. As a huge Rick & Morty fan, being able to stand next to Justin Roiland inside AltspaceVR as he drew characters from the show was absolutely awesome. Just last week, I was playing the HTC Vive game Battle Dome. Battle Dome is a first-person shooter where two teams shoot a variety of guns at each other in order to take the opposing team’s base. I happened to be playing as the sniper one particular round, and in order to get the best shot, I had to lie on the ground and peek around some cover. Once my knees and elbows touched the ground (in the real world), instead of optimizing my position to take out my opponent, I quickly realized, ‘Travis, you’re lying on your living room floor.’ It was quite a surreal moment for me because I truly felt I was somewhere I wasn’t. I always tell people about “that moment” when you get dropped into the immersion inside VR. I’ve personally witnessed over 100 people experience this, and it never gets old.

What trends are you seeing in the VR community?

Everyone in the VR community is so passionate about pushing VR forward. It’s important to realize that nobody is really a “VR expert”; they are a strong designer, game developer, or tech evangelist. The VR community is diving into uncharted territory. There’s a mix of exploration, risk, and innovation. In general, I would say that, unlike in any other creative medium, people are pushing the importance of sound from top to bottom. This is exciting for audio engineers. We’re going to start seeing the attention to audio that we’ve been looking for.

What are some typical challenges you’ve faced handling 3D audio for VR?

You have to be conscious of CPU resources for audio and even more conscious with 3D audio resources. Sometimes I have to turn off the 3D elements of a sound source and resort to traditional panning/amplitude to create the 3D spatialization, or even drop the sample rate of the audio file. Third party plugins are getting really good at spatialization, occlusion (almost), and other necessary tools for audio immersion. We’re going to need some more processing power before we can implement these tools from top to bottom. It gets tricky when you have to ship the same product made for a $1,500 gaming PC and a mobile phone. Optimization can be done towards both ends of the spectrum, but this brings an interesting dynamic that we haven’t really encountered before in gaming/tech.
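The fallback to traditional panning and amplitude described above can be sketched roughly as follows. This is an illustrative Python example, not code from any engine or plugin: the function name `pan_and_attenuate`, the 2D coordinate convention, and the `ref_distance` parameter are all assumptions. It combines constant-power stereo panning with simple inverse-distance attenuation, which is far cheaper than HRTF-based spatialization but cannot distinguish front from back or convey height.

```python
import math


def pan_and_attenuate(listener_xy, listener_yaw, source_xy, ref_distance=1.0):
    """Return (left_gain, right_gain) for a mono source.

    Assumed convention: yaw 0 means the listener faces +x, and angles grow
    counterclockwise, so +y is to the listener's left when yaw is 0.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    distance = math.hypot(dx, dy)

    # Direction of the source relative to where the listener is facing.
    azimuth = math.atan2(dy, dx) - listener_yaw

    # Map to a pan position: -1 is hard left, +1 is hard right.
    # Sources in front and behind collapse to the same pan value.
    pan = -math.sin(azimuth)

    # Constant-power pan law: left^2 + right^2 == 1 at every position.
    theta = (pan + 1.0) * math.pi / 4.0
    left = math.cos(theta)
    right = math.sin(theta)

    # Inverse-distance rolloff, with no boost inside the reference distance.
    attenuation = ref_distance / max(distance, ref_distance)
    return left * attenuation, right * attenuation
```

A source two units straight ahead ends up centered and at half level in both channels, while a source directly to the left plays almost entirely in the left channel.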

When do you use mono vs. multi-channel sound effects in VR? Do you see any implications with ambisonics for VR audio?

First, it’s important to differentiate between 360 video experiences and VR. 360 videos essentially wrap a screen around you (in every direction), and you as the viewer can sit back and turn your head all around to experience the film/game. VR, on the other hand, allows you to move and walk through the environment, changing your perspective on both the visual elements and the sound sources. 360 allows you to change your perspective, but only on the axis of your head. You can’t travel through a 360 video.

When shooting a 360 video, you should consider pulling some ambisonic recordings, or dropping in some mono sources to be spatialized inside a game engine. Combined with head tracking, you can quite literally replicate the audio experience that was recorded on set with this type of audio. At the Music & Audio Research Lab at NYU, we’ve recorded concerts in 360 with a variety of B-format recordings and then brought them into VR headsets. If you bring the audio into software such as Two Big Ears, you can add head tracking to the recorded production audio.
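As a rough illustration of how head tracking can be applied to a B-format recording, the sketch below counter-rotates a first-order sound field around the vertical axis by the listener's head yaw. It is a simplified Python example, not how Two Big Ears or any other tool implements it: the function name `rotate_bformat_yaw` is hypothetical, only yaw is handled (no pitch or roll), and it assumes the usual ambisonic axes where X points forward and Y points left.

```python
import math


def rotate_bformat_yaw(w, x, y, z, head_yaw):
    """Counter-rotate one first-order B-format frame for a head turn.

    head_yaw is in radians, positive when the head turns to the left
    (counterclockwise seen from above). W (omnidirectional) and Z (height)
    are unaffected by a rotation about the vertical axis.
    """
    c = math.cos(head_yaw)
    s = math.sin(head_yaw)
    # The scene rotates by -head_yaw relative to the head.
    x_rot = x * c + y * s
    y_rot = -x * s + y * c
    return w, x_rot, y_rot, z
```

For example, a source recorded straight ahead (energy in X, none in Y) moves to the listener's right, that is negative Y, once the head has turned 90 degrees to the left. The rotated channels would then be decoded to binaural or speaker feeds, which is outside the scope of this sketch.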

In my experience so far, I’ve mainly worked with mono sources for VR which are placed in 3D space by the game engine. However, we’re now at a point where we can begin to experiment with Ambisonics within a variety of VR experiences. This year we have a huge push of tech on both the hardware and software side. Google established B-format (First Order Ambisonics) as the default supported spatial audio spec for YouTube 360 & VR videos and their Jump camera rig. There are a lot of advantages to the format. I predict Ambisonics will continue to play a huge role in creating immersive VR experiences.

What is an aspect of VR audio that is still up for debate?

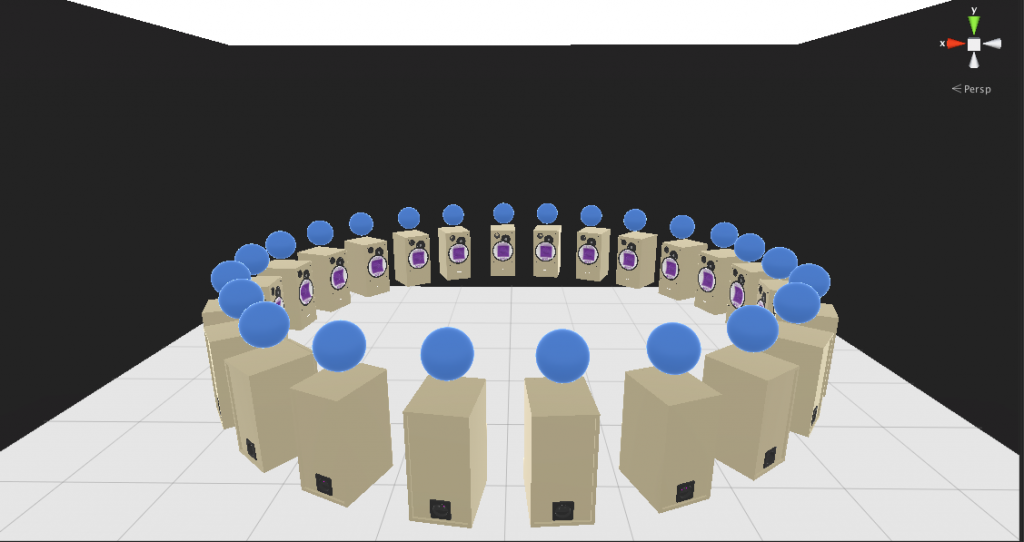

Occlusion—the way sound changes because of an object in its way. A ton of 3rd party plugins have been working on it, but nobody has nailed it yet. We don’t have a standardized HRTF engine fit for every user. Oculus and some third-party companies have released some HRTF localization tools for developers to use, but they work better for some people than others. I’m trying to work on that a bit at the NYU Music & Audio Research Lab (see image). Once we have occlusion working really well, and once every user can experience accurate localization, I think immersive audio will be in a great place.
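For readers unfamiliar with occlusion, a very crude approximation is to make a blocked source quieter and duller. The Python sketch below does this with a fixed gain and a one-pole low-pass filter. It is only an illustration under stated assumptions: the function name `occlude` and the default `cutoff_hz` and `gain` values are made up for this example, and real occlusion depends on geometry, materials, and diffraction, which is why it remains unsolved in practice.

```python
import math


def occlude(samples, sample_rate, cutoff_hz=800.0, gain=0.5):
    """Return a muffled, quieter copy of `samples` (a list of floats).

    Hypothetical helper: a one-pole low-pass removes high frequencies,
    then a fixed gain lowers the overall level.
    """
    # Smoothing coefficient for a one-pole low-pass at the given cutoff.
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    state = 0.0
    out = []
    for sample in samples:
        # Move the filter state a fraction of the way toward the input.
        state += alpha * (sample - state)
        out.append(gain * state)
    return out
```

In an interactive scene, the cutoff and gain would typically be driven by whether something sits between the listener and the source, and they would be smoothed over time to avoid audible jumps.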

How do you anticipate the VR audio field will change?

VR has made a lot of big companies realize the importance of audio. We are going to see a lot of audio engineers and sound designers hopping into VR and a lot of software engineers working on optimizing their tech for CPU-intensive audio experiences. If we look at the history of game consoles, we’re on a pretty steep increase in CPU allocation to audio. VR is only going to fuel that fire.

What excites you the most when you consider the future of VR?

Well, I’m excited from two angles. Through my work with various social VR platforms, I’ve seen firsthand that social VR is going to be the next platform for communication. I’m really looking forward to the day when the majority of my interactions with my friends switch from mobile apps (such as Facebook and Instagram) to VR.

On the more personal and creative side, I’m really excited for all the new challenges that VR brings to audio engineers. VR is carving out an entirely new field within music technology, with a role that is almost a cross between a sound designer and a 3D audio engineer. It’s really exciting to me that we can pioneer this new field.

Thank you to Travis Fodor for sharing his thoughts and Andrew Emge at Pro Sound Effects for conducting this interview. You can connect with Travis on Twitter at @travisfodor and on LinkedIn. You can also connect with Andrew Emge on LinkedIn and read more of his articles and interviews on the Pro Sound Effects blog.

Great post! I’m glad you differentiated between 360 and VR; many productions I’m on seem to be confusing the two (I am a location sound recordist and audio post engineer). Question: do you think we will have to continue using third-party video game engines to create 3D sound design (i.e., should I learn Unity or Unreal, and if so, which would you recommend?), or will we eventually have the ability to do all this within Pro Tools?

Hey There! Thanks for reading. Oculus has released some .aax plugins this past year for use within Pro Tools. I use them for prototyping soundscapes before bringing them into the Game Engine pretty often. I think we will be using Game Engines for a while on the integration side, but there’s definitely a push in DAW integration. I also use Reaper pretty much exclusively for 360 video because of the awesome Reaper ATK Toolkit.

Oculus Plugins:

https://developer3.oculus.com/documentation/audiosdk/latest/concepts/os-aax-usage/

Reaper ATK Toolkit:

http://www.ambisonictoolkit.net/

thx

Great question !

I don’t think game engines are going to fly in the audio world and Protools. I have tried them out.

There is a need to be able to sync hard fxs with video frames in VR and fast.

I know that Dolby is working on something. It’s the Wild West !!!!

and audio is always LAST haha

check out

http://www.meetup.com/Los-Angeles-VR-and-Immersive-Technologies-Meetup/

Hey Mark,

Thanks for reading! I’m going to have to kindly disagree with you on this one. Sure, in VR I agree that we’re pretty far from any Pro Tools/ VR system codevelopment, but today we can sync 360 Videos with tracking to audio sources in Reaper using Kolor Eyes and a connection over UDP. We can exclusively work inside Reaper to create immersive environments for 360 videos. Check it out here:

https://www.youtube.com/watch?v=lzLxmEIYBl4

I think you’re talking about two different things here, Travis. It looks to me like Mark was talking about utilization of game engines for linear media, and how they don’t integrate well with most DAWs, whereas you’re talking about 360 media workflows within DAWs. There are all kinds of workflows for 360 linear media though, whether you’re working in Pro Tools, Nuendo (which does integrate with Wwise) or Reaper. None of them are overly expensive. So with the exception of interactive VR (which requires game engines such as Unity or Unreal), I say work in the environment you’re comfortable with.

yes for sure it works !!!! and very well

but I am just looking into the future and where the market place will take immersive multimedia

and what tools are available for the audio engineer

like …..a commercial client comes to you and wants you to post a VR in 4 hours or less and make it come alive.

what can I use ……????

I don’t know right now ….

I would like to use the tools I have been using

perhaps SMPTE will bring all to the table and get some standard to get this into the bit-stream so we all can talk to each other

follow the money …..thats where we will end up

cheers

m

http://www.aes.org/conferences/2016/avar/

http://postperspective.com/arvr-audio-conference-piggy-backing-on-aes-show-in-fall%E2%80%A8/
