Guest contribution by Matthew Marteinsson, Audio Director at Klei Entertainment. Klei recently released Invisible, Inc.

Restrictions. Usually they’re a bad thing, something we fight against and work around. I certainly look back at the restrictions of old consoles with no fondness. But then you look at what The Beatles did with a 4-track (well, a couple of 4-tracks and some bouncing) and you start to see some magic in restrictions. These days, with (relatively) unlimited power in our tools, putting some restrictions on ourselves can be a good way to force ourselves into some creative solutions.

I think the first and easiest would be to limit your track counts. Whether you’re writing music or making SFX, limit how many tracks are in your session and try not to go over that number. When making SFX I mostly stick to between 5 and 8 tracks. Once I’m up around 8, I start to go back over my layers and usually find things that are no longer effective or are being masked and not heard. Time to cut those things out. I also find a track limit speeds me up, as I no longer feel the need to keep adding. To paraphrase Doug Martsch, maybe you’re just layering things to hide things.

One of the areas I always struggle with when doing SFX is HUD and UI sounds, and putting my own restrictions on it really helps me get what I want in that area. I’m the kind of person that obsesses over building a cohesive, unified HUD/UI soundscape. (That, VO processing chains and what limiter to use on a game.) I really aim for HUD/UI SFX to connect with the tone and feeling of a game and fit into the story being told. For me a click is not just a click. So with everything wide open, I put a box around things to get all of that into the space I think fits. When a game starts out I brainstorm with the designers what the look and feel of the game is going to be and where we’re headed with the story and tone. From there I start building a small source library that I’ll build all my HUD/UI sounds from. I’ll pull from libraries, record objects and record synths from all over the place, be they virtual, analog, modular or iPad based. I just gather up anything I feel can be turned into the feeling I’m looking for. Then for the rest of the project I only pull from that source folder anytime I need a HUD or UI sound. This helps all the sounds fit the world I’m building and fit together.

When I’m gathering a game’s HUD/UI source library together I’ll start out by making a DAW session for just that. If I’m going to pull from libraries I’ll pull all the sounds I think fit into that session so they’re all in one place and I’m not tempted by other sounds in the library. But mostly I gather my own sounds. This might be recording new sounds into that session or capturing synths. Soft synths are a great tool, but I prefer hardware or external soft synths for this task. By external soft synths I mean things like iPad synths. I like to put a track in record and just mess around, recording everything. Using external synths also lets me get my guitar pedals involved, which can help pre-process and create more unique sounds. Anything that allows for tweaking while I’m recording is welcome. The more hands-on things are at this stage, the more likely happy accidents will happen. Creating this source isn’t about being perfect. It’s about giving you a small, unique set of sounds to build from. You don’t want your palette to be every colour in the Pantone book. You want to restrict it to focus you.

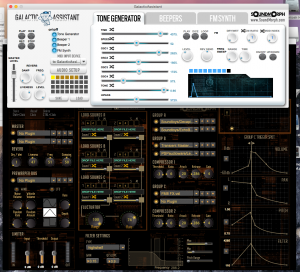

So using Invisible, Inc. as an example, I started out thinking about what the feeling of the game was and how the HUD/UI sounds should relate to that. Early on it had a more noir edge to it, so I was looking for sounds rooted in the past that I could process to take them into the future. I felt a combination of old analog and 8-bit source would lead me to the feel I wanted. I recorded a bunch of tones from a Korg Monotron and an Electro-Harmonix RTG guitar pedal for my analog sounds. For more 8-bit source I recorded a bunch of random elements out of Soundmorph’s Galactic Assistant. That turned into the pool of sounds I would draw from for the rest of the project.

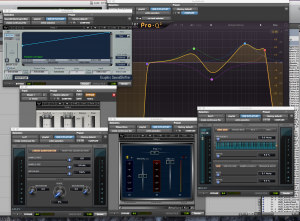

The other big restriction I’ll put on myself is that only certain plugins can be used for creating the sounds of a certain area of a game. For the Mainframe mode in Invisible, Inc. I wanted everything to sound like you were inside a computer. I decided that bit crushing and ring modulation fit the bill. Those plugins were then used only for sounds in that area of the game. That restriction unified the sounds of that area and separated them from the rest of the game’s soundscape. So while I generally pulled from the same pool for UI sounds, the plugin choices allowed them to sound unique to that area but related to the rest of the game.
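For readers who haven’t met those two effects, here is a rough sketch of what bit crushing and ring modulation do to a signal. This is not the plugin chain used on Invisible, Inc.; the function names, the 30 Hz carrier, the 4-bit depth and the hold length are all made-up values chosen purely for illustration.

```python
import math

def ring_modulate(samples, carrier_hz, sample_rate):
    """Ring modulation: multiply the signal by a sine-wave carrier."""
    return [
        s * math.sin(2.0 * math.pi * carrier_hz * n / sample_rate)
        for n, s in enumerate(samples)
    ]

def bit_crush(samples, bits, hold=1):
    """Bit crushing: quantize samples in [-1, 1] to a coarse grid and
    hold each value for `hold` samples to mimic a lower sample rate."""
    levels = 2 ** (bits - 1)  # quantization steps per polarity
    out = []
    held = 0.0
    for n, s in enumerate(samples):
        if n % hold == 0:
            held = round(s * levels) / levels
        out.append(held)
    return out

# Hypothetical source: a 0.1 second, 440 Hz test tone (illustrative only).
sample_rate = 48000
tone = [
    math.sin(2.0 * math.pi * 440.0 * n / sample_rate)
    for n in range(sample_rate // 10)
]

# Apply the same two effects to the source, in the same order.
processed = bit_crush(ring_modulate(tone, 30.0, sample_rate), bits=4, hold=6)
```

Running every sound for one area through the same small chain like this is what gives that area a shared character, even when the source material comes from the same pool as the rest of the game.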

Of course there are lots more self-imposed restrictions you can put on yourself too. Time constraints. Implementation constraints. All kinds of stuff. The important thing is to use them to spur greater creativity from yourself. Take the restrictions and build something greater because of them.

Great article Matt! I am definitely feeling inspired, and looking forward to trying some of these workflow tips!

Great article! I think a lot of this advice is fantastic and not something I’d heard before. Love the new sample folder for each project and also keeping effects consistent. I also use external soft synths on iPad and am finding it liberating to just record from there instead of dealing with midi. Thanks for the tips and would love to hear more about your other limitations, advice for sampling yourself or how you use iPads in your setup. Thanks so much!!

Great article!

Super excited about my newest project; I will take your tips for a spin and see how they go with my workflow:)

Thanks!