We’ve had some inspiring, insightful and practical thoughts about procedural audio in the past few interviews. We’ve not only looked at what can ideally be possible with it, but also what its limitations can be. For those of you who keep up with game audio news, AudioGaming will not be new to you. The company is a few years old and has the sole purpose of developing next-generation audio tools in the realm of procedural audio. After spending these past few years researching and developing their infrastructure, they have just released AudioWeather – a procedural wind and rain solution available both as VSTs/AUs and within FMOD Studio.

The interview below is a transcription of a conversation I had with Amaury LaBurthe, the founder and CEO of AudioGaming. What was immediately apparent through our conversation was that they are a group of highly driven and talented people who want to empower sound designers with better workflows and tools, while being very realistic and open about what they do.

For those of you who are interested in trying out their VSTs/AUs, scroll down to the end. They were kind enough to give us all a discount code!

DS: Let’s start this off with a background of AudioGaming. What led you to form the company?

Amaury: We started the company in 2009. At that time I was still working for Sony R&D (Sony Computer Science Laboratory, a small Sony lab in Paris dedicated to research), which was mainly DSP and interactive audio research. Before that I was working for Ubisoft and before that I was working for Sony in research, so I had experience on both sides – research at Sony and creative/sound design when I switched to Ubisoft, where I was audio lead on several games. At some point when I was working for Ubisoft I thought it was a shame that I needed tools that didn’t exist. Because of my background in R&D I knew some of it was possible. In 2009 we mainly thought about the project as I was still working for my former lab (Sony) through AudioGaming. We started full time at the end of 2009. We also won two national contests for innovation in France where we got grants in 2009 and 2010 that helped us a lot in starting the company.

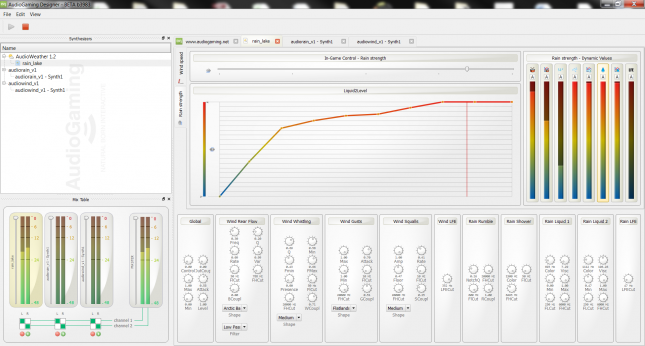

We spent almost two years working on the technology and the R&D side. We finished with a pipeline that allows us to create efficient interactive audio tools. This led us to create our first plugin – AudioWeather. It is a real-time synthesiser dedicated to the generation of wind and rain sounds. There are no samples and you can control most of the parameters of the sound that is generated because it is a model. This is becoming available through FMOD (and as a VST), so it runs on PC, Mac, PS3 and Xbox. Before that we created AudioGesture as a first large-scale test of our pipeline on consoles. It generates sounds in real time based on the movements made with a Wiimote.
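To make the idea of a sample-free, parameter-driven wind model concrete, here is a minimal Python sketch. It is not AudioGaming’s algorithm, which the interview does not describe. It simply assumes that wind can be roughly approximated by low-pass filtered white noise, and the `intensity` control, the cutoff range and the sample rate are all hypothetical choices made for illustration.

```python
import math
import random

SAMPLE_RATE = 44100  # assumed output rate in Hz


def one_pole_coefficient(cutoff_hz, sample_rate=SAMPLE_RATE):
    """Smoothing coefficient of a one-pole low-pass filter for a given cutoff."""
    return 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)


def synth_wind(duration_s, intensity, seed=0):
    """Return wind-like samples in [-1, 1].

    `intensity` is a hypothetical 0..1 control: no recorded samples are used,
    only white noise shaped by a filter whose settings follow the control.
    """
    rng = random.Random(seed)
    cutoff_hz = 200.0 + 1800.0 * intensity  # assumed: stronger wind sounds brighter
    gain = intensity                        # assumed: stronger wind is louder
    a = one_pole_coefficient(cutoff_hz)
    state = 0.0
    samples = []
    for _ in range(int(duration_s * SAMPLE_RATE)):
        noise = rng.uniform(-1.0, 1.0)
        state += a * (noise - state)        # low-pass filter the noise
        samples.append(gain * state)
    return samples
```

Calling `synth_wind(2.0, 0.7)` would return two seconds of samples that could be written to a WAV file. A real model would add many more layers, but the principle of controlling every aspect of the sound through parameters is the same.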

DS: What is your approach? Do you follow the age old cyclic method of recording -> analysing -> synthesising -> listening?

Amaury: There is definitely a lot of recording, analysis and synthesising, and as you said there is a circle where we always start from the recordings. But we also start from the usage. What do you want to do and how do you want to interact with it? This is very important because you can take the sampling approach – like sampling a piano, where you would sample all the keys with different velocities and end up with ten gigabytes of sounds and a great sounding thing. Or you can look at it from the interaction perspective and think about what you want to do with it. For the wind and rain we decided we would like something that is dynamic and interactive by using a simple slider – from no wind and rain to full wind and rain. Then we asked ourselves what kind of parameters we would like to control dynamically and what kind of parameters we would like to control statically.

Once we had a model of what we’d like to have, we switched to the analysis phase, where we recorded a lot of wind and analysed the recordings. We also approached the French meteorology department and asked them if they had recordings of wind. They didn’t have audio recordings but what was good was that they had lots of data about the way it behaves. They gave us precise recordings of the evolution of the speed of the wind amongst other things. We analysed this statistical data and we modelled not only the sound but also the statistical evolution of the wind. How does it evolve over time? What is it that makes it sound like the wind and not the sea? The sea has a back and forth movement while the wind has a very precise statistical behaviour with various characteristics like gusts and squalls. We analysed all of that data to get behaviour models that would help differentiate the behaviour between the sea and the wind. When you are dealing with interactive behaviour it’s not only the sound itself but how it behaves over time.
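As a rough illustration of modelling how wind evolves over time rather than only how it sounds, the Python sketch below generates a wind speed curve from a simple random process with occasional gusts. The interview does not say which statistical model was fitted to the meteorological data, so everything here (the mean-reverting process, the function name and all parameter values) is an assumption chosen only to show the idea.

```python
import random


def wind_speed_curve(steps, mean_speed=8.0, reversion=0.05, turbulence=0.6,
                     gust_probability=0.01, gust_boost=6.0, gust_decay=0.9,
                     seed=0):
    """Return a list of non-negative wind speeds, one per time step.

    Hypothetical model: the speed drifts randomly but is pulled back toward
    `mean_speed`, and gusts appear at random and then die away.
    """
    rng = random.Random(seed)
    speed = mean_speed
    gust = 0.0
    curve = []
    for _ in range(steps):
        # Slow fluctuation: pull toward the mean plus random turbulence.
        speed += reversion * (mean_speed - speed) + turbulence * rng.gauss(0.0, 1.0)
        # Gusts: rare sudden boosts that decay over the following steps.
        if rng.random() < gust_probability:
            gust += gust_boost
        gust *= gust_decay
        curve.append(max(0.0, speed + gust))
    return curve
```

A curve like this could then drive the loudness and brightness of a synthesised wind, which is one way to separate the behaviour over time from the sound itself.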

DS: As has been discussed previously, with procedural techniques it seems like you have limitless possibilities and you have to set up limitations for yourself to understand what you really want.

Amaury: It’s a bit like the ocean of sound when the first synthesisers appeared. You have so many possibilities that it’s a bit scary (laughs). You can do anything and in the end you do nothing. It is like Max/MSP or Pure Data: if you don’t set any goal and very clear limitations you probably won’t end up anywhere. That is why we usually start from the sound, behaviour and interaction. That gives a very precise idea of what we want to achieve. If you don’t do that it is very hard and we have learnt that the hard way. With AudioGesture for example, we had no clear outcome in mind so we spent a lot of time working on it but it did not end up as something very creative. We had a lot of different models but they were not very easy to use. A part of the difficulty with procedural audio is that because a lot of people don’t know about it, they don’t project any usage and you have to find the usage for them. But you might be wrong. You have to prove it is useful in scenarios that people don’t have in mind. It is tricky. This is why we went from broad things like gesture to more focussed things like wind and rain and now we are even more focussed and working on the footstep machine where the usage is very clear and obvious.

DS: A lot of questions have built up about procedural audio over time – can it sound good? Will it sound good? Does it take up too much CPU? Have you faced any of these questions?

Amaury: Not really! (laughs) But it’s very hard. We are being judged in comparison with images right now. Nobody is saying that image synthesis quality is bad and we are going to use pictures or videos instead. Nobody asks these questions. When the first games started to use image synthesis the quality was poor. But nobody ever questioned that. On the other hand, when audio samples became usable in games and when CD quality audio arrived with the PlayStation, there was no reason for people to care about synthesis anymore. So it has been hard for audio synthesis to compete with CD audio quality when we should have evolved just like images. It would have sounded crap in the beginning and then would have sounded better and better. People would then never question procedural audio, it would be natural. But the process did not happen. And now people question it, which is very valid.

We have tools like FMOD and Wwise or internal engines that are becoming very good at making things that are static by nature very dynamic. We have established workflows with a very good amount of control over the final audio experience you propose. The tools and the pipeline exist, so why use procedural audio? There are several arguments that push for procedural audio. If you have big open worlds it is a nightmare to sound everything. When you have gestures it is difficult to sound them in real time. But do you really want to sound gestures in real time? Sounding animations on big games is a big thing. What if you could sound anything that is moving almost automatically but not lose the sound design control? It is just that part of the hard work will be done automatically and you will control the generation of the sound and its adaptation to the movements. I think the good way to push it now is not in terms of opposition but in terms of complementarity. It can bring new things to existing pipelines and it can save time. But it won’t replace several parts of the work. If you take the example of recording, even with procedural audio you always need to record things, either to analyse them or to use them directly in the synthesis because some synthesis techniques use samples. It’s just new tools that break some of the limitations. I agree with Nicolas Fournel when he says it’s not meant to replace anything or break what exists already. I think it’s the correct way to push it. But it’s true, it’s quite hard and people expect a perfect result right from the start.

If you think about music, at some point we had sound banks made of real waves and used through MIDI partitions. It’s crazy the flexibility such a pipeline gives. You can write hours of music in MIDI with many layering possibilities, on-the-fly alteration of the partition, slowing or speeding up music with no CPU cost or bad sound quality. Your whole set of music with its variations, its special events or alterations, etc. would be stored in a few kilobytes. You can interrupt the music very gracefully because you know the intrinsic property of the composition, etc. But rather than that, because it sounded poorer than a real recording at that time, we went for the full audio route, which is insanely harder to make dynamic in real time. But every game is doing image rendering using textures (sound bank?) with real-time processing. And now companies like Allegorithmic propose procedural textures, which seems very natural. Hopefully the same can now happen for audio. We’re only 20 or 30 years late.

Video: http://www.youtube.com/watch?v=70j_Fp5l4bo&feature=player_embedded

DS: Of course. It is also not something to replace everything we know about sound.

Amaury: Our goal is really to create a community and catalyse different approaches where interactive/real-time audio is at the heart of it. You can get away from the timeline and think more of the interaction and become more of a crafter, where your experience of how a sound behaves and how you control it makes it evolve and fit with what is happening on the screen, rather than browsing through thousands of files and finding one that fits. It can’t replace people. It just gives us more ways to create and manipulate sound.

Apart from that, as Nicolas Fournel says in a very cool and precise interview on DS, we really need to think about the business model and link that with what sound designers need and want. If we take the example of AudioWeather, the original intent is really not to provide a closed model with only a few exposed parameters. We don’t “own” the truth of what wind is and how it should sound. But we’re not in the position right now where we can create an ultimate sound design tool, as easy to manipulate as a simple VST but as powerful and limitless as Pd. We have to start somewhere. What we’ve tried with AudioWeather and AudioWind/AudioRain is to decompose the model into separate layers and give access to meaningful parameters. This adds a layer of difficulty because you need to map between meaningful high-level parameters and low-level synthesis parameters, but we hope that can somehow circumvent the limitations that can arise from having a pre-determined model, as the variety of sounds you can get is pretty insane. We’ve added a randomize button, by the way, which sets random numbers on all the controls. This is the most fun tool I’ve been using recently! It’s crazy to browse randomly through the “ocean of sounds” it can create and I had a small Schaefferian experience for a few minutes.

The good thing is that we’ll hopefully be able to build from that and propose tools that are more and more open. And we would be tremendously happy to get all sorts of feedback to help create tools that are more linked to everyday creative needs and can help get rid of some limitations.
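The mapping from a few meaningful controls to low-level synthesis parameters, and the randomize button, can be sketched as follows in Python. This is only an illustration under stated assumptions: the parameter names, their ranges and the linear mapping are hypothetical and are not taken from AudioWeather.

```python
import random

# Hypothetical low-level synthesis parameters with (min, max) ranges.
PARAM_RANGES = {
    "noise_gain": (0.0, 1.0),
    "filter_cutoff_hz": (200.0, 4000.0),
    "gust_rate_hz": (0.05, 1.0),
}


def map_intensity(intensity):
    """Map one high-level 0..1 control onto every low-level parameter.

    A linear mapping is assumed here; a real tool would likely use curves
    tuned by ear for each parameter.
    """
    t = min(1.0, max(0.0, intensity))  # clamp the control to 0..1
    return {name: lo + t * (hi - lo) for name, (lo, hi) in PARAM_RANGES.items()}


def randomize(rng=random):
    """Set every low-level parameter to a random value inside its range."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in PARAM_RANGES.items()}
```

For example, `map_intensity(0.5)` returns a setting halfway through each range, while `randomize()` picks an arbitrary point in the parameter space, which is the kind of browsing described above.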

DS: Introducing such solutions within existing tools like FMOD and even the release of VSTs seems like a good method to break down barriers. If it exists within your everyday tools, you might just try it out.

Amaury: Yeah, that’s what we hope. The guys at FMOD are really great. They have been very supportive and liked the idea. It is really great that we are able to work with them. Just like you said, if something exists from the start you will want to test it. If it’s behaving in a cool way, why not use it? We think it’s a good first approach. You can use it directly in the game and see if it fits or not. Our implementation in FMOD Studio is rather limited for now, but hopefully we’ll release an updated version where you’ll have access to a lot of control parameters. Also, in France we are participating in the video game master’s programme at a French university, where we explain new ways of using and producing audio so that people will broaden their view of interactive audio and will hopefully, over time, begin to consider this as another tool that can do things that other tools can’t do, and conversely.

DS: Going back to your tools and workflow, do you still use tools like Pd or Max/MSP to prototype ideas or do you have complete control over the tools you use?

Amaury: Both actually. We use Pd a lot. It’s there, it’s working and it’s fast. You can put ideas together quickly. It’s a good tool from that perspective. We also spent quite some time building our own infrastructure, which is a lot less friendly than Pd. It’s more of a coding infrastructure where we’ve got optimised tools and we can take into account the specificities of the platforms and everything. We have the framework to be efficient on the different hardware that we want to support. We need to have both because we need to be able to prototype and test ideas, but then when it comes to the hard part you need to be able to control every bit and every sample. We need to have our own infrastructure.

DS: The VSTs are due to release soon. What else are you working on?

Amaury: The AudioWind and AudioRain VSTs should be released by the end of this month. We are finishing the beta so it should be there soon. We are writing manuals and debugging a few things. [Ed: This was at the time of the interview. The VSTs were released on 12 June] It is also a nice complement to the video game version because that way people can try it in their DAW and if they find some usefulness in that environment it can become more natural to have that in game. The in-game version comes with the additional benefit of being able to go from zero to a hundred percent wind or rain with a single control. In the VST you don’t have the same interaction – you can control all parameters, but to get from zero to a hundred percent you will need to automate some of the things.

I think it’s natural to have both options. You won’t be using them in the same context but if people are really used to having a VST they can use, then it is easier to think about its possibilities in a game. It is more natural this way. Other than us, most of the companies entering the video game market are coming from the post production world – McDSP, iZotope... All of them are big players in the post production world but they are newcomers in the video game industry. We, on the other hand, are coming from the video game industry, so it was natural for us to start there.

We are also working a lot on the footsteps engine. We’ve just finished a coproduction called Chicken Doom with a French studio (called DogBox). It’s a smaller game for mobile and tablets. We are using Unity to implement the procedural content and it’s working really well.

DS: Anything interesting to share about the future, or is all top secret?

Amaury: [laughs] We don’t have too many secrets. We are finishing the VSTs, we have just finished Chicken Doom and we are going to work on a game called Holy Shield, again by DogBox (crazily talented guys giving real importance to sound) where we will be implementing procedural audio with Unity. We are working a lot on the footstep machine. Apart from that we are still working on AudioGesture with the Kinect and PlayStation Move and different devices. We also have several prototypes, but they are only prototypes and there is not much to show right now. We also have a musical interaction game prototype which we’d like to push, but it’s not something we can do on our own. You can control the composition of the music depending on how well you play the game. Maybe this can emerge at some point (anybody open for a co-production with a Kickstarter? ;)). That’s pretty much it.

DS: Any plans of opening up such solutions to mobile devices?

Amaury: We’ve done an integration of AudioWeather in Unity. It’s working and we’ve got good performance, so it makes it available to most mobile platforms, but because it is a .dll and not directly in C# it is not available in native clients. It can be used on all the other platforms though. We will probably release a version of AudioWeather in Unity’s asset store. Our pipeline enables that so it’s more a matter of needs for now. What we hope is that mobile games are going to evolve and we will eventually find more AAA games on mobile platforms. It’s going to happen and the needs are going to evolve so it probably will be a natural evolution for us too. This is also why we are going to work on Holy Shield, because the goal is to have a AAA-like production for mobile and tablets and we will be able to showcase the procedural stuff you can do in Unity.

You can watch videos and download trials/plugins here.

If you access the store, you can use this code to get both plugins at the price of one: DSEXTRABUNDLEPRICE

A plugin for wind sounds? Maybe this has some advanced features but with 3 – 4 instances of any subtractive (VA) synths I can make any kind of wind as well. NI Absynth can do this very well.