Guest Contribution by: Anastasia Devana

With the recent rise of virtual reality (VR), there is a growing interest in fully spatialized 3D audio. Several plugins are available for implementing 3D audio, and choosing between them can be difficult, especially if you’re tackling this technology for the first time.

While it may seem that all 3D audio plugins do the same thing, there are several factors to consider when choosing the right tool for your project, such as ease of use, performance, sound quality, and level of customization.

The goal of this article is to provide an objective and thorough overview of five leading 3D audio plugins: 3Dception from Two Big Ears, AstoundSound RTI from GenAudio, Phonon 3D from Impulsonic, RealSpace 3D from VisiSonics, and Oculus Audio SDK. I’ll cover their features, compatibility, and pricing, as well as any unique aspects of each plugin. I’ll also report on my personal experience of integrating them into a Unity project, and provide a downloadable interactive demo app that lets you audition the plugins, along with video walkthroughs and performance test results.

This resource is targeted towards sound designers, audio implementation specialists, developers, and anyone interested in using 3D audio in their project, and I hope that people find it helpful!

Why 3D audio?

Unless you’ve been living under a rock, you probably know that VR is on fire right now. There is a massive groundswell of enthusiasm, and several big companies have entered the arena with hardware announcements. However, there is an understanding that the primary catalyst for bringing VR into our living rooms will be high-quality, compelling content.

Arguably, the whole point of VR is immersion – that feeling of being there. In real life we get input from five traditional senses, but in VR we are (so far) limited to two: sight and sound. It seems rather obvious that it would be near impossible to achieve that coveted immersion with visuals alone, while neglecting the other 50% of available sensory input. Yet currently this is exactly the case in most VR content (surprise!).

The good news is that developers are slowly starting to realize the importance of sound. So there is a need and desire to make it happen. The question is how.

If I started from the basics of 3D audio, this article would be about five times as long. So I’m going to skip right over that, and instead point you to some helpful information to get you started, including this excellent talk by Brian Hook, Audio Engineering Lead at Oculus, and this wiki page.

Skeletons vs 3D Audio

This overview would not be fair or complete if I didn’t personally put each plugin to the test and compare the results. While most developers do provide their own demos, those are not exactly “apples to apples”, since they are all using different scenes and sounds. This is how the idea of a demo app which offered a common experience for comparison was born.

I decided to use Unity 4 as my engine for several reasons:

- All the plugins in question have Unity integration

- It would be the most straightforward implementation and a very likely use case

- The Unity Asset Store has a wealth of free assets that I could use to put my scene together

Side note: in Unity 4 all of these plugins require a Unity Pro license. But in Unity 5 (which is now officially out), everything in the demo can be done with the free license. Now is also a good time to thank Unity Technologies for supplying a temporary Pro license for the purpose of this article.

The idea was to make the app accessible to as many people as possible, so they could audition different plugins in a VR environment, and make their own conclusions about the sound. I decided to use Cardboard SDK, since Google Cardboard is currently the most accessible and affordable way to experience VR.

The result of this little experiment is Skeletons vs 3D audio VR – an interactive VR experience available on the Google Play Store. So if you have an Android phone, you can go ahead and download the app here. Even if you don’t have an actual Cardboard viewer to experience VR, you can still hear 3D audio as you move the phone around.

And if you don’t have an Android device, I made playthrough videos of each plugin in action.

We’re not so different, you and I

Before I get into details about each plugin, and in order to avoid repeating myself, let me briefly cover what they all have in common.

3D audio spatialization

This is the basic premise of spatializing the sound, or placing it in space with azimuth (left / right / front / back) and elevation (up / down) cues. All the reviewed plugins provide this functionality.
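To make azimuth and elevation concrete, here is a minimal sketch in Python (not tied to any of the plugins, which are driven from Unity) that turns a source position into the angles a spatializer works with. It assumes Unity-style axes (+x right, +y up, +z forward) and a listener that only rotates in yaw; the function name `direction_cues` is hypothetical.

```python
import math


def direction_cues(listener_pos, listener_yaw_deg, source_pos):
    """Return (azimuth_deg, elevation_deg, distance) of a source relative to a listener.

    Assumes +x right, +y up, +z forward, and a yaw angle measured clockwise
    from +z when viewed from above. Azimuth 0 is straight ahead, +90 is to the
    right, -90 to the left, and -180 directly behind. Elevation +90 is overhead.
    """
    dx = source_pos[0] - listener_pos[0]
    dy = source_pos[1] - listener_pos[1]
    dz = source_pos[2] - listener_pos[2]

    horizontal = math.hypot(dx, dz)  # distance in the ground plane
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)

    world_azimuth = math.degrees(math.atan2(dx, dz))
    # Remove the head rotation and wrap the result into [-180, 180)
    azimuth = (world_azimuth - listener_yaw_deg + 180.0) % 360.0 - 180.0
    elevation = math.degrees(math.atan2(dy, horizontal))
    return azimuth, elevation, distance
```

For example, a source one unit to the right of a listener facing +z comes out at azimuth 90, elevation 0, distance 1; turn the listener's head 90 degrees to the right and the same source moves to azimuth 0.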

Adequate documentation

Some documentation resources are better organized than others, but in all cases they provide all the information to get the job done.

Great support and passion to improve the product

I have met several of these developers in person, and I’ve communicated with all of them online. Everyone has been very honest and forthcoming with information about their plugin (including limitations), and very open to critique and suggestions. A few bugs that I encountered were fixed in a matter of hours. Also, in cases where a full-featured plugin wasn’t available as a free download (GenAudio, Two Big Ears, and VisiSonics), the developer provided me with a temporary full evaluation license for the purpose of writing this article.

Now let’s review each plugin in detail.

3Dception (Two Big Ears)

Version tested: 1.0.0

[ed: Full disclosure: Contributing Editor Varun Nair is a co-Founder of Two Big Ears. Varun did not have any involvement in the article beyond what the representatives of the other tools had.]

Two Big Ears had their plugin 3Dception available as beta for some time, and they recently announced the first stable release.

From my experience speaking and working with the team, I get the impression that they take 3D audio very seriously, and they are determined to keep pushing their product forward.

3Dception offers a wide range of features and customizations, and the team has put a lot of emphasis on performance and workflow. They also have been active writing and speaking about 3D audio, and have a blog with some interesting resources.

Unique Features

- Room modeling with spatialized reflections. You can create a room object, match it in position and size to your actual in-game room, and define its reflective properties. A couple of other plugins in this list provide room modeling, but spatialized reflections are currently unique to 3Dception. This means that the listener can move freely inside the room, and the reflections reaching the listener will change accordingly.

- Delayed time of flight. Direct sound is delayed based on distance, which enhances distance cues.

- Many features and adjustments exposed in Unity Editor

- Support for ambisonics. I will not get into ambisonics, since it’s a bit outside the scope of this article, but I will say that I’ve played around with ambisonic recordings, and if done right, they can produce stunning results. So support for ambisonic playback is a welcome feature.

- Environment simulation (limited). Currently there are options to change the world scale and speed of sound.

- Extensive API. Anything that can be tweaked in Unity Editor is also available through the API.
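Room modeling with reflections and delayed time of flight can both be illustrated with the classic image-source method for a rectangular (shoebox) room. The sketch below is a generic textbook technique, not 3Dception's actual implementation: each wall mirrors the source to create an "image" source, and every path is delayed by its length divided by the speed of sound. The `world_scale` argument (meters per world unit) and all names are assumptions standing in for the plugin's world scale and speed of sound options.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second in air at about 20 degrees C


def first_order_paths(room_min, room_max, source, listener,
                      speed_of_sound=SPEED_OF_SOUND, world_scale=1.0):
    """Return (label, delay_s, length_m) for the direct path and six wall reflections.

    room_min / room_max are opposite corners of an axis-aligned room,
    all positions are in world units, and world_scale converts units to meters.
    """
    paths = []

    def add_path(label, emitter):
        length_m = math.dist(emitter, listener) * world_scale
        paths.append((label, length_m / speed_of_sound, length_m))

    add_path("direct", source)
    for axis, name in enumerate("xyz"):
        for side, wall in (("min", room_min[axis]), ("max", room_max[axis])):
            image = list(source)
            image[axis] = 2.0 * wall - source[axis]  # mirror the source across the wall
            add_path(f"{name}-{side}", tuple(image))

    # Earliest arrival first; inside the room a reflection can never beat the direct path
    return sorted(paths, key=lambda p: p[1])
```

Because the image positions are measured against wherever the listener stands, recomputing these paths as the listener moves is what makes the reflections change with position.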

Upcoming Features

- Geometry-based reflection modeling (the plugin will generate reflections based on actual in-engine objects)

- Occlusion and obstruction

- More advanced environmental simulation through parameters such as temperature and humidity

There are some playable demos available, and here is a video demo of some upcoming features in action.

Implementation

I highly recommend that you read the documentation before implementing 3Dception into your project. There is a required “setup” step in order to get everything working properly. It’s handled by a drop-down menu option in Unity, which creates the necessary objects in the scene, and makes some additions to the script execution order.

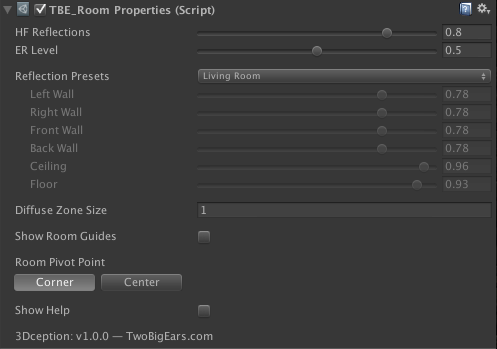

After the initial setup you will mostly be dealing with two components: TBE_Room and TBE_Source.

TBE_Room takes care of the room modeling feature. You drop the provided prefab in your scene and match it in size and position to your in-game room. Until we get efficient room modeling based on in-game mesh geometry, I feel like this is currently the most intuitive solution.

As you can see in the screenshot, there are several options to modify reflective properties of the environment. There are a few Reflection Presets, or you can tweak the individual reflection values manually. Show Room Guides will make your TBE_Room visible in the scene at all times, and Show Help will add some helpful instructions to each field.

As a side note, TBE_Room provides only some reflections in order to help spatialize the sound. The documentation recommends combining TBE_Room with a standard Unity Reverb Zone for better results. I did not add a Unity Reverb Zone in this specific test.

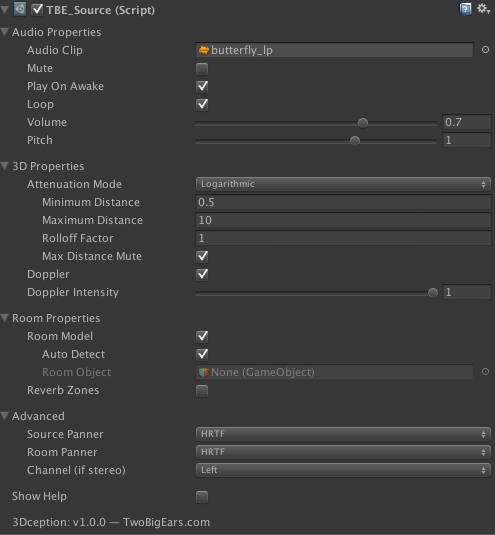

Once your room is ready to go, you add the TBE_Source component to your sound emitter, or if you already have a Unity AudioSource component in place, you can use TBE_Filter, which will add spatialization to your existing 3D sound. This option is handy if you’re converting an existing project with lots of sounds.

TBE_Source component provides most of the standard Unity AudioSource parameters, as well as some additional settings, such as Rolloff Factor (how fast the sound will drop off), and Max Distance Mute.
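The exact attenuation curve behind TBE_Source's Rolloff Factor isn't specified here, so the sketch below borrows the widely used "inverse distance clamped" model from OpenAL to show what such a parameter typically does, plus a Max Distance Mute flag modeled on the setting described above. Treat the function and its parameter names as illustrative assumptions, not the plugin's API.

```python
def distance_gain(distance, min_distance=1.0, max_distance=500.0,
                  rolloff_factor=1.0, max_distance_mute=False):
    """Linear gain for a source at `distance`, using an inverse-distance-clamped curve.

    A higher rolloff_factor makes the level drop faster with distance.
    With max_distance_mute enabled, sources beyond max_distance are silenced
    instead of being held at the level they had at max_distance.
    """
    if max_distance_mute and distance > max_distance:
        return 0.0
    # Clamp so the gain never exceeds 1.0 up close and stops falling past max_distance
    d = min(max(distance, min_distance), max_distance)
    return min_distance / (min_distance + rolloff_factor * (d - min_distance))
```

With the defaults, a source 2 units away plays at half gain (about -6 dB); doubling the rolloff factor drops it to one third.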

In the Room Properties and Advanced sections, you have some options to disable more advanced spatialization features – useful for performance optimization.

Overall the implementation went smoothly, with no issues or crashes. The documentation is well-organized and clear, and aside from the first setup step, workflow is intuitive. I used a few API features, and they worked as expected.

Results

[youtube]https://www.youtube.com/watch?v=bRpgh4UbnBw[/youtube]

I feel that the results are very convincing. There is a certain clarity of sound, and reflections sound natural, even without the addition of a Unity Reverb Zone.

You can find performance test results, compatibility and pricing information at the end of the article.

AstoundSound (GenAudio)

Version tested: 1.1

AstoundSound has been around for some time now – according to this video, the technology patent was filed back in 2004. But with the recent interest in VR, it looks like the company has again become more active. For instance, they recently made the technology available as FMOD and Wwise plugins.

In addition to a real-time 3D audio plugin, GenAudio provides several other solutions for working with 3D sound.

There is the Expander (enhances pre-mixed tracks), Fold-down (maintains surround sound mixes over stereo), and 3D RTI (the real-time 3D audio solution which I’m going to focus on).

Unique Features

- Spatialization over multiple output configurations (headphones, stereo speakers, and surround setups). From my admittedly limited understanding, this is achieved by using a different approach to spatialization. According to GenAudio, they use the psychological sound localization model, in addition to the physics-based one. The ability to achieve sound spatialization over stereo speakers may not be relevant to VR specifically, but it’s a great plus if you’re supporting a game or experience across multiple platforms.

- Ability to crossfade between spatialized and non-spatialized sound. This feature requires a bit of scripting, but it could be very useful in certain situations.
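GenAudio's crossfade is set up through the plugin's own scripting, which isn't covered here. As a generic illustration of the idea, the sketch below blends a non-spatialized and a spatialized version of the same stereo signal with an equal-power curve, so perceived loudness stays roughly constant through the transition. The function name and the 0-to-1 `blend` convention are assumptions.

```python
import math


def equal_power_crossfade(plain, spatial, blend):
    """Blend two stereo frames given as (left, right) tuples.

    blend = 0.0 returns the plain (non-spatialized) frame, blend = 1.0 the
    spatialized one. The cos/sin gains satisfy g_plain**2 + g_spatial**2 = 1,
    which avoids the loudness dip a linear crossfade has at the midpoint.
    """
    blend = min(max(blend, 0.0), 1.0)
    g_plain = math.cos(blend * math.pi / 2.0)
    g_spatial = math.sin(blend * math.pi / 2.0)
    return tuple(g_plain * p + g_spatial * s for p, s in zip(plain, spatial))
```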

Upcoming Features

- Addition of 3D elevation cues to surround-sound setups

Implementation

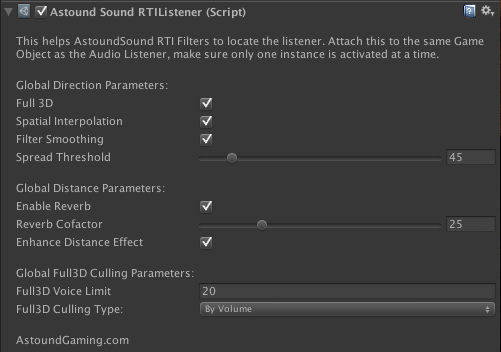

The plugin consists of two components: AstoundSound RTI Listener (add it to your main listener in the scene), and AstoundSound RTI Filter (add it to the sound emitter with an existing Unity AudioSource component).

The AstoundSound RTI Listener has a few handy settings that let you trade some spatialization quality for performance, such as Enable Reverb (can be used for distance cues in lieu of a Unity Reverb Zone) and Enhance Distance Effect (uses a higher-quality reverb algorithm).

Spread Threshold is a nice option – if a sound’s Spread parameter is higher than the threshold, it will bypass the plugin spatialization altogether. This will also respond to changes in Spread in real-time, which allows for some creative solutions, especially with ambient sound sources.

Culling settings are there to limit the number of voices to be fully spatialized, and decide how those voices will be selected (options are Volume and Distance). Any sound sources outside of the maximum number of sounds will fall back to the regular stereo panning method.
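The interplay of Spread Threshold and culling can be summarized as a routing decision made for every voice. Below is a minimal sketch under stated assumptions: it is not AstoundSound's code, the `Voice` fields and `route_voices` function are hypothetical, and priority follows Unity's AudioSource convention that a lower number means more important (128 is Unity's default).

```python
from dataclasses import dataclass


@dataclass
class Voice:
    name: str
    volume: float        # current linear gain, 0.0 to 1.0
    distance: float      # distance to the listener in world units
    spread: float        # Unity-style Spread in degrees, 0 to 360
    priority: int = 128  # lower value = more important


def route_voices(voices, max_spatialized, spread_threshold, cull_by="volume"):
    """Map each voice name to 'bypass', 'spatialized', or 'panned'.

    Voices whose spread exceeds spread_threshold skip 3D processing entirely.
    The rest are ranked by priority, then by loudness or proximity; only the
    top max_spatialized get full 3D processing, the others fall back to
    ordinary stereo panning.
    """
    routes = {}
    candidates = []
    for v in voices:
        if v.spread > spread_threshold:
            routes[v.name] = "bypass"
        else:
            candidates.append(v)

    if cull_by == "volume":
        def rank(v):
            return (v.priority, -v.volume)   # louder first
    elif cull_by == "distance":
        def rank(v):
            return (v.priority, v.distance)  # closer first
    else:
        raise ValueError(f"unknown cull_by: {cull_by!r}")

    for i, v in enumerate(sorted(candidates, key=rank)):
        routes[v.name] = "spatialized" if i < max_spatialized else "panned"
    return routes
```

Re-running this whenever volumes, distances, or Spread values change mirrors the real-time behavior described above, where raising a source's Spread past the threshold moves it out of the 3D path.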

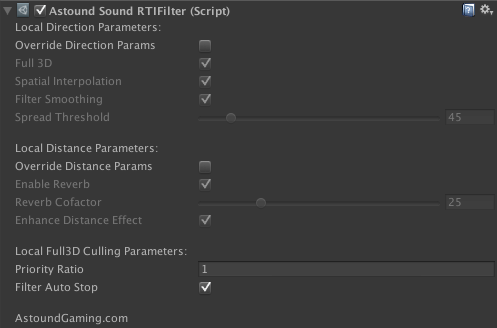

In the AstoundSound RTI Filter component you can override global settings on a per-sound basis, as well as set priority for culling. Other 3D sound settings (such as min and max distance) are set on the Unity AudioSource object.

Be sure to read the documentation regarding the Filter Audio Stop checkbox, especially if you are triggering sounds via script. Basically, if you’re playing any sounds via the PlayOneShot method, this should be unchecked (this relates to a limitation in the Unity API).

Overall, the implementation was smooth and fast, and documentation was clear and to the point. One suggestion for improvement would be exposing some APIs for controlling settings in real-time.

Results

[youtube]https://www.youtube.com/watch?v=dCC5rX8FUT4[/youtube]

I think that the results are quite convincing. The spatialization is very good, and the overall sound has a clarity to it. The reverb sounds notably different from 3Dception’s, but I still find it convincing. Also, I did get the effect over stereo speakers, albeit not as clearly as over headphones.

You can find performance test results, compatibility and pricing information at the end of the article.

Oculus Audio SDK

Version tested: 0.9.2 (alpha)

Oculus recently took note of the current lack of proper positional audio in VR content, and have been making a big push to change the situation. The first step towards that was licensing the RealSpace 3D technology for their Crescent Bay prototype.

And just recently at GDC 2015 they’ve taken it a step further and released their own 3D audio plugin, Oculus Audio SDK (currently in alpha), based on the same licensed technology.

What sets this plugin apart is that it’s absolutely free to use, which is a pretty cool move from Oculus.

Since it is a free offering, there isn’t a fancy marketing website or a lot of demo videos. But there is a new Audio section in the Oculus Forum, and it’s a great place to ask questions and receive support. So far the forum has been active, and support has been very good.

Implementation

The implementation was mostly smooth with only one hiccup. My main dev machine still has Mountain Lion (OSX 10.8.5), and the Audio SDK refused to run on it, so I had to dust off my old laptop with a Yosemite install.

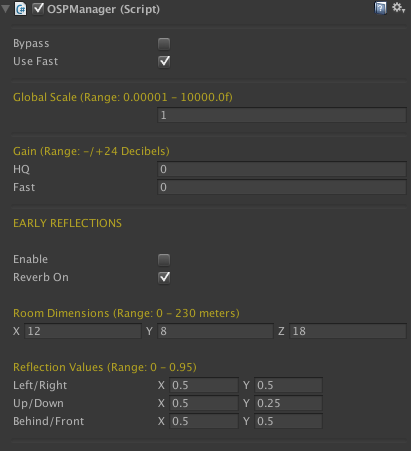

There are two main components in play: OSP Manager, which gets placed in the scene, and the OSP AudioSource, which gets added to a sound emitter with the Unity AudioSource component attached.

Let’s take a look at the OSP Manager. Oculus’ plugin offers two types of spatialization algorithms: HQ (high quality) and Fast, which doesn’t provide Early Reflections. The documentation strongly advises against using the HQ algorithm on mobile platforms, in order to minimize CPU usage. As you can see, I heeded their advice and checked Use Fast.

With the Gain setting, you can adjust the overall volume of the mix. As I noticed with all the reviewed plugins, spatialization tends to bring down the overall volume, so the ability to apply a blanket gain boost is a nice touch, and something that I would like to see in other plugins.
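For plugins without a global gain control, one workaround is to measure how much quieter a sound gets after spatialization and apply make-up gain. A rough sketch, assuming you can capture the same passage before and after processing as float samples in [-1, 1]; the helper names are hypothetical, and RMS is only a crude stand-in for perceived loudness.

```python
import math


def rms(samples):
    """Root-mean-square level of a non-empty list of float samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def makeup_gain_db(reference, processed):
    """Gain in dB that brings `processed` back to the RMS level of `reference`."""
    return 20.0 * math.log10(rms(reference) / rms(processed))


def apply_gain(samples, gain_db):
    """Scale samples by gain_db, hard-clipping at full scale to avoid overflow."""
    g = 10.0 ** (gain_db / 20.0)
    return [max(-1.0, min(1.0, s * g)) for s in samples]
```

A signal that comes out at half its original RMS level needs about +6 dB of make-up gain; the hard clip is only a blunt safeguard against boosting past full scale.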

One more thing to keep in mind is that the virtual room is currently tied to the main Listener, and will move and rotate with the Listener, so even when you move around, you are always in the center of the virtual room. My understanding is that an independent virtual room object is in the works.

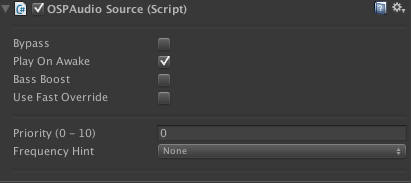

In the OSP AudioSource component, the Bass Boost setting is supposed to compensate for bass attenuation, but according to the documentation this feature is still unpolished (as is the priority feature). Also, you’re supposed to use Play on Awake in this component instead of the one on the Unity AudioSource, to prevent an audio hiccup at the start of a scene.

The current limitation is 32 sound sources using the Fast algorithm, and 8 sound sources using the HQ one. Here you have an option to mix and match: enable the global Use Fast setting, then override it on the 8 most important sound emitters by checking Use Fast Override.
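The "mix and match" strategy amounts to ranking sources by importance and handing out the limited slots in order. This sketch assumes the 8 HQ and 32 Fast limits are independent budgets and that anything beyond both is left unspatialized; both are assumptions to check against the Oculus documentation, and `assign_algorithms` is a hypothetical helper rather than part of the SDK.

```python
def assign_algorithms(sources_by_importance, hq_limit=8, fast_limit=32):
    """Return {source_name: 'HQ' | 'Fast' | 'unspatialized'}.

    sources_by_importance must be ordered most important first. The first
    hq_limit sources get the HQ algorithm, the next fast_limit get Fast, and
    any remaining sources exceed both budgets.
    """
    plan = {}
    for i, name in enumerate(sources_by_importance):
        if i < hq_limit:
            plan[name] = "HQ"
        elif i < hq_limit + fast_limit:
            plan[name] = "Fast"
        else:
            plan[name] = "unspatialized"
    return plan
```

Under these assumptions, the 15 sources in this article's demo scene would split into 8 HQ and 7 Fast voices.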

It’s not quite clear what the Frequency Hint dropdown does. I set my music and speech sounds to the Wide option, as suggested in the documentation, but didn’t notice a significant difference.

Overall, the implementation was pretty straightforward, and the documentation was quite clear, especially considering that this is an alpha release. It definitely felt like an alpha though, with quite a few things still “in progress”. I felt that the Unity Editor interface could be more intuitive.

Results

[youtube]https://www.youtube.com/watch?v=B3jTe_fvLIE[/youtube]

I am not quite sold on the spatialization with this plugin. I do get the azimuth cues (left / right / front / back), but not so much with elevation (up / down) or distance. Also the sound seems to shift unnaturally between left and right ears with a slight turn of head. Lastly, when compared to 3Dception and AstoundSound, the overall sound quality is noticeably degraded and has an odd mid-rangey character to it. It is especially evident with the piano sound.

You can find performance test results, compatibility and pricing information at the end of the article.

[UPDATE] A few people have asked how the HQ setting compares to the Fast one, so I tested it out as well. I enabled the global HQ setting, and checked Use Fast Override on all the sounds, except for the 8 most prominent ones. I also enabled Early Reflections. I thought that the sound quality was clearer with the HQ setting, but it didn’t make a significant difference for the elevation or distance cues.

[UPDATE, 29 April] The Audio SDK team at Oculus discovered an issue that was causing the lack of spatialization. In my project I was using the Unity API to Play a number of sounds via script, which completely bypassed the Oculus plugin. In other words, some sounds were spatialized by the plugin, and others were just using Unity stereo panning. This explains why I wasn’t hearing all the spatialization cues in my test scene.

Phonon 3D by Impulsonic

Version tested: 1.0.2

Impulsonic is a fairly recent newcomer to the field, and they are taking a unique approach to the 3D audio problem. They decided to break it into several smaller problems – HRTF-based spatialization, reverb, and occlusion/obstruction – and tackle each one independently. Hence, the three separate product offerings.

Phonon 3D is used to position sounds in space using HRTF algorithms, and it does no more and no less than that.

Phonon Reverb allows sound designers to create physically modeled reverb based on game geometry. The process involves tagging in-game geometry with acoustic materials and then “baking” the reverb, that is, calculating reverb tails at multiple locations in the scene. Baking optimizes performance by moving expensive calculations to an offline step. Then, in real time, the pre-calculated reverb is applied to sounds based on the listener’s position in the room.
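The bake-then-look-up workflow can be sketched in a few lines. This is a generic illustration, not Phonon's API: `simulate` is a hypothetical stand-in for the expensive acoustic simulation that runs offline, each probe stores only a decay time and a wet level, and at runtime the listener gets an inverse-distance-weighted blend of the baked probes so the reverb changes smoothly as they move.

```python
import math
from dataclasses import dataclass


@dataclass
class ReverbProbe:
    position: tuple  # (x, y, z) in world units
    rt60: float      # reverb decay time in seconds, computed offline
    wet_gain: float  # reverb send level, 0.0 to 1.0


def bake(probe_positions, simulate):
    """Offline step: run the costly simulate(position) -> (rt60, wet_gain) once per probe."""
    return [ReverbProbe(pos, *simulate(pos)) for pos in probe_positions]


def reverb_at(listener, probes, eps=1e-9):
    """Runtime step: cheap inverse-distance-weighted blend of the baked probe values."""
    weights = []
    for probe in probes:
        d = math.dist(listener, probe.position)
        if d < eps:  # listener is standing on a probe: use it directly
            return probe.rt60, probe.wet_gain
        weights.append(1.0 / d)
    total = sum(weights)
    rt60 = sum(w * p.rt60 for w, p in zip(weights, probes)) / total
    wet = sum(w * p.wet_gain for w, p in zip(weights, probes)) / total
    return rt60, wet
```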

Phonon SoundFlow is still in development, but it will eventually provide geometry-based occlusion/obstruction. The sound paths will also be “baked” during the design phase, and then applied to the listener’s position in real time.

There is also Phonon Walkthrough, which performs real-time sound propagation (including all the above features). It is in beta at the moment, and from my understanding it is quite resource-intensive. So unless you are working on a very specific type of experience and have the machine to run it, it’s currently not a viable solution for the majority of projects.

You can find both interactive and video demos of all Impulsonic products on their demo page.

Unique Features

- Phonon Reverb takes a unique and interesting approach to setting up reverb in the scene. It can certainly be very useful even outside of VR, since it replaces the need for multiple Reverb Zones. Just tag your mesh geometry with materials, and “bake” the reverb. However, it is still not applicable to the subject at hand (3D audio), since at the moment it’s not compatible with Phonon 3D.

Upcoming Features

- Compatibility between Phonon 3D and Phonon Reverb

- The release of Phonon SoundFlow beta in Spring 2015

- Support for more platforms and integrations

Implementation

In my tech demo I would have liked to use both Phonon 3D and Phonon Reverb in tandem, but unfortunately they’re still not compatible, so I just focused on Phonon 3D by itself.

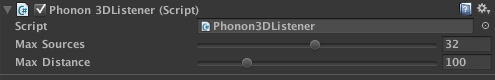

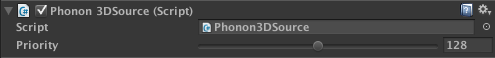

This plugin follows the already familiar implementation method. You add the Phonon 3D Listener component to your main listener in the scene, and Phonon 3D Source component to a sound emitter with an existing Unity AudioSource.

As you can see, the customization options are quite limited. You can tweak the maximum number of spatialized sources, maximum distance past which the sources will not be spatialized, as well as set the priority of any given source.

The documentation was clear and easy to follow, and the implementation would have been straightforward had I not encountered a few bugs. So even though I was working with the first official release, it still felt a bit like a beta, and left me feeling like the plugin is not quite ready for prime time.

On the positive side, the developer has been very responsive and quick to fix any issues, so I’m hopeful that they will tighten it up in no time.

Results

[youtube]https://www.youtube.com/watch?v=gkvfa3lUwv0[/youtube]

The spatialization is convincing as far as azimuth and elevation cues are concerned. However, similar to Oculus’ plugin, there’s an unnatural shift between left and right ears, and the overall sound quality also seems to be slightly degraded.

You can find performance test results, compatibility and pricing information at the end of the article.

RealSpace 3D Audio by VisiSonics

Version tested: 0.9.9 (beta)

VisiSonics, a tech company founded by the University of Maryland Computer Science faculty, has more than just a 3D audio plugin in the works. At the moment they also have offerings for 3D sound capturing and authoring tools, and future products include tools for analysis of listening spaces, surveillance, diagnostics, and more. The company has the benefit of and exclusive license to the extensive research and patents developed by the founding members.

For the purpose of this article, I will focus on their real-time 3D audio plugin, RealSpace 3D Audio.

Unique Features

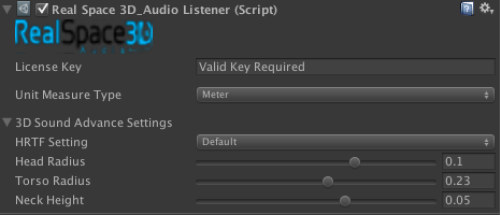

- HRTF settings: in Unity Editor you are able to select from a few HRTF presets, as well as tweak some measurements, such as head and torso size.

- Extensive API: Most options in the Unity Editor are available via API calls.
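Why would head size matter? One classic approximation, Woodworth's spherical-head formula, estimates the interaural time difference (ITD), the gap between a sound reaching the near ear and the far ear, from the head radius a, the speed of sound c, and the lateral angle θ: ITD = (a / c)(θ + sin θ). The sketch below is only that textbook model, not RealSpace's HRTF processing, and the 8.75 cm default radius is a commonly quoted average rather than a value from the plugin.

```python
import math


def woodworth_itd(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate interaural time difference in seconds for a distant source.

    Uses Woodworth's spherical-head model, ITD = (a / c) * (theta + sin(theta)),
    where theta is the lateral angle from the median plane. Front and back
    positions with the same lateral angle (e.g. 30 and 150 degrees) share an ITD,
    which is one reason telling front from back relies on spectral (HRTF) cues.
    """
    az = abs(((azimuth_deg + 180.0) % 360.0) - 180.0)  # fold into [0, 180]
    theta = math.radians(min(az, 180.0 - az))          # lateral angle, 0 to 90 degrees
    return (head_radius_m / speed_of_sound) * (theta + math.sin(theta))
```

At 90 degrees this gives roughly 0.66 ms for the default radius, and the value scales in proportion to the head radius, which is one reason adjustable head measurements can matter.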

Upcoming Features

- Occlusion/obstruction

- Exposing HRTF settings via scripting, so that those selections can be made by the player

- Plans to develop their own HRTF database, since current HRTF databases don’t have any information about sound coming from below the listener’s waist level

You can find some videos and interactive demos over at RealSpace 3D Audio demo page.

Implementation

Integrating this plugin was a bit of a chore. While it still follows a similar implementation structure as the others, it breaks a few paradigms in unexpected ways, which hindered my already established workflow for this project.

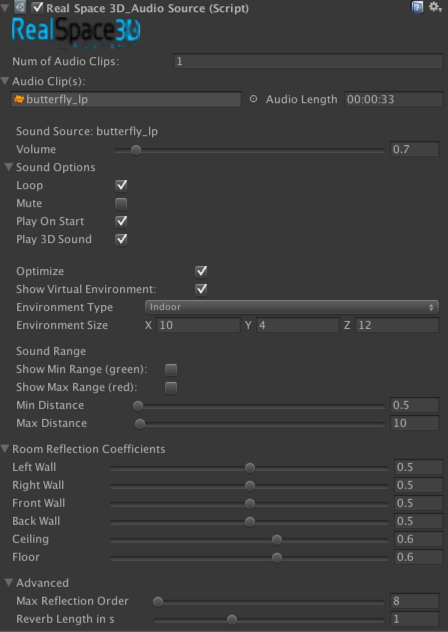

As with the other plugins, there are two main components in play: RealSpace 3D Audio Listener, which gets added to the main listener in the scene, and RealSpace 3D AudioSource replaces the Unity AudioSource component.

RealSpace 3D Audio Listener provides options to tweak HRTF settings, and also set the world unit measure type for correct environment sizing.

RealSpace 3D AudioSource replaces the Unity AudioSource component, however it doesn’t provide all the options of the Unity AudioSource, such as Pitch and Doppler.

Also, it changes some of the established concepts of the AudioSource component, which ended up hindering my specific workflow. I brought up my concerns with the developer, and I won’t go into much detail (it will not be relevant to anyone else), but here are a couple of small changes I’d like to see with this component:

- make the Audio Clip not a required field

- add the ability to dynamically pass in a loaded AudioClip object to the RealSpace 3D AudioSource

These two small changes would have made my life a lot easier.

Another feature that I found a bit counter-intuitive and time-consuming is setting the environment properties for each RealSpace 3D AudioSource. Not only do you need to define the environment properties for each source individually, you have to repeat this for each source any time you’d like to make a change to the environment. There are a couple of other issues with the current handling of the environment, but from my understanding it is getting a complete overhaul in the next iteration, so I will not go into details here.

Lastly, the documentation could use some work. There is a lot of information (the documentation is 44 pages long), but a lot of it feels unnecessary (how to download and install Unity), and the essential stuff is hard to find. I would suggest breaking up the documentation into two or three files: the actual plugin documentation (how to set it up and what each field does), API reference, and perhaps a separate document discussing the demo scene.

Results

Unfortunately, I cannot comment on the sonic results at the moment. I encountered some performance issues, so I wasn’t able to run the full scene without stuttering. The good news is that the developer has been alerted to the performance issues, and they have since been able to find and resolve a few culprits. I’m hoping to update my demo app with the working RealSpace 3D plugin in the near future.

You can find performance test results, compatibility and pricing information at the end of the article.

Performance Testing

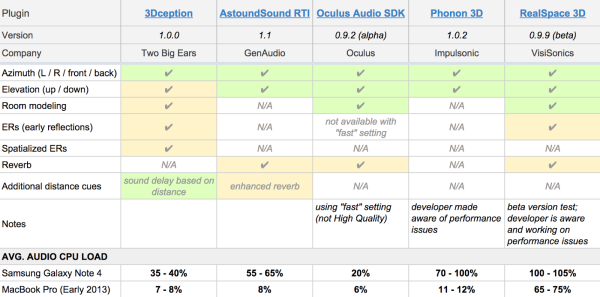

Let me preface the performance test results by saying that I did not attempt any kind of performance optimization on any of the plugins. As you have seen from the screenshots, some of these plugins provide options to trade some quality for performance. I believe you can gain significant performance increases with few audible differences, if used in a smart way.

But for me, the point of this exercise was to get a rough idea on how far I could push things and how these plugins perform “out of the box”, so I left the default settings on most everything.

There are a total of 15 Audio Sources in the scene, and up to 14 voices may be playing simultaneously (the scene is mostly scripted with some random elements). I tested the performance of each scene on a mobile device (Samsung Note 4), as well as my laptop (MacBook Pro 2013) using the Unity Profiler.

Unfortunately, Unity Profiler doesn’t provide a way to get the average or median numbers over time, short of writing a custom profiling script. So I opted to use the “eyeball” method – just testing the app, and watching the Audio CPU numbers to get the rough range. So I’ll be the first to admit that my approach wasn’t exactly scientific.

But it was helpful nonetheless, since I was able to identify performance issues with two plugins and alert the developers.
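For anyone who wants firmer numbers than the eyeball method, a common approach is to log the Audio CPU reading every frame (in Unity that logging would be a small custom C# script, not shown here) and summarize it afterwards. A minimal Python sketch of the summarizing step, assuming the readings have been exported as a list of percentages; `summarize_cpu` is a hypothetical helper.

```python
import math
import statistics


def summarize_cpu(samples):
    """Summarize a non-empty list of per-frame Audio CPU readings (percent)."""
    ordered = sorted(samples)
    # Nearest-rank 95th percentile: about 95% of frames are at or below this value
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return {
        "mean": statistics.fmean(ordered),
        "median": statistics.median(ordered),
        "p95": ordered[rank - 1],
        "min": ordered[0],
        "max": ordered[-1],
    }
```

Reporting the median alongside the 95th percentile separates typical load from occasional spikes, which can matter on mobile, where spikes are what tend to show up as stutter.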

So without further ado, here is how the plugins performed (click image to go to spreadsheet with additional information).

NOTE: Checkmarks symbolize the features of each plugin in the test setting. Checkmarks highlighted in green are the default plugin features (cannot be disabled or enabled). Checkmarks highlighted in yellow are features that can optionally be disabled, but were enabled in the test app. N/A means that this feature is not available in a plugin.

[UPDATE] Per readers’ requests, I also tested Oculus Audio SDK with the HQ setting on the Note 4. This resulted in ~60-70% Audio CPU load.

As you can see, Phonon 3D and RealSpace 3D had some issues with the mobile performance, while Oculus Audio SDK had the best performance numbers, followed by 3Dception and AstoundSound.

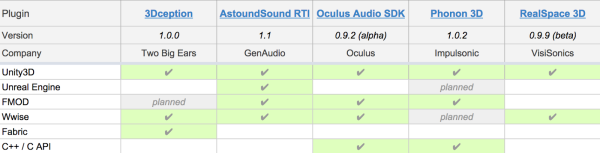

Even More Data

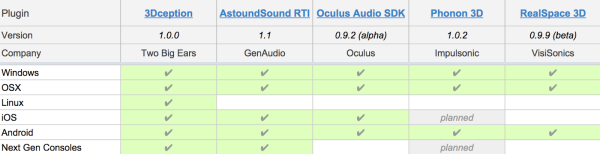

As if this still wasn’t enough information, I also thought it would be helpful to put together some charts of the current OS/platform compatibility and supported audio engine integrations of the plugins. This information is also available as a spreadsheet online, so click the images below to get to the latest information.

Integrations

Compatibility

Verdict

Hear it to believe it (or was it the other way around)?

Congratulations, you’ve made it through the boring parts! (Or more likely you just skipped to the end)

I tried to be as objective as possible, and provide my honest feedback and opinion on the plugins. Still, at this point you might be asking “So which is the best plugin?”

Well, if there is one thing we all learned from #TheDress (and also this interesting bit), it’s that perception is a subjective and mysterious thing. Our brains use different cues when trying to interpret information, and in different people some cues may be more dominant than others.

My main goal was to provide tools and as much information as possible, in order to help you make the decision.

So here are the tools again, all in one place:

- Skeletons vs 3D audio – free VR app on Google Play. Download it to your Android device and use it with a VR viewer, such as Google Cardboard

- Playlist with walkthrough videos of each plugin, plus the “Stereo Only” video for comparison

- Online spreadsheet with performance test results and other useful data

And since I am likely one of very few people out there who tried working with all of these plugins, I suppose my opinion might be worth something.

At the time of writing, 3Dception and AstoundSound would be my top picks in terms of sound quality, performance, stability, and ease of use. 3Dception feels like the most mature plugin, with lots of advanced features, and AstoundSound has the advantage of supporting additional hardware setups. Plus, I think that both of these plugins sound great.

The other plugins in the list all have a lot of potential, so I would recommend keeping an eye on them.

Oculus Audio SDK is free to use, but has some work to do in terms of sound quality, user interface, and stability.

Phonon 3D needs to address performance, and then continue to integrate their three plugins so that they can be used in tandem.

RealSpace 3D has the foundation of great research, but it has work to do in terms of performance and usability.

In Conclusion

This is an exciting time, because we now have several viable options for implementing 3D audio in VR projects. Each developer has their own unique approach to the problem, and each one is passionate about providing the best solution. In any case, competition is great, and it will only push the technology further, which will in turn allow us to create better content, and that’s a win for everyone.

I would like to thank all the developers for their support and for providing these incredible tools. Also, I’d like to wrap up with a wish-list that would be my personal Holy Grail of 3D audio technology, and I’d love to see these features available someday.

- Better room modeling: I really like the current approach of 3Dception to room modeling. Until we get fast geometry-based environment modeling, this is a solid approach, which produces convincing results – I feel like the other developers could take a cue from this approach.

- Even better room modeling: in-engine geometry-based real time reflections. I would love to be able to tag my geometry in engine with acoustic materials, and have the plugin create proper reflection and absorption in real time. Understandably, this is a tough nut to crack, but there is more than one way to crack it, so I’m hopeful that we’ll have a workable solution soon.

- Occlusion / obstruction: real time, and based on world geometry.

- Directionality of sound (sound cone). This is especially relevant for human speech. I know that there is already a way to achieve this with middleware and coding, but I would love to see a robust plug-and-play solution.

- Gain bump: This is a really useful feature in Oculus Audio SDK, and I would like to see it implemented in other plugins. Taking this a step further, it could somehow be tied in with loudness metering.

- Support for microphone input: taking the microphone input, applying the acoustic properties of the environment to it in real time, and playing it back to the user. Again, this is likely already possible, but not out of the box. I would love to see a plug-and-play solution.

- Virtual speaker setup: I’d love to be able to take a multi-channel (say, stereo) sound, still treat it as one sound (Play, Stop, etc), but be able to position “left” and “right” speakers in the world independently.
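The sound cone item in the list above can be illustrated with the inner-angle / outer-angle / outer-gain model familiar from OpenAL: full gain inside the inner cone, a fixed reduced gain outside the outer cone, and a blend in between. This is a minimal sketch, not how any of the reviewed plugins implement directivity; the linear blend and the default angles are assumptions chosen for illustration.

```python
import math

def cone_gain(source_pos, source_forward, listener_pos,
              inner_deg=60.0, outer_deg=180.0, outer_gain=0.25):
    """Directional gain for a source facing `source_forward` (OpenAL-style cone).

    inner_deg and outer_deg are full cone angles. Inside the inner cone the
    gain is 1.0, outside the outer cone it is `outer_gain`, and in between it
    is linearly interpolated (an assumption; other curves are possible).
    """
    to_listener = [l - s for l, s in zip(listener_pos, source_pos)]
    dist = math.sqrt(sum(c * c for c in to_listener))
    fwd_len = math.sqrt(sum(c * c for c in source_forward))
    if dist == 0.0 or fwd_len == 0.0:
        return 1.0  # listener at the source, or no facing direction: no cone effect
    cos_angle = sum(a * b for a, b in zip(to_listener, source_forward)) / (dist * fwd_len)
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    inner_half, outer_half = inner_deg / 2.0, outer_deg / 2.0
    if angle <= inner_half:
        return 1.0
    if angle >= outer_half:
        return outer_gain
    t = (angle - inner_half) / (outer_half - inner_half)
    return 1.0 + t * (outer_gain - 1.0)

# A talker at the origin facing +z; listeners in front, at 45 degrees, and behind.
for listener in [(0.0, 0.0, 3.0), (3.0, 0.0, 3.0), (0.0, 0.0, -3.0)]:
    print(listener, round(cone_gain((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), listener), 3))
```

A fuller model for speech would also low-pass filter off-axis sound, since a real voice loses high frequencies behind the talker rather than only getting quieter.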

I hope this resource was helpful, and I welcome any questions, comments, and corrections. Also I would love to hear what is on your wish-list of the perfect 3D audio solution.

Anastasia Devana is a freelance composer and programmer with a special interest in combining the two disciplines. Get in touch at www.anastasiadevana.com or on Twitter @AnastasiaDevana.

When the real developers “get it”, they will all realise that you would have to configure the software for each listener’s ears EVERY TIME someone different uses the “technology”. The downfall with binaural is that everyone’s ear shape is completely different: even a fraction of an inch bigger or smaller, the pinna folds and parabola make a huge difference to the HRTF information that the listener perceives through their own hearing, and hearing that information slightly differently means TOTAL HRTF confusion.

As an avid binaural recordist, I have made some interesting realisations about binaural audio. As we are “blind” to the superficial “how does it work”, we have to use our hearing to work out what is really happening to the sound that makes it do what it does when our brain hears it. It is very simple! However, due to those individual ear-shape problems, we will NEVER have a plug-in that works perfectly straight out of the box for anyone to use; it would need tweaking for the individual. That very process needs to be sorted out before it really works for the plug-in guys. In the meantime, I have perfected binaural playback for me. Pity you lot will not hear it as I do: quite convincing for some, totally HRTF-confusing for others (for the reasons stated). Have a listen to some of my HRTF recordings here:

http://soundcloud.com/binauralhead

To make perfect HRTF recordings for yourself, make a cast of your own ears and place mics at the canal entrance. It can be done very cheaply if plaster cast is used, and cheap CCTV microphones from China do very nicely too.

Boy the grass is getting long in here………..

Seeya

That’s a very good point, and I think eventually there will have to be some sort of configuration step to select the appropriate HRTF for the user. It’s just not an easy fix in terms of user interface.

Ideally, there would be an easy end-user solution for creating individual HRTFs. I believe there is work happening in that direction as we speak.

As a second best option, there could be some kind of calibration step, where a user is presented with several sounds, and has to “guess” where the sounds are coming from. Through this process of calibration the correct HRTF preset would be selected from the database and set as the user preference. I definitely see something like this for “console”-grade VR solutions.
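The calibration idea can be sketched as a scoring loop: render a few test sounds with each candidate HRTF preset, record where the user points, and keep the preset with the smallest average angular error. The preset names, the trial format, and the azimuth-only scoring are assumptions for illustration; a real procedure would also test elevation and probably count front/back reversals explicitly.

```python
def angular_error(true_deg, guess_deg):
    """Smallest absolute difference between two azimuths, in degrees (0..180)."""
    return abs((guess_deg - true_deg + 180.0) % 360.0 - 180.0)

def mean_error(trials):
    """Average angular error over (true_azimuth, guessed_azimuth) pairs."""
    return sum(angular_error(t, g) for t, g in trials) / len(trials)

def pick_preset(results):
    """results: hypothetical mapping of preset name -> list of (true, guessed) azimuths."""
    if not results:
        raise ValueError("no presets tested")
    return min(results, key=lambda name: mean_error(results[name]))

# Made-up calibration session: with preset_a the user hears a source at 30 degrees
# (front right) as coming from 150 degrees (back right), a front/back reversal.
session = {
    "preset_a": [(30.0, 150.0), (0.0, 10.0), (270.0, 260.0)],
    "preset_b": [(30.0, 40.0), (0.0, 350.0), (270.0, 275.0)],
}
print(pick_preset(session))
```

Wrapping the difference into the range -180..180 matters here: a guess of 350 degrees for a source at 0 degrees is a 10 degree miss, not a 350 degree one.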

At least for mobile solutions we have at the moment (Google Cardboard, Gear VR), this is probably a bit overkill.

It might also be good to have some kind of standard. For example, the user goes through the calibration process, and their preferred HRTF is then stored as a setting on their device. 3D audio solutions would then use this specific preset in their own implementation.

AES just published a standard for storage of HRTFs and other data:

http://www.aes.org/press/?ID=293

That all said, while individualized HRTFs do help, it’s still possible to get a very compelling 3D audio experience even with generic HRTFs, especially if you throw in head tracking. Begault confirmed that head tracking actually does more to reduce front/rear confusion than individualized HRTFs do.
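Why head tracking helps with front/rear confusion can be shown with a simplified spherical-head model (Woodworth’s ITD approximation). Mirror-image front and back sources produce the same interaural time difference, but once the head turns, their ITDs move in opposite directions, a cue that a static rendering cannot provide. The head radius and speed of sound are assumed typical values, and the model ignores the spectral (pinna) cues that also help resolve the ambiguity.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed typical adult head radius, in metres
SPEED_OF_SOUND = 343.0   # m/s, air at roughly 20 degrees C

def itd_seconds(azimuth_deg):
    """Woodworth spherical-head ITD for a source at `azimuth_deg`.

    Convention: 0 = straight ahead, 90 = right, 180 = behind, 270 = left.
    asin(sin(az)) folds rear angles onto the front hemisphere, so mirror-image
    positions (e.g. 30 and 150) give the same ITD: the cone of confusion.
    """
    lateral = math.asin(math.sin(math.radians(azimuth_deg)))
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (lateral + math.sin(lateral))

def relative_azimuth(source_deg, head_yaw_deg):
    """Source azimuth relative to the head after turning `head_yaw_deg` to the right."""
    return (source_deg - head_yaw_deg) % 360.0

front, back = 30.0, 150.0
for yaw in (0.0, 10.0):
    f_us = itd_seconds(relative_azimuth(front, yaw)) * 1e6
    b_us = itd_seconds(relative_azimuth(back, yaw)) * 1e6
    print(f"head yaw {yaw:4.0f} deg: front source {f_us:6.1f} us, back source {b_us:6.1f} us")
```

With the head still, the two sources are indistinguishable by ITD; after a 10 degree turn to the right, the front source’s ITD shrinks while the back source’s grows, which tells the listener which one it is.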

Brian,

In general, I think you’re right, and for the most part generic HRTFs work just fine. But there’s still that subset of people (at least from anecdotal evidence), for whom the generic ones don’t work at all. Personalized HRTFs would help in that case. I think anything we can do to calibrate and personalize the VR/AR experiences is great, and every little bit helps with immersion.

I do remember hearing about the AES standard announcement, so that’s definitely a step in the right direction!

Fantastic post Anastasia! This is a lot of work, congratulations.

A quick comment about measuring performance:

I think I speak for all the companies listed here when it comes to measuring performance in Unity. In most cases, the numbers you see in Unity would not translate to other systems (such as FMOD, Wwise, and native apps). All the 3D audio plugins use an API in Unity that has a high performance overhead. For example, the 7-8% for 3Dception on OSX would be about 2.5% in Wwise. This is a huge difference.

This difference would be similar for the other plugins too, but of course the numbers would vary depending on the algorithm. Unity is aware of this, and given the improvements they have been making, I expect this will be sorted out at some point. Until then, we usually recommend that our customers use native Unity for smaller projects, and a middleware tool for large-scale projects.

Great stuff and very valid points about the features you would like to see!

Funny how your comment was approved and my seemingly unorthodox one has been rejected.

Hi Alan,

Contributors to the site have their comments approved automatically, for obvious reasons. Other comments have to be approved manually, since we get 10 spam comments for every authentic one.

Thanks for pointing it out! :)

Haven’t had a chance to really get into Unity 5 audio system yet, but I hear it’s a big improvement over 4.

Yes, there’s some great new stuff — especially under the hood. Relevant to this conversation:

* Sample accurate scheduling: great for working with VR video and ensuring strong synchronisation

* Audio codec options: ADPCM/Vorbis, very useful for optimising performance

* Background streaming: multi-threaded audio streaming, this ensures that long audio files can be streamed without glitches (a major issue in Unity 4)

* Revamped audio configuration setup: setting hardware options (sample rate, buffer size) was painful in Unity 4. With 5 they can be set quite easily, and the audio system can be “reset” at any point. Again, this is useful for optimising playback and dropping sample rates on lower-powered devices
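The sample-rate and buffer-size settings mentioned above trade latency against CPU headroom. Each buffer covers buffer_frames / sample_rate seconds, and all audio processing for that buffer (spatialization included) has to finish within that window. The buffer sizes and the 10% CPU share below are arbitrary examples, not Unity defaults.

```python
def buffer_duration_ms(buffer_frames, sample_rate_hz):
    """Time covered by one audio buffer, in milliseconds."""
    return 1000.0 * buffer_frames / sample_rate_hz

def processing_budget_ms(buffer_frames, sample_rate_hz, cpu_share):
    """Time available per buffer if audio may use `cpu_share` (0..1) of one core."""
    return buffer_duration_ms(buffer_frames, sample_rate_hz) * cpu_share

for rate in (48000, 44100, 24000):
    for frames in (256, 1024):
        print(f"{rate:>6} Hz, {frames:>5} frames: "
              f"{buffer_duration_ms(frames, rate):6.2f} ms per buffer, "
              f"{processing_budget_ms(frames, rate, 0.10):5.2f} ms at a 10% CPU share")
```

Halving the sample rate halves the number of samples per second the spatializer has to process, which is why dropping the rate helps on lower-powered devices, at the cost of high-frequency content.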

I plan to write about this in detail on the TBE blog soon. We are frequently asked about optimising audio playback in Unity on mobile devices.

Looking forward to that blog post! :)

Fascinating and thorough. Thanks for all the research on this, Anastasia! I remember early work being done on 3D audio when I worked at Dolby years ago. My problem with 3D audio on headphones is that Front never sounds Front; it sounds either above me or in the middle of my skull. Some people flip front and back, so there are some subjective perceptual differences, apparently.

I’m curious, are you getting the same effect with every plugin in the demo app (or the videos)?

It could be a difference in perception, or perhaps none of the HRTFs used in the plugins match your unique anatomy. When you were working at Dolby back in the day, did you get your personal HRTFs recorded, by chance?

Excellent article, and it is definitely a ‘must-read’ for anyone venturing into 3D audio for VR/AR.

One technical question… What was the sample rate of the Android implementation?

Many Android systems default to 24kHz, and you have to explicitly ‘kick it’ up to 44.1kHz. The lower sampling rate affects both spatialization quality (since a lot of an HRTF’s effect is in the higher frequencies) and, of course, processor usage.
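The 24 kHz versus 44.1 kHz point comes down to the Nyquist limit: a system sampling at fs can only represent frequencies below fs/2. A minimal worked check, assuming a fixed processing cost per sample (real HRTF convolution costs also depend on filter length):

```python
def nyquist_hz(sample_rate_hz):
    """Highest representable frequency for a given sample rate."""
    return sample_rate_hz / 2.0

def samples_per_second_ratio(rate_a_hz, rate_b_hz):
    """How many times more samples per second rate_a produces than rate_b."""
    return rate_a_hz / rate_b_hz

for rate in (24000, 44100):
    print(f"{rate} Hz -> content up to {nyquist_hz(rate) / 1000:.2f} kHz")
print(f"44.1 kHz processes {samples_per_second_ratio(44100, 24000):.2f}x the samples of 24 kHz")
```

At 24 kHz, everything above 12 kHz is gone before the HRTF is even applied, which removes part of the high-frequency range mentioned in the comment, while 44.1 kHz costs roughly 1.8 times as many samples to process under the fixed per-sample assumption.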

Thank you, Brian!

I “forced” the sample rate to 44.1k for this app.

Thanks, Anastasia, for your evaluation. You definitely pointed out some issues to us: some we were aware of and have already addressed, and others we were not aware of but are addressing as we speak. Bruised ego aside ;) this research was an eye-opener and much needed. We thought we were focused before, but now it’s “game on”: we are laser focused and in high a$$-kicking mode. :-D

We are looking forward to submitting our next release to you and having you re-evaluate it.

Again, very in depth and impressive article, and kudos to you for putting it together, and we wish everyone currently in the 3D Audio space and those on the fringes much success!

-Rod

Thank you, Rod! I appreciate all the support while writing the article, and I’m looking forward to testing out the next release of RealSpace 3D Audio!

Awesome work and very well written article! Thanks a lot.

One aspect I am curious about, which you didn’t mention since you are focusing on Android/Cardboard, is performance/latency on PC, and hardware acceleration such as AMD’s TrueAudio on cards/APUs that have the chip.

If VR is taking off, 3D audio will be key. And performance crucial. So I’d expect TrueAudio style dedicated audio chips to become the norm even on mobile. So the AMD TrueAudio tech might be a glimpse into the future about how much such solutions help.

Have you perhaps played with these solutions on PC as well, and do you have a qualifying AMD card? Any insights to share?

I don’t have a Windows PC or the AMD TrueAudio card, but I did test the app on my MacBook Pro, and the results are included in the chart.

I also want to point out that as an audio person I am absolutely for dedicated audio chips on everything! :)

Thank you so much for sharing your investigations!

Alan, I am assuming your negative tone is due to your first comment being delayed in approval. That was my fault and in no way the fault of the author of this post. Only a few other people and I approve comments (Anastasia is not one of us), and we get to it when we get to it.

I hope in the future all of your comments will be constructive so I can keep approving them.

Hi Anastasia,

This is some incredible work! It’s a valuable resource for the VR community, and puts all the information about 3D audio tools in a single place for developers. We hope you continue to update the demo app and benchmarks as all 3D audio tool developers release updated versions of their tools.

Your feedback on Phonon 3D has been duly noted; we will work on resolving those issues in upcoming releases.

On another note, it’s actually incredible how far we’ve come with 3D audio. In 1990, NASA’s Convolvotron used hardware acceleration to render 4 binaural sources at a 33 Hz update rate with 32 ms latency. Today, with a single desktop CPU core, we can do up to 200 binaural sources, at a higher update rate (46 Hz) and with lower latency (21 ms). Just imagine the possibilities with dedicated hardware!

Lakulish,

Thank you so much for all the support in writing the article!

I’m definitely planning to keep the app and the spreadsheet up-to-date, and I’m looking forward to new Phonon releases! :)

Rob, front/rear reversal is a definite issue with binaural audio. But it occurs not only with HRTFs (synthesized binaural) but also just in nature.

At GDC in 2014 I gave a talk on 3D sound where I did a little demo, bringing someone up from the crowd and having them close their eyes. I then snapped my fingers at various locations around them, and had them point to where they thought the snapping occurred. Let’s just say they didn’t score 100%. (The talk was “3D audio: Back to the Future” if you have access to GDC Vault.)

One of the challenges in binaural 3D sound is actually fighting an (unrealistic) expectation of how good we, in nature, are at localizing sounds in space.

We’ll probably have a few special sessions on 3D sound at GameSoundCon this year.

Brian,

This is so true. Since I started working with 3D audio, I’ve been paying attention to how well I can localize sounds in the real world, and it’s actually not that accurate.

I think a lot of our natural sound localization is mixed with visual cues and our existing knowledge of the world. For example, if I hear the sound of an airplane or a car through my open window on the 3rd floor, I know that the sounds are coming from above and below, respectively. But I think a huge part of that is affected by the understanding that airplanes are in the sky and cars are on the road.

>But I think a huge part of that is affected by the understanding that airplanes are in the sky and cars are on the road.

Exactly! There’s a reason why helicopters and jet flybys are often used in 3D audio demos to demonstrate elevation effects. (the fact they are very broadband doesn’t hurt either…)

Our ears didn’t evolve to give us high-precision localization, but rather to serve as an ‘early warning system’ to alert us to motion around us, especially motion outside our field of view. Once we hear something (say, unexpected rustling of grass in a general area behind us), we turn our head and use our visual sense to pinpoint the source of the motion (e.g. the tiger).

Incredible article! If RealSpace had performed, you would find that the interpolation across the sagittal plane is spotty there too (as in the Oculus Audio SDK). There’s some bug in RealSpace that prevents interpolation from happening smoothly when you cross from 359° azimuth over to 1°, or vice versa. You hear the volume spike from left to right or right to left as your nose crosses the 0-degree barrier. It’s unfortunate for them that this is the exact area your nose is most likely to be pointing toward for the majority of the experience.
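The kind of jump described here is what happens when azimuth is interpolated as a plain number: going from 359° to 1° naively sweeps back through 180°, behind the listener. We don’t know what causes the behaviour in RealSpace; this sketch only shows the generic fix of interpolating along the shorter arc.

```python
def naive_lerp(a_deg, b_deg, t):
    """Plain linear interpolation: wrong across the 0/360 boundary."""
    return a_deg + t * (b_deg - a_deg)

def lerp_azimuth(a_deg, b_deg, t):
    """Interpolate between two azimuths along the shorter arc, result in [0, 360)."""
    diff = (b_deg - a_deg + 180.0) % 360.0 - 180.0  # signed shortest difference
    return (a_deg + t * diff) % 360.0

for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"t={t:.2f}  naive={naive_lerp(359.0, 1.0, t):6.1f}  "
          f"shortest-arc={lerp_azimuth(359.0, 1.0, t):6.1f}")
```

The same idea applies when blending between the two nearest HRTF measurement nodes: both the node search and the blend weights need to treat 0° and 360° as neighbours.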

Hello AJ,

Could you send a small sample project where you are experiencing this? I can take a look into it. Please send an email to [email protected] with a Dropbox link or some other method.

Much appreciated,

Rod

Hi Rod,

I noticed the sagittal plane issue in the Unity demo from your website a few months back. I haven’t tried the Oculus SDK but from what I understand, they’re using your HRTFs, yes? This article claims this was happening with the Oculus SDK:

“the sound seems to shift unnaturally between left and right ears with a slight turn of head”

That’s the same experience I had with RealSpace, but only when moving through the forward sagittal plane. It sounded like the nodes weren’t interpolating across the plane, but snapping instead. The ITD is subtle enough that it’s hard to hear a snap like that unless the nodes are farther apart, but the ILD was noticeably unnatural in either direction as the source crossed the plane.

Can you post the source to this? We don’t test on Cardboard, we use GearVR for our testing, and we’re not hearing any elevation cues, so that’s pointing to a likely compatibility issue which we can work to resolve. At the very least we can test on GVR and see if we’re hearing the same issues.

I’m also slightly concerned that this test is being viewed as somewhat authoritative by some, when it’s really a first pass. It’s a single user subjective interpretation, which doesn’t meet very rigorous standards. This is great for a quick smoke test, but there’s always danger when one (well written and comprehensive!) article ends up as gospel.

Unreal Engine integration is a little confusing. Epic handles integration via third party (Audiokinetic, FMOD, etc.) source code merges, since there is currently no “Unreal plugin” architecture. For this reason anyone that has Wwise or FMOD support automatically has support for Unreal.

Microphone support is typically something that an engine/app would handle. At least for our SDK, actual audio input and output isn’t something we manage, since that runs into too many system configuration and compatibility issues. We have applications (internally) using recorded voice and it works very well; it’s just up to the individual developer to plumb from mic input to spatialization.
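Plumbing mic input into a spatializer usually means a block loop: read a mono block from the capture device, hand it to the spatializer along with the source’s current position, and write the stereo result to the output. In this sketch the capture and playback sides are replaced by plain Python lists, and the “spatializer” is a simple constant-power pan standing in for a real HRTF renderer; none of these function names belong to any of the SDKs discussed.

```python
import math
from typing import Callable, Iterable, Iterator, List, Tuple

Block = List[float]
StereoBlock = List[Tuple[float, float]]

def constant_power_pan(block: Block, azimuth_deg: float) -> StereoBlock:
    """Hypothetical stand-in spatializer: constant-power pan, -90 (left) .. +90 (right).

    A real HRTF spatializer would replace this function; it only exists so the
    pipeline below runs on its own.
    """
    az = max(-90.0, min(90.0, azimuth_deg))
    theta = (az + 90.0) / 180.0 * (math.pi / 2.0)  # maps -90..90 to 0..pi/2
    gain_l, gain_r = math.cos(theta), math.sin(theta)
    return [(s * gain_l, s * gain_r) for s in block]

def run_pipeline(mic_blocks: Iterable[Block],
                 spatialize: Callable[[Block], StereoBlock]) -> Iterator[StereoBlock]:
    """Pull mono blocks from the input, spatialize each one, yield stereo blocks."""
    for block in mic_blocks:
        yield spatialize(block)

# Stand-in for captured mic blocks; a real app would read these from the device.
fake_mic = [[0.0, 0.5, 1.0], [0.5, 0.0, -0.5]]
for stereo in run_pipeline(fake_mic, lambda b: constant_power_pan(b, 30.0)):
    print(stereo)
```

Keeping the spatializer behind a plain function boundary makes it easy to swap the stand-in for whichever plugin’s processing call the engine exposes, while the capture and output code stays engine-specific.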

Side comment @ Alan: cast ears with microphones in the canals don’t make perfect recordings, since the result also depends on material, head width, and head shape. In particular, head shadowing is a large component of near-field recordings. ITD has a very strong influence on lateral resolution, and if you record and/or play back with large variances in ITD, it can make a big difference.

Anyway, thanks for doing this round-up. It’s great to have people start to look at audio seriously in VR, something I think we can all agree on =)

Brian,

Thank you for chiming in!

I’m not comfortable with providing the whole source for download. It contains full versions of plugins, which were made available to me by the developers for the purpose of this article. But I would be happy to take out all the other plugins, and provide you a project with just Oculus Audio SDK.

You don’t actually need the physical Cardboard to test a Cardboard app. All the head tracking is done using the phone’s gyroscope, so it will work on most Android phones (including the Note 4) without the actual Cardboard “holder”. So you could just download the Skeletons demo app from the Google Play Store to your Note 4, and it would work.

You could also lift the USB plug on the Gear VR and place the phone underneath it to get the visual experience along with the audio.

I agree that this is only one article on the subject, and one person’s take on it. Even though I tried to be as thorough and objective as possible, this is still not peer-reviewed scientific research (and doesn’t claim to be). I look forward to more resources coming out: reviews, demos, tutorials, more rigorous performance tests, etc.

I’m sure I speak for everyone here when I say that we’re all working towards the same goal: advancing the technology in order to provide better, more impactful experiences. I’m just trying to do my part :)

Great article! I too look forward to the day when, at the very least, some early reflections can be calculated in real time from the surrounding geometry and its surface materials.
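Geometry-based early reflections are often approached with the image-source method: mirror the source across each wall and treat each mirror image as a delayed, attenuated copy of the direct sound. A minimal first-order sketch for an empty rectangular (“shoebox”) room, assuming a single frequency-independent reflection coefficient instead of per-material absorption, and ignoring occlusion:

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed

def first_order_reflections(room, source, listener, reflection_coeff=0.7):
    """First-order early reflections in an empty shoebox room (image-source method).

    room: (Lx, Ly, Lz) in metres, with walls at 0 and L on each axis.
    Returns (delay_seconds, gain) for each of the six walls, sorted by delay.
    Gain is 1/r spreading times one shared reflection coefficient, a big
    simplification of real wall materials.
    """
    reflections = []
    for axis, length in enumerate(room):
        for wall in (0.0, length):
            image = list(source)
            image[axis] = 2.0 * wall - source[axis]  # mirror the source across this wall
            r = math.sqrt(sum((a - b) ** 2 for a, b in zip(image, listener)))
            reflections.append((r / SPEED_OF_SOUND, reflection_coeff / max(r, 1e-6)))
    return sorted(reflections)

room = (6.0, 4.0, 3.0)
for delay, gain in first_order_reflections(room, (1.5, 2.0, 1.2), (4.0, 2.5, 1.6)):
    print(f"{delay * 1000:6.2f} ms  gain {gain:.3f}")
```

Per-material behaviour could be approximated by giving each wall its own coefficient (and, more realistically, its own filter), which is what tagging geometry with acoustic materials would feed into.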

P.S. For those of us who develop with UE4, it sounds like the Oculus 3D Audio plugin will be integrated into the engine in the upcoming 4.8 release.

Hi Anastasia,

Could you please link/include the solution to the Oculus Audio SDK problem (the problem that caused the lack of spatialization)?

Hi Scott,

Sure! Basically, instead of calling the standard `audio.Play()`, you need to call the corresponding Oculus function.

First, go to OSPAudioSource.cs and add the keyword `public` in front of the function `void Stop()`. You will need this in order to call the `Stop()` function from your script.

Then, in your script, get a reference to the Oculus Audio component like so:

`OSPAudioSource ospAudioSource = gameObject.GetComponent<OSPAudioSource>();`

Then you can call the `ospAudioSource.Play()` and `ospAudioSource.Stop()` functions from your code to play and stop the sounds, and they will be spatialized properly.

Hi Anastasia,

Great, thanks for that! That’s a really handy “gotcha” to know.

As a professional musician and novice game designer, I can say that this article was a great help (and a very nice read too).

Given how often we see (pun intended) VR focus on visuals, it has been difficult to have an objective look into the different audio software solutions, and how they compare to each other.

The game/interactive composition I’m working on is really focused on positional audio and 3D music sources, so this article will come in handy as a tool for making my own tests. Thank you for the hard work!

The new version of RealSpace3D Audio, v0.10.0, is available at http://www.realspace3daudio.com, with a new workflow and new features. The official notice will be up next week, but it is already on the site for download.

Give it a try. It is much improved since the article was published.

Awesome! Looking forward to trying it out!

Will do! Thank you for the tip =)

Hi there, very helpful article thanks for the effort involved! Just wondering if you’ve revisited Realspace3D now they have redesigned the package?

I’m working on an updated article (as time allows), since there are new versions for almost all of the listed plugins.

Thanks for the analysis Anastasia. I’ve used or have had demos of a lot of binaural/HRTF processes and have encountered most of the issues pointed out in the threads.

We are looking into everything from subjective user tests and setup areas to mobile-based head scanning, in order to map a specific user’s head and ear shapes to the closest-matching HRTF impulse response. Looking forward to your research update.

Hey,

Awesome article, any news on an up-to-date version?

See this new article from Chris Lane! :) https://designingsound.org/2018/03/29/lets-test-3d-audio-spatialization-plugins/

Greetings, Anastasia! Great work. It is definitely a must-see article!

I was wondering about the audio spatialization design and its parameters regarding your benchmark sessions. Any comments?

Congrats!

Hi Anastasia,

Thanks a lot for your research and your work writing this article. I’m a post-production sound engineer who honestly didn’t know much about VR mixing until recently, but I had to look into this for possible future mixes for VR movies.

It helped me a lot in understanding, but I’m still wondering: as a sound engineer, it seems that only 3Dception is able to provide a full solution for sound engineers?

The other plugins are integrated as add-ons in game development software, right?

I’ve not found any other blog for sound engineers on this topic, and it seems (pardon me if I’m wrong) that all of the replies here are from game developers, or at least people who know how to write a few lines of code…

Anyway, thanks again!

I think 3Dception Spatial Workstation is currently the only commercially available solution for working in a DAW outside of Dolby Atmos (which can render down to binaural now, but I don’t know how flexible that binaural render is with respect to head movement/tracking). At the very least, it’s the only one that I’m aware of, Fabien.

Thanks for your reply, Shaun. Have you tried 3Dception Spatial Workstation yourself to do a VR mix, from the plugin in Pro Tools up to the Render App?

I’ve tried the demo in Pro Tools and it works really well. There is even a session template, which is very useful for understanding the output routing; you can automate every parameter of the plugin, and the bounced stereo file is totally accurate to what you’ve done in your session.

It seems Google VR SDK (which has its own spatial audio) was not an option at that time.

You should add it to that list

Great work by the way!

I would also like to add 3DAudio, a VST/AudioUnit plugin that allows you to produce 3D sound for any ordinary pair of headphones, all inside your favorite digital audio workstation (DAW) software.

Check out:

http://freedomaudioplugins.com/downloads/3d-audio/

Hi Anastasia,

Thanks for all the great work and attention to detail – this article really helped me dive into 3D audio a while back. Any updates now that a lot of these companies have been bought up by larger VR players, and any news on new independent developers?

See this new contribution from Chris Lane! https://designingsound.org/2018/03/29/lets-test-3d-audio-spatialization-plugins/

How did you change the spatializer plugins between scenes? I’ve been going crazy trying to design a similar experience!

Thanks!

This was before Unity built spatializer plugin selection into its settings. Unfortunately, you can’t do it anymore in Unity.

Nice article!

Recently, Steam Audio was released for free after the acquisition of Impulsonic.

And 3Dception (Two Big Ears) was acquired by Facebook.