For this month’s topic of Spectrum, I decided to take the word literally, as applied to color, and consider its relationship to sound. The impetus for this decision was a conversation I had with a co-worker, the late sound designer Stillwind Borenstein, about thirteen years ago. He explained his idea that, since both sound and color can be expressed or measured in frequency, someone ought to map that relationship so that one could see what color an A# was or hear what green sounded like. Admittedly, we had several drinks over the course of this discussion, and I never did the deep dive that Stillwind wanted.

Over the years I slowly realized that our revolutionary idea had been described in neuropsychology books and had been accomplished over a century ago, and that people continue to refine and examine this relationship. We even failed to make the connection between color, sound, and two very cool synthesizers we had used, one of which was authored by our co-worker!

To both honor Stillwind’s memory and dive deeper, I wanted to finally take a moment and explore the relationship between color and sound and the many ways the spectrum of one maps to the other.
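
One simple way to sketch the mapping Stillwind had in mind is to keep doubling a note's frequency, one octave at a time, until it lands in the range of visible light. The example below is only an illustration under stated assumptions: the visible band is taken as roughly 400 to 800 THz (exactly one octave wide), tuning is equal temperament with A4 at 440 Hz, and the function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def note_frequency(semitones_from_a4: int, a4_hz: float = 440.0) -> float:
    """Equal-tempered pitch, counted in semitones above A4 (assumed tuning)."""
    return a4_hz * 2 ** (semitones_from_a4 / 12)


def sound_to_light(freq_hz: float, low_thz: float = 400.0):
    """Double the frequency until it reaches the assumed visible band.

    Returns (octaves_shifted, frequency_in_THz, wavelength_in_nm).
    """
    octaves = 0
    freq = freq_hz
    while freq < low_thz * 1e12:
        freq *= 2          # one octave up
        octaves += 1
    wavelength_nm = SPEED_OF_LIGHT / freq * 1e9
    return octaves, freq / 1e12, wavelength_nm


# A#4 sits one semitone above A4.
octaves, thz, nm = sound_to_light(note_frequency(1))
print(f"A#4 shifted up {octaves} octaves: {thz:.1f} THz, {nm:.0f} nm")
```

Under these assumptions, A#4 lands at roughly 585 nm after 40 octaves, which falls in the yellow part of the spectrum. A different choice of band edges or reference pitch would shift every result, so this is one possible mapping rather than a definitive one.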

Synesthesia

Researchers have sought a connection between sound and color for hundreds of years. Indeed, Isaac Newton’s famous seven-color spectrum (ROY G. BIV) is now widely considered incorrect, and it has emerged that indigo was included only because Newton felt so strongly that the number of colors in the spectrum had to match the number of notes in the Western musical scale.i

There is speculation that Newton had the condition known as synesthesia, in which two or more senses are conjoined: a color may have a particular smell, musical intervals may each have a specific taste, or letters or days of the week may have a corresponding color.ii According to the American Psychological Association, synesthesia affects one in every two thousand people.iii While synesthesia can take many forms, there are thousands of instances where individuals “hear” color; that is, they may see a specific piece of music or a chord or scale as being blue or green. Interestingly, the colors are not necessarily uniform across those whose synesthesia links sound to color: one may see D major as blue, another as green, red, or yellow.

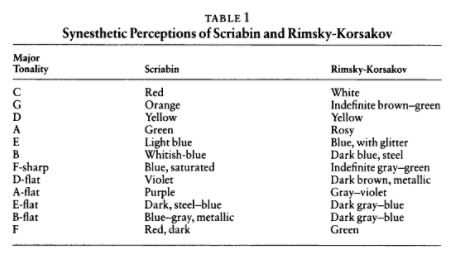

Vast amounts of research and analysis have already been done on composers with synesthesia and how the condition affected both their compositions and their approach toward writing. It is interesting how widely these composers’ perceptions vary. For example, Nikolai Rimsky-Korsakov and Alexander Scriabin had many long discussions about their synesthesia and the few similarities and many differences between the colors they heard,iv and as you can see, their associations diverge drastically, with the exception of D major being yellow.

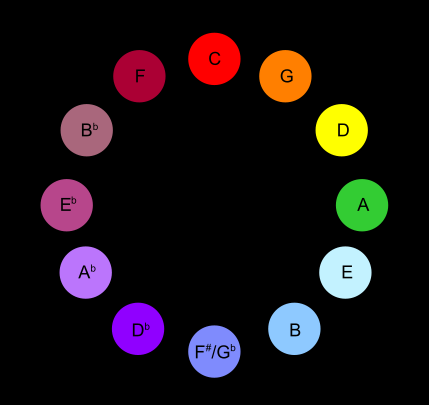

Interestingly, when we map Scriabin’s color associations onto the circle of fifths, we see a near-perfect color gradient:

Synesthesia is a fascinating condition and one that, for many, fuses color and sound. But what about the rest of us? The more I began to think about sound and color, the more I realized that this relationship is already a huge part of the tools we use daily.

Spectral Representations of Sound

It’s actually a rare day that you’re not interacting with color as it applies to sound. Whether you’re mixing, designing, or recording, there’s always a visual representation of what we hear beyond the waveforms themselves. The level meters in our DAWs, middleware and other tools use a green-to-red gradient to denote amplitude. I’m not sure why the green-to-red gradient is used so frequently to communicate sound information. Perhaps it’s just an easy way to see differences. Maybe it’s so ingrained cross-culturally because of similar conventions like traffic lights. Or perhaps, in the case of volume, red for clipping equates to the sound being too hot, but in that case I would expect a cool blue for quieter sounds. (Indeed, many applications let you adjust the colors to your own liking.) In addition to amplitude, color has also been used for decades in spectral analysis, which has long served as a handy way to visualize the frequency content of sound.
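
The green-to-red meter gradient described above can be sketched in a few lines. This is a minimal illustration, not how any particular DAW draws its meters: the function name, the -60 dBFS floor, and the choice to sweep the hue through HSV space are all assumptions made for the example.

```python
import colorsys


def meter_color(level_dbfs: float, floor_dbfs: float = -60.0) -> tuple[int, int, int]:
    """Map a level in dBFS to an RGB colour on a green-to-red gradient."""
    # Clamp to the metered range, then normalise to 0.0 (floor) .. 1.0 (full scale).
    clamped = max(floor_dbfs, min(0.0, level_dbfs))
    position = (clamped - floor_dbfs) / (0.0 - floor_dbfs)
    # In HSV, hue 1/3 is green and hue 0 is red, so sweep the hue downward.
    hue = (1.0 - position) / 3.0
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return round(r * 255), round(g * 255), round(b * 255)


for level in (-60.0, -30.0, -6.0, 0.0):
    print(level, meter_color(level))
```

Swapping the hue range (for example, starting from blue instead of green) would give the "cool blue for quieter sounds" variant with no other changes.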

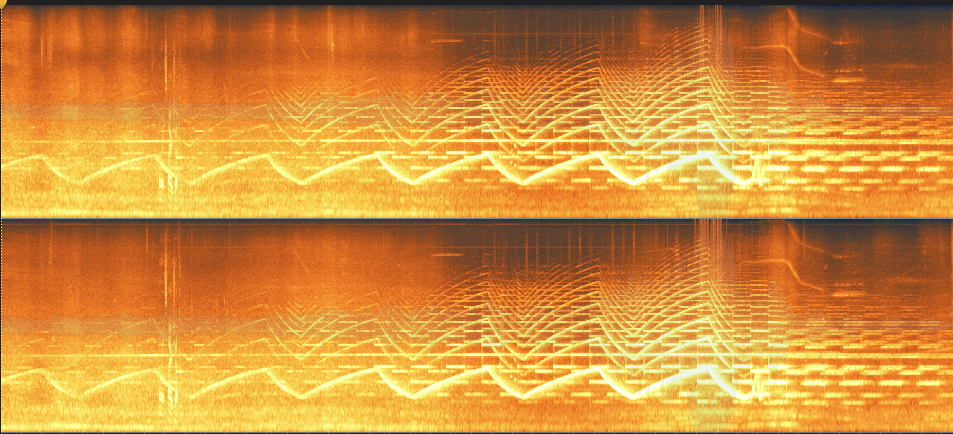

From creative tools like SpectraLayers and Iris, to audio cleanup and repair software like RX, color helps us read what’s going on in our sounds. For example, take this image of a waveform in RX of a fire engine passing by with sirens blaring:

We can actually see the fundamental and harmonics of the siren across the entire frequency spectrum. Naturally, spectral analysis is exceptionally useful for audio restoration and cleanup because you can see where possible problem areas arise, but it’s fascinating just how much audio information is conveyed via a spectral image of a waveform.

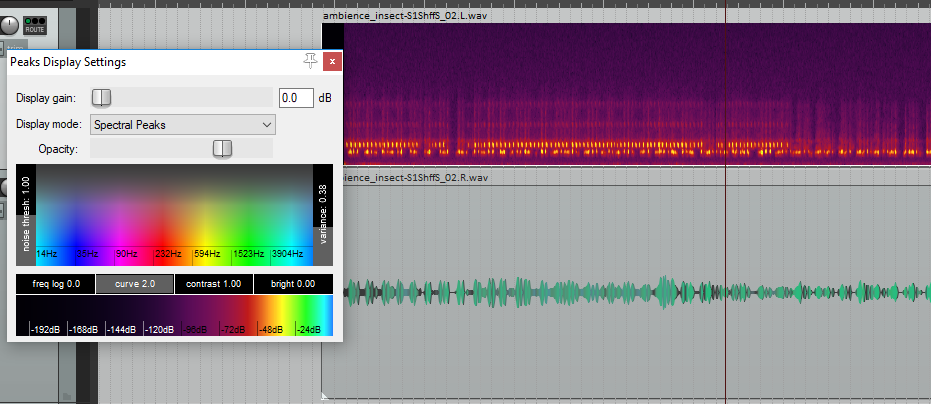

Reaper, the increasingly popular DAW, provides some unique tools to allow you to visualize and edit spectral information from within your tracks. Spectral Edits is akin to a lightweight version of RX allowing you to see a spectral graph of your sounds and make edits to this spectral content, while Spectral Peaks colorizes your waveforms based on the dominant frequency content of the sound across time. You can even shift the color gradient across the frequency spectrum, so if you feel low frequencies sound more red or blue, you can adjust the setting accordingly. Both of these spectral features give us yet more ways to see the audio information behind our waveforms.
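
The idea of coloring a waveform by its dominant frequency can be sketched as follows. This is not Reaper's implementation, which the article does not describe; it is a rough illustration that assumes a naive DFT over one short frame, a logarithmic 20 Hz to 20 kHz scale, and a hypothetical `hue_shift` parameter standing in for the adjustable gradient.

```python
import cmath
import colorsys
import math


def dominant_frequency(frame, sample_rate):
    """Return the frequency (Hz) of the strongest bin in a naive DFT of the frame."""
    n = len(frame)
    best_bin, best_mag = 1, 0.0
    for k in range(1, n // 2):  # skip DC, stop below Nyquist
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(frame))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * sample_rate / n


def frequency_to_rgb(freq_hz, low_hz=20.0, high_hz=20000.0, hue_shift=0.0):
    """Place a frequency on a log scale and map it to a hue (red low, violet high)."""
    clamped = max(low_hz, min(high_hz, freq_hz))
    position = math.log(clamped / low_hz) / math.log(high_hz / low_hz)
    # Using 0.75 of the hue wheel stops the top end from wrapping back to red.
    hue = (position * 0.75 + hue_shift) % 1.0
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return round(r * 255), round(g * 255), round(b * 255)


# A 1 kHz test tone: 256 samples at 8 kHz puts the tone exactly on a DFT bin.
sample_rate = 8000
frame = [math.sin(2 * math.pi * 1000 * i / sample_rate) for i in range(256)]
peak = dominant_frequency(frame, sample_rate)
print(peak, frequency_to_rgb(peak))
```

A real tool would use an FFT and slide the frame across the whole file, coloring each slice of the waveform in turn; the naive DFT here just keeps the sketch dependency-free.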

Synthesizers

Beyond our everyday use of color in analyzing sound, thinking about sound and color also reminded me of some synthesizers that take color information and convert it into sound.

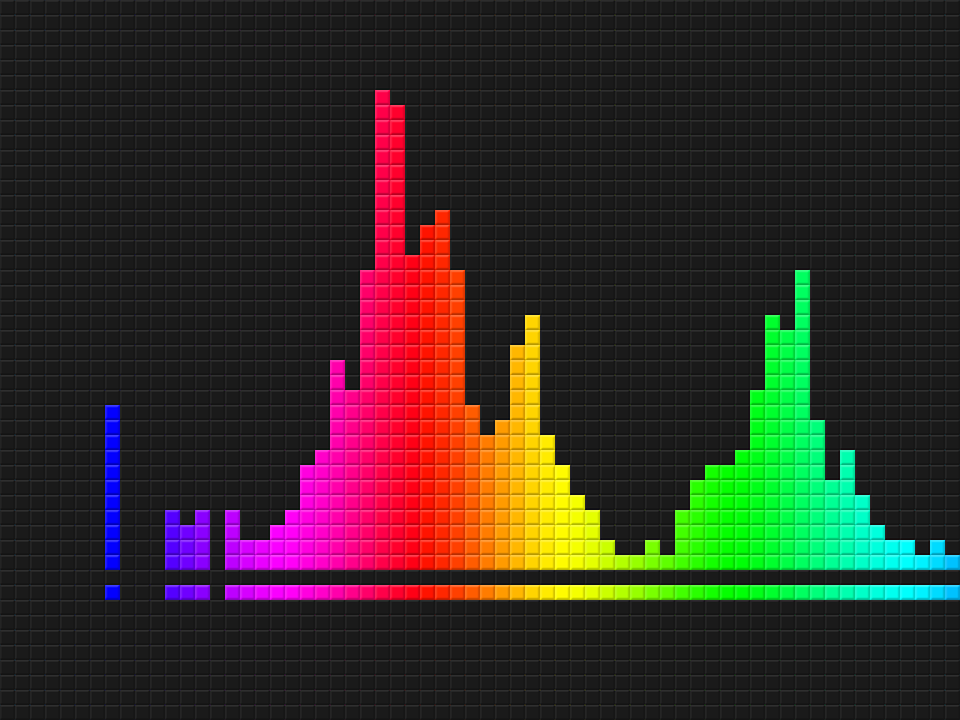

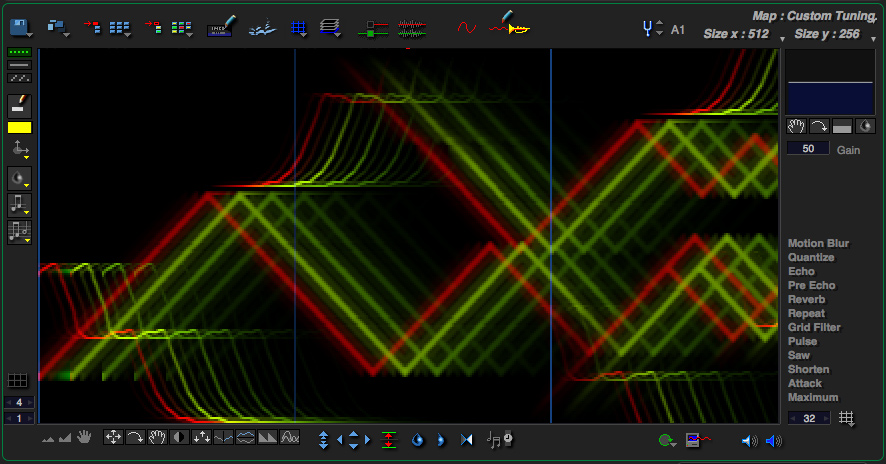

MetaSynth is still kicking for Mac and was (as far as I know) the original color-to-sound synthesizer. I remember first hearing about it and playing with it around 1999, and this novel approach to creating sound, by painting, kept me awestruck for hours. The concept behind MetaSynth was unusual but fairly intuitive: sounds are made of pixels. The brighter the pixel, the louder the sound. Color is used to describe panning: red is left, green is right, yellow is center. Painting is done on a 2-D grid with the x-axis representing time and the y-axis representing pitch. Here’s a decent representative image of what that looks like:

You can also do spectral analysis in MetaSynth on an image and convert it into a MetaSynth-friendly painting. So if you ever wanted to “hear” what your favorite cat picture on the internet sounded like, you’re in luck.

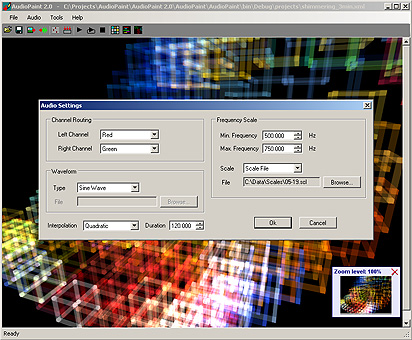

At the time Stillwind and I had our initial discussion, we sat next to a programmer who had created a similar program for the PC. Nicolas Fournel created AudioPaint in the early 2000s as his own experiment with audio and image. Like MetaSynth, AudioPaint analyzes pixels and transforms them into sound. As Fournel describes it, “A picture is actually processed as a big frequency / time grid. Each line of the picture is an oscillator, and the taller the picture is, the higher the frequency resolution is. While the vertical position of a pixel determines its frequency, its horizontal position corresponds to its time offset. By default, the color of a pixel is used to determine its pan, the red and green components controlling the amplitude of the left and right channels respectively (the brighter the color, the louder the sound), and the blue component is not used. The action of each component can be modified in the Routing section of the Audio Settings window. Starting with version 2.0, AudioPaint can also convert the color components into HSB values, and use hue, saturation and brightness instead of red, green and blue.”
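
Fournel's description translates fairly directly into code. The sketch below is not AudioPaint's actual implementation; it only follows the default routing in the quote (one sine oscillator per row, horizontal position as time, red driving the left channel and green the right, blue ignored). The function name, the logarithmic spacing of row frequencies between 200 Hz and 2 kHz, and the 50 ms column length are assumptions made for the example.

```python
import math


def render_image(pixels, sample_rate=8000, column_seconds=0.05,
                 low_hz=200.0, high_hz=2000.0):
    """Render rows of (r, g, b) pixels (0-255) into left/right sample lists.

    pixels[0] is the top row, so it gets the highest frequency.
    """
    height, width = len(pixels), len(pixels[0])
    # One oscillator per row, spaced logarithmically from high (top) to low (bottom).
    freqs = [high_hz * (low_hz / high_hz) ** (row / max(1, height - 1))
             for row in range(height)]
    samples_per_column = int(sample_rate * column_seconds)
    left, right = [], []
    for n in range(width * samples_per_column):
        column = n // samples_per_column      # horizontal position = time offset
        t = n / sample_rate
        left_sum = right_sum = 0.0
        for row in range(height):
            red, green, _blue = pixels[row][column]   # blue is unused by default
            wave = math.sin(2 * math.pi * freqs[row] * t)
            left_sum += red / 255 * wave      # brighter red = louder left channel
            right_sum += green / 255 * wave   # brighter green = louder right channel
        left.append(left_sum / height)        # scale so the sum stays within -1..1
        right.append(right_sum / height)
    return left, right


# A tiny 2x2 "painting": a high note on the left, then a low note on the right.
painting = [
    [(255, 0, 0), (0, 0, 0)],
    [(0, 0, 0), (0, 255, 0)],
]
left, right = render_image(painting)
print(len(left), max(left), max(right))
```

The resulting sample lists could be written to a stereo WAV file with Python's standard `wave` module; a yellow pixel, with equal red and green, would sound in both channels and so sit in the center.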

This article started as a walk down memory lane, but it quickly evolved into examining research and then opened my eyes to all the tools we use daily that incorporate color to help us understand sound better. It shouldn’t come as a surprise that if we have multiple senses, we ought to use them in concert to communicate information better. While synesthesia is an uncommon condition, techniques like the coloration of sound via spectral analysis do just that: they communicate what we’re hearing in a visual manner and allow us to ascertain more information about a sound than we could by hearing alone.

________________________________________

References

i. Peter Pesic, Music and the Making of Modern Science (MIT Press, 2014)

ii. Oliver Sacks, Musicophilia (Knopf, 2007), p.166

iii. Siri Carpenter, “Everyday fantasia: The world of synesthesia,” Monitor on Psychology, March 2001.