Matthew Hines is an audio technologist specializing in post production and audio mastering by day, and a keyboardist by night. Hailing from England, where he cut his teeth editing MIDEM award-winning classical recordings and dialogue, and handling audio restoration, for Nimbus Records, he has called the USA home for the last eight years. Freelancing as a sound designer and dialogue editor for independent film soon led him to a role as Audio/Multimedia Producer and Product Manager with iZotope, where he has spent the last five years working with a dedicated team to deliver key innovations such as the RX Post Production Suite and, most recently, VocalSynth, which was released today.

Designing Sound: iZotope excels at creating both creative and utilitarian plugins. How does the makeup of the company influence the kinds of products you develop and how do you ensure consistency?

Matthew Hines: Almost everyone at iZotope, regardless of their role, is either a musician, a freelance audio or post engineer, or at least a music and audio fidelity enthusiast.

With that many creatives in one place, it can be a lively office environment, and there’s no shortage of inventive ideas… even as the context switches between experimental, musical sound design tools and the more technical audio repair innovations you referenced.

Though on the surface building a feature dedicated to workflow enhancement may seem less exciting than the next great vocal effect (see VocalSynth, released at the time of writing), it may in fact be incredibly meaningful to our users. We find that coming into work and asking ourselves “What can we push ourselves to do that hasn’t been done before?” helps keep us fresh, both internally and in what we bring to the table.

As for consistency, well, it’s a team effort, but part of my daily challenge as a producer and manager of the products is to ensure that our ideas are channeled into places that make sense and tell a story from version to version, and perhaps also to establish the parameters within which we foster and encourage ideas in the first place. Which I suppose leads to your next question…

DS: How do you develop ideas for new products?

MH: It’s a never-ending, iterative process.

Of course, we’re always on the road, both physically and virtually, listening to and engaging with users and nonusers alike. From this we typically glean a mixture of feature requests and nonspecific desired outcomes, and we can then investigate both and research potential ideas or solutions.

As mentioned above, we’re all creative people, so we periodically hold a two-day event called The iZotope Open. In the run-up to the event, anyone at the company can submit an idea, which could be anything from a new product to an internal process improvement… there are no bad ideas, right? We then vote on what we’re most interested in and compelled to work on, divide into teams, and the hackathon commences. By the end, we all present our work, our proofs of concept. If a hackathon sounds like a fun but ultimately amateurish approach, it really isn’t… ideas from the iZotope Open have become reality! Most recently, we launched DDLY, an interesting threshold-based approach to delay, and the impetus to release that product came from the proof of concept shown at the Open.

DS: How would you describe VocalSynth and how did the original idea come about?

MH: Though it’s no secret that vocal processing, for both music and sound design, had been trending for a long time, we’d begun to notice an explosion of ways in which vocals were infiltrating productions. If you turn on the radio, you can’t help but notice that the vocal is not only the lead, but is also being used as a lead synth, or as a percussive or chordal element. I think the organic nature of the voice, even when cut up and processed any number of different ways, really lends itself to the human ear, even if the audience doesn’t always know “that sound was originally a human voice”. I mean, shoot, BB-8’s voice in Star Wars isn’t just a carefully curated set of synthesized sounds; it’s a carefully curated set of synthesized sounds run through a Talkbox in order to grab human inflections from Bill Hader’s and Ben Schwartz’s mouths. There’s an image. OK, back to the topic:

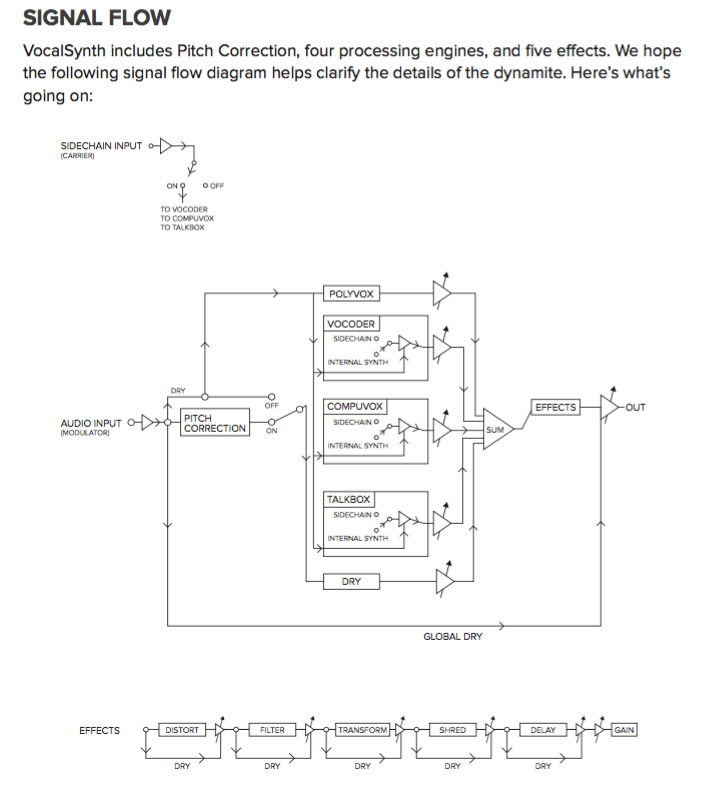

We’d noticed this trend, so we built a gigantic playlist of songs from the past 50 years that we felt were defined by signature vocal processing, and then asked ourselves “What tools would we need to get sounds x, y and z?” This inevitably large list was distilled into the Polyvox, Compuvox, Talkbox and Vocoder modules in VocalSynth, which, combined with the effects, we felt would be capable not only of accurately recreating sounds users like, but also of helping them go out and define something totally new.

Then we went and built the product… starting with hand sketches, then prototypes in Max/MSP, then ultimately, code. It’s pretty exciting, and though I’m inherently biased, I believe it’s a genuinely unique product that offers DSP no one else has ever been able to build, like an accurate Talkbox emulation that requires no tubing. There’s also a pretty cool Vocoder with three modes, and a Compuvox module that uses Linear Predictive Coding (LPC) processing for some super fun computerized vocal sounds.
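The interview only says that Compuvox uses Linear Predictive Coding, so what follows is a generic, textbook illustration of that idea, not iZotope’s DSP (which, as Matthew notes later, stays secret). LPC fits an all-pole filter, roughly the voice’s formant envelope, to each short frame of audio using the autocorrelation method and the Levinson-Durbin recursion; driving that filter with a different signal, such as a synth, is the classic LPC cross-synthesis behind many robotic and talkbox-like effects. All function names, the frame size, the model order and the residual-based gain below are illustrative assumptions, and the sketch deliberately omits the windowing and frame overlap a real plugin would need.

```python
import math


def autocorrelation(frame, max_lag):
    """Unnormalized autocorrelation r[0..max_lag] of one analysis frame."""
    n = len(frame)
    return [sum(frame[i] * frame[i + lag] for i in range(n - lag))
            for lag in range(max_lag + 1)]


def levinson_durbin(r, order):
    """Solve for LPC coefficients a[0..order] (a[0] == 1), where x[n] is
    predicted as -sum(a[j] * x[n - j]). Returns (a, residual_energy)."""
    a = [1.0] + [0.0] * order
    err = r[0]
    for i in range(1, order + 1):
        if err <= 0.0:  # silent or degenerate frame: leave remaining coefficients at 0
            break
        acc = r[i] + sum(a[j] * r[i - j] for j in range(1, i))
        k = -acc / err  # reflection coefficient for this stage
        prev = a[:]
        for j in range(1, i):
            a[j] = prev[j] + k * prev[i - j]
        a[i] = k
        err *= 1.0 - k * k
    return a, max(err, 0.0)


def lpc_residual(x, a):
    """Inverse (whitening) filter A(z): e[n] = x[n] + sum(a[j] * x[n - j])."""
    return [x[n] + sum(a[j] * x[n - j] for j in range(1, len(a)) if n >= j)
            for n in range(len(x))]


def all_pole_filter(excitation, a, gain=1.0):
    """Synthesis filter gain / A(z): y[n] = gain * e[n] - sum(a[j] * y[n - j])."""
    y = []
    for n, e in enumerate(excitation):
        acc = gain * e
        for j in range(1, len(a)):
            if n >= j:
                acc -= a[j] * y[n - j]
        y.append(acc)
    return y


def cross_synthesize(modulator, carrier, order=12, frame_size=512):
    """Impose the modulator's per-frame LPC envelope on a carrier signal.
    Filter state restarts every frame; a real design would window and overlap."""
    out = []
    for start in range(0, len(carrier), frame_size):
        voice = modulator[start:start + frame_size]
        carr = carrier[start:start + frame_size]
        if len(voice) <= order:  # not enough voice left to analyse: emit silence
            out.extend(0.0 for _ in carr)
            continue
        a, err = levinson_durbin(autocorrelation(voice, order), order)
        gain = math.sqrt(err / len(voice))  # RMS of the voice's prediction residual
        out.extend(all_pole_filter(carr, a, gain))
    return out
```

In use, `modulator` would be a dry vocal and `carrier` a buzzy synth line, both as lists of float samples at the same sample rate. A lower `order` gives a smoother, blurrier envelope; a higher one tracks the formants more closely.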

[soundcloud url="https://api.soundcloud.com/tracks/264411936" params="color=ff5500&auto_play=false&hide_related=false&show_comments=true&show_user=true&show_reposts=false" width="100%" height="166" iframe="true" /]

DS: How do you decide where and when to allocate company resources to a project, and what factors influence those decisions?

MH: We actually have teams dedicated to the innovation, maintenance and health of different product lines. Of course, from time to time we may help each other out, and some of us think about the broader context and work with multiple teams. Influencing factors could be anything from an idea we really want to release by a specific date to something external, such as Apple’s El Capitan update, which required us to start patching certain issues as soon as we’d received the final beta binary of the OS from Apple.

DS: What does the structure of the iZotope development team look like?

MH: Typically, each team will have a Product Manager (in VocalSynth’s case, me), a Product Designer, multiple developers and multiple quality assurance (QA) engineers.

There’s also a Research team. Research spends up to 50% of its time investigating new technologies and answering the “what can we do that’s never been done” question posed above. Research also has a representative on each product team, because its members spend a lot of time supporting that team’s work by delivering new algorithms. That said, we’ve also had product teams write their own DSP, and we’re quite fluid at times… everyone’s in this together.

DS: What phases of development do you go through when working on a product?

MH: There’s an ideation phase, which could involve anything from the iZotope Open, collaborative playlists, digesting research papers, competitive analysis and talking with users, and this basically results in one or more big ideas.

We then have to discuss the feasibility of each idea, prioritize accordingly, and if we’re aiming to hit a particular date, understand the scope of the work. The ultimate output of this is more definitive requirements from which people can build and test something tangible.

In theory, everyone is involved from the beginning of the process. The third phase combines building the product, such as VocalSynth, with releasing it, which involves making the instructional and promotional content.

[youtube]https://youtu.be/UYilNRZrl_0[/youtube]

DS: Do you suffer from feature creep? Are features or entire products often abandoned mid-development?

MH: Yes, we sometimes suffer from feature creep, but if we’ve done our job right the entire way through, this is minimal to none. Feature creep is dangerous, as it can very easily hurt rather than enhance a product. Last minute ideas may not always be fully thought through, and everyone, myself included, can be guilty of this!

Features, and sometimes entire products, are occasionally shelved. When this occurs, it’s usually because we’re not happy with the way they sound and don’t yet see a way forward. With a product like RX, I can say from personal experience that we’ve definitely researched audio problems to the point of having faders you could move to process a sound, only to discover we couldn’t solve the audio problem as well as we’d like, so we held the feature(s) back. Sometimes we’ll actually pose the question to our private beta testers and have them answer it for us.

DS: Were there any aspects of VocalSynth that were particularly challenging from a research standpoint?

MH: Yes, quite a few! My favorite challenge was the Talkbox. How do we build a Talkbox without a physical compression driver, tube and that specific user’s mouth and lips to shape the sound?

[soundcloud url="https://api.soundcloud.com/tracks/264411932" params="color=ff5500&auto_play=false&hide_related=false&show_comments=true&show_user=true&show_reposts=false" width="100%" height="166" iframe="true" /]

I can’t give away the secret sauce, but the team spent a lot of time solving this problem effectively, and it was the same minds behind much of our legendary RX processing!

I know no one ever reads the manual, but I would love it if they did! We outline in a lot more detail than I can fit here how and why we did what we did. Just sayin… RTFM!!!

DS: When researching, do you ever encounter issues related to existing IP or copyright?

MH: Of course, any company must avoid infringing on the patents or protected intellectual property of others. We have a legal team in house, and are extremely diligent about this. Do unto others, right?

If it can’t be avoided, sometimes the answer is to license. iZotope has licensed its audio algorithms to others, but not typically the other way around.

DS: Are your products influenced by hardware, whether as a general medium or specific models?

MH: Not really, no. We may model the behavior of a particular piece of hardware… our EMT 140 plate reverb is a great example (there is no single “best” EMT 140 to model, because no two plates sound the same!), but we don’t ever allow our design to become skeuomorphic or look like the hardware.

It actually pains me to see good-sounding products do this, because the vast majority of the time, controlling a skeuomorphic interface emulation with a mouse is just a horrible experience, one that can be solved so much more elegantly in digital, even if the sound is the same. I’ll descend from my soapbox now.

DS: How are you influenced by the nature of software as a development and product platform?

MH: Because of our domain and the work we do, I’m forever cursed to be hyper-focused on audio problems or inconsistencies, to the point where it’s hard to be immersed in a radio or TV show, film or video game that has issues. Non-audio friends don’t understand!

But that’s not exactly what you’re asking… although limitations do exist (CPU, memory, plugin SDKs, etc.), I would say that in many ways software gives us the freedom to explore rather than be influenced. There are certainly dependencies, particularly with audio software: as drivers, operating systems, audio interfaces, and other third-party codecs, tools or plugins interact with each other and change over time, things can and surely do break.

DS: What happens once an iZotope product is launched?

MH: We drink beer. We run a victory lap around the office, which is generally as loud and as obnoxious as possible. Sometimes it involves trumpets, vuvuzelas, whistles… whatever we have to hand. Did I mention we’re pretty creative?

We then respectfully and more quietly help out the web team by monitoring our website, checking for any performance errors or downtime a launch spike may introduce, and we’re also listening to the feedback from our users as it rolls in.

___

Thank you to Matthew for taking the time to speak with us. You can find out more about iZotope and VocalSynth (which has a 10-day free trial) at iZotope.com.

[…] Plugin Research and Development at iZotope: An Interview with Matthew Hines […]