I’ve been working on a game project on and off over the past year and a part of the design is of relevance to this month’s theme: animals. The gameplay revolves around creatures of various kinds: some good, some evil, some tiny, some large. I had to conjure up a vocalisation system that met the following technical and design criteria:

- Actions by the user would directly affect the state (and sound) of the creature

- The player must be able to perceive some sort of emotive response from the creature

- A modular system which would work for various creature types and characters

- With mobile devices being the primary target, it had to be simple, effective and portable

- Low CPU and memory usage, which translates to maximising the design capabilities of the system with little DSP and few samples

Design Considerations:

As with most people, I’ve found creature/animal vocalisations easier to design when using material that consists of either human or animal vocal sounds. It is easier for players (or the audience) to make visual and mental connections if they find something remotely similar to reality. It was important for me to make the resulting design as close as possible to what animals sound like.

I collected sounds that matched the above criteria and then shortlisted them based on recording quality (to ensure maximum quality after subjecting them to DSP mangling), character (sounds that created an image or an emotion in my mind) and frequency content (important when grouping sounds together).

‘Emotion’ is tough to parametrise or quantify. It is a loose descriptor and can mean different things to different people. Instead of going after specifics, I put down a list of questions to help me make decisions:

- What size does the sound convey? (the relative size of the animal)

- Is it irritating, menacing, timid or defensive? (dogs were a good reference for this)

- Does the sound convey speed and energy? (this is related to the previous question)

- Is there enough content to make the creature expressive and not boring? (player-creature encounters were expected to last a few minutes)

- Is the sound distinctive enough? (it is easy to get lost down the rabbit hole of perfection)

Technical Considerations:

I encourage constraints in my work, but I also enjoy having room to wriggle. We decided to use libpd (the ‘packaged’ version of Pure Data that can be used as a sound engine), because of its flexibility and rapid prototyping nature. It makes it easier to implement non-standard ideas and workflows, which was what this project needed.

Performance and hardware constraints are important with content designed for mobile devices. Having complete control of the audio system gave me the opportunity to experiment with a variety of techniques and closely link the design and technical worlds. It made no sense to treat them as separate concepts. In my head, technical design is a lot like mixing a film: a combination of careful technical and creative choices to do justice to the project and medium.

Playground:

Over the past few years I’ve found myself getting comfortable with a brute-force design approach. I spend a large portion of my time on a project throwing ideas, sounds and images together until it all begins to make sense. It can be quite rewarding, once past the initial period of frustrating results.

Before constructing complex DSP techniques or piling on TheNextBestPlugin I try to maximise output by playing around with:

- Pitch: Realtime pitch changes. This could be either varispeed (pitch affects time) or a time-stretch-like technique. In most cases I prefer varispeed because it also changes the amount of energy in addition to the pitch (more like reality).

- Amplitude: Simple amplitude analyses or automation.

- Time: Reorganising content, trimming samples, granulation or time stretch

- Frequency: Low pass, high pass or bandpass filters
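To make the varispeed idea above concrete, here is a minimal Python sketch (an illustration only, not the Pure Data patch used in the game). It resamples a buffer with linear interpolation, so raising the pitch also shortens the sound. The function names `varispeed` and `semitones_to_ratio` are hypothetical.

```python
def semitones_to_ratio(semitones):
    """Convert a pitch offset in semitones to a playback-speed ratio."""
    return 2.0 ** (semitones / 12.0)


def varispeed(samples, ratio):
    """Resample `samples` at `ratio` times the original speed.

    ratio > 1 raises the pitch and shortens the sound; ratio < 1 lowers
    the pitch and lengthens it, much like changing tape speed.
    Linear interpolation is used to read between samples.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    if len(samples) < 2:
        return list(samples)
    out = []
    last = len(samples) - 1
    pos = 0.0  # fractional read position in the source buffer
    while pos <= last:
        i = int(pos)
        frac = pos - i
        nxt = samples[min(i + 1, last)]
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
        pos += ratio
    return out
```

Calling `varispeed(buffer, semitones_to_ratio(12))` plays the buffer an octave up in roughly half the time, which is the "pitch affects time" behaviour described above.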

Solution:

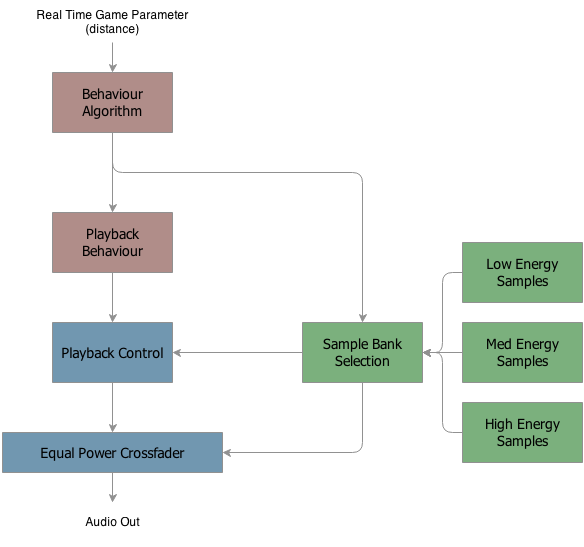

A few days of experimentation resulted in this system:

It consists of three components:

- Behaviour/Character control: This section receives input from the game engine and controls the behaviour of the creature, by manipulating playback controls and sample assignment. In this case, it receives the distance of the player from the creature/animal (could potentially be any other game parameter). As the player gets closer to the creature, it gets more agitated or excited and vice-versa.

  - Behaviour Algorithm: This is the ‘heart’ of the whole system. It controls the behaviour of the creature and can be completely randomised or controlled. Each creature can be ‘assigned’ a different behaviour.

  - Playback behaviour: This translates the output of the behaviour algorithm through a set of transfer functions that are used to control the type of samples played back and their pitch, rate and amplitude.

- Sample Banks and Playback: The very first implementation of this idea used a single sample bank and didn’t result in much flexibility. Instead, I decided to use three different banks of low, medium and high energy samples. These samples work best if they are segregated based on frequency content and vocalisation character. Each sample bank needs a total of 10 seconds worth of samples to work effectively. So, each creature character just needs about 30 seconds worth of mono audio data!

- Equal Power Crossfader: The behaviour algorithm controls the sample bank selection and uses a short equal power crossfader to switch between sample banks during playback
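The chain described in the list above (game parameter, then behaviour, then bank selection and crossfade) can be sketched roughly as follows. This is a Python illustration under stated assumptions, not the actual libpd patch: the maximum distance, the bank thresholds and the pitch, rate and amplitude ranges are all invented for the example, and every function name is hypothetical. Only the equal-power gain law (cosine and sine of a quarter-circle angle) is a standard technique.

```python
import math


def distance_to_energy(distance, max_distance=20.0):
    """Assumed transfer function: a closer player gives higher energy (0..1)."""
    clamped = min(max(distance, 0.0), max_distance)
    return 1.0 - clamped / max_distance


def select_bank(energy):
    """Pick one of the three sample banks (thresholds are assumptions)."""
    if energy < 1.0 / 3.0:
        return "low"
    if energy < 2.0 / 3.0:
        return "medium"
    return "high"


def playback_params(energy):
    """Map energy to pitch ratio, trigger rate and amplitude (assumed ranges)."""
    return {
        "pitch_ratio": 0.8 + 0.6 * energy,
        "rate_hz": 0.5 + 3.5 * energy,
        "amplitude": 0.4 + 0.6 * energy,
    }


def equal_power_gains(progress):
    """Return (outgoing, incoming) gains for crossfade progress in 0..1.

    The squared gains always sum to 1, so perceived loudness stays
    roughly constant while switching between banks.
    """
    p = min(max(progress, 0.0), 1.0)
    angle = p * math.pi / 2.0
    return math.cos(angle), math.sin(angle)
```

With these assumed numbers, a player 10 units away gives an energy of 0.5 and selects the medium bank. Halfway through a bank switch, both banks play at a gain of about 0.707.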

Here’s what the system sounds like, with a mix of human and animal sounds loaded into the bank and a gradual decrease in the amount of energy:

Extending the system:

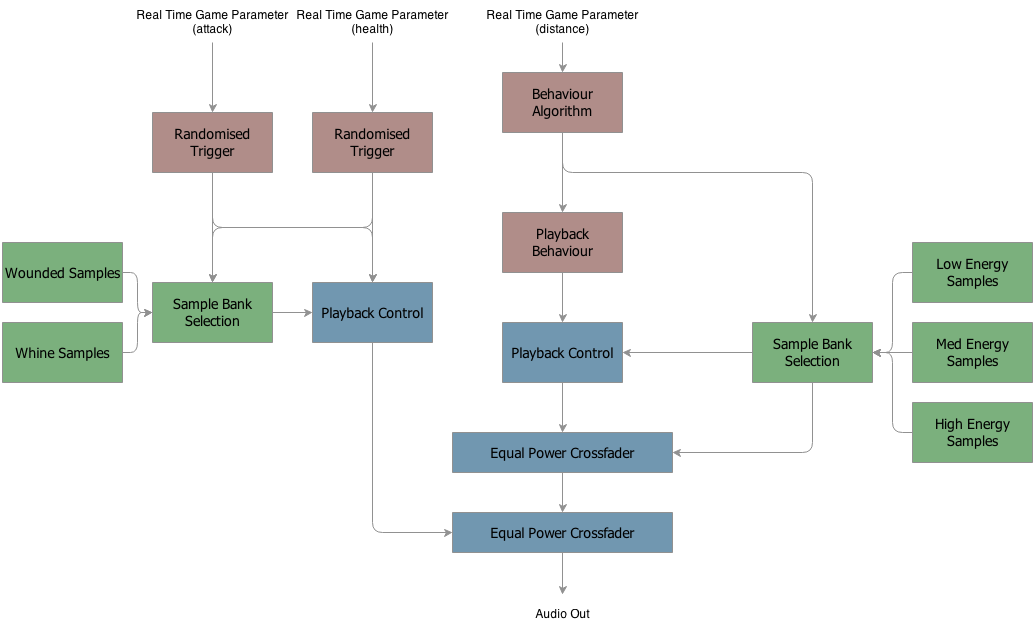

With a few tweaks, the system worked well in the game with about five different creature character types. To increase the level of interaction, I decided to add two more controls: ‘health’ and ‘attack’, as the evil creatures could be attacked and destroyed.

- Creature health: If the creature has 100% health, there is no difference in how the system functions. As the creature begins to lose health, the system plays back random samples from the ‘Wounded Samples’ sound bank. The probability of playback of these random samples is directly proportional to the loss of health, i.e., a lower percentage of health increases the chance of playback of these samples.

- Creature attack: Every successful attack on the creature by the player triggers playback of one of the sounds in the ‘Whine Samples’ sound bank.
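A rough Python sketch of the two controls above is shown below. It assumes health is normalised to 0..1 and that the proportionality constant is 1, so the chance of a wounded sample equals the fraction of health lost. The function names and sample names are hypothetical.

```python
import random


def wounded_probability(health):
    """Chance of a 'wounded' sample, proportional to lost health (0..1)."""
    h = min(max(health, 0.0), 1.0)
    return 1.0 - h  # assumed constant of proportionality: 1


def choose_sample(health, normal_bank, wounded_bank, rng=random):
    """Pick the next sample, occasionally drawing from the wounded bank."""
    if wounded_bank and rng.random() < wounded_probability(health):
        return rng.choice(wounded_bank)
    return rng.choice(normal_bank)


def on_attack(whine_bank, rng=random):
    """Every successful attack plays one random sample from the whine bank."""
    return rng.choice(whine_bank)
```

At full health the wounded bank is never used. At 25% health, about three out of four triggered samples would come from it.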

Here’s a clip of a subdued creature with a slightly low health:

And a clip of the creature being attacked:

Further Work:

In its current form, this system works quite well in the game. It includes enough flexibility and every creature character just needs about 30-40 seconds’ worth of audio data loaded into RAM (which equates to a few hundred kilobytes if compressed). The system doesn’t include any complicated processes like multi-voice granulation or convolution and takes up a fraction of CPU resources.
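As a back-of-the-envelope check on the memory figure, the short Python sketch below estimates the footprint of 30 to 40 seconds of mono audio. The sample rate (44.1 kHz), bit depth (16-bit) and compressed bitrate (64 kbps) are assumptions for illustration. The actual formats used in the game are not stated.

```python
def pcm_bytes(seconds, sample_rate=44100, bit_depth=16, channels=1):
    """Size of uncompressed PCM audio in bytes (assumed format)."""
    return int(seconds * sample_rate * channels * bit_depth // 8)


def compressed_bytes(seconds, bitrate_kbps=64):
    """Approximate size of constant-bitrate compressed audio in bytes."""
    return int(seconds * bitrate_kbps * 1000 // 8)


for seconds in (30, 40):
    print(seconds, "s:", pcm_bytes(seconds), "bytes PCM,",
          compressed_bytes(seconds), "bytes compressed")
```

Under these assumptions, 30 seconds is roughly 2.6 MB as raw PCM and 240 kB compressed, and 40 seconds is about 320 kB compressed, which is consistent with "a few hundred kilobytes".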

Future work will include more options in the behaviour control (controlled randomness, patterns, etc.), possibly more sample banks for finer control and, more importantly, using the system to create bursts of audio to construct words and sentences of gibberish.

Creature voice creation is the most difficult kind of sound design. I have enormous respect for anyone who gives it a serious try, and this is obviously a serious try. I’ve spent many years fabricating creature voices, and regardless of the methodology used to get there, in the end all that matters is what the results sound like. The main trick with creature voices is to make them sound organic, real, and natural, while also giving them an exotic twist that makes them seem unique and in some sense unfamiliar. There are very nice moments in this sequence, but to me most of it sounds like a perfectly good set of human voice samples that have been made to sound artificial. The most frequent criticism sound designers hear from directors is that a given piece of work seems unnatural, inorganic, synthesized, electronic, etc. In my opinion too much of this sequence falls into that category.

with deep respect,

Randy

Thank you Randy. I hugely appreciate you taking time to leave your thoughts. Quite often I find myself getting caught up in the process rather than the outcome and you have raised a *very* valid point.

Continuing the struggle,

Varun

Very interesting work, have you considered how this system might also be applicable to other scenarios? I’m thinking of stochastic sounds such as adding quasi-random variation to water, wind, ambient birdsong and the like based off of defined parameters. I did an installation work using Max MSP (similar to PD) that selected between two sample pools, natural sounds and manmade and depending on the level of noise in the space created by those in it, it would switch between them creating an increasingly agitated or calming space.

You might be interested in this article regarding some of the work done within Advanced Warfighter 2, a rare case where an audio system, not dissimilar to what you’ve created, actually controls visual components, in this case, fire.

https://designingsound.org/2010/10/audio-implementation-greats-9-ghost-recon-advanced-warfighter-2-multiplayer-dynamic-wind-system/

Audio is usually ‘reactive’ in games, systems like that and yours offer the opportunity to pass data to visual systems as opposed to just handling the playback of audio. Very exciting.

Getting audio and video systems to talk to each other in games is relatively straightforward. Usually it is just about the different departments collaborating, as mentioned in that article.

I haven’t tried this system for other kinds of sounds and it would be difficult (read impossible) to make it work for everything. In my experience wind can be easier to get away with and a lot of it can be accomplished by having good models that control a few filters. Water is difficult, even when recording it with microphones!

It depends on the context though. The perceived quality of a sound changes depending on what it is used for and when.

Very interesting project. The best creature voices or sound always somehow balance the familiar with the unfamiliar/exotic – or so it seems to me. The insectoid aliens in District 9 struck me as taking that balance about as far toward the exotic as you can get – while still successfully communicating relatable emotion. That was excellent work to my ears. But then, that was a feature film with a finite number of script “lines” to be performed in alien language – not a game environment where players may crave much greater variation.

@Randy: Your use of the word artificial made me think of R2D2: Completely artificial sound; not a trace of human voice there – yet we love it (because it works).

Great post – can’t wait to hear more about this project, Varun!

Actually there are human voice elements in R2D2. Ben Burtt used lots of humming and cooing in addition to the synthetic elements. I hope it goes without saying that when I refer to “creature” voices I mean “organic” creatures, not robots. R2D2 is clearly a robot, so there is no burden to make an entity like R2 sound “natural.”

Randy

I’m just starting out having to soon do some creature voices for a computer game. I struggle with it sounding great and authentic. You seem to have put much thought into it and even built a system to intelligently select samples you made.

I won’t have that luxury as I will only be able to trigger sounds for specific events, statically. Meaning: creature was hit, play a random sound of set “hit”. Creature dies, play sound of set “death”… Things like that.

But I tried to spend some time and see how others did creature voices. A lot of samples, also commercial samples seem to be drastically slowed down human voices and screams. Often mixed with some lion growls and others.

Then I also heard about granular techniques to get more freedom than with static recordings that are just slowed down.

Other techniques involve the use of vocoders that are able to impose the performance of one wave onto another. Does anybody know of vocoders that do this well? I experimented with many but it seems I didn’t really understand them well enough yet to be able to predict the result. Most of the time it’s disappointing, I guess because of the source material?

Also a good idea is just envelope following. So one has a monster sound, but you can control it with your own voice. Make a loud voice sound, and the volume envelope is transferred to another sound, maybe a steady lion growl. So you have control over how violent and loud the lion growl is, instead of hacking together many different samples.

A tool that I play with and I’m impressed by is by sound designer Orfeas Boteas (dehumaniser.com). It unites many of the above techniques and adds some synthesised voice sounds to create really great sounding creatures. It was written in Max. Maybe worth a look.

I haven’t found many useful articles about sound design techniques for creature voices. Does anybody have other sources? I would be interested to learn more about it, like the techniques used in Orfeas’ tool.

Varun,

This technique would be an incredible achievement, but I feel like there’s nothing stronger than the human ear.

We have the ability to audition sounds and, as animals ourselves, sense the emotion that our animal vocalization sfx convey.

We can tell if a pig squeal is more ‘angry’ than ‘scared’ (or vice versa) through our instincts.

And a great ear/editor will be able to mix and mesh these vocalizations on a timeline in a way that could create something genuine, creative, and iconic.

Creature sound design is the most challenging type of design I’ve encountered in my career… But it is a fascinating topic!

Sorry for being so late on this thread (almost a year and a half) but I’d love to continue to discuss it!

E-mail me at [email protected].

Best,

Beau

Hey Beau,

Getting a computer to replicate human choices is difficult, even for simple sound design systems like these, let alone larger AI systems. With more time dedicated to it, this system can be made better (more natural, less mechanical), but definitely cannot replace a human and the happy accidents of creative sound design. Bear in mind this system was built for a computing device of limited technical capability.

With interactive mediums we tend to train/force computer programs to make decisions that sound good. As Randy pointed out, this system is still very far from sounding natural, let alone recognisable and iconic. The control we gain on a timeline is lost when creating a system with some amount of intelligence.

Varun