When embarking on a sequel to one of the premier tactical shooters of the current generation, the audio team at Red Storm Entertainment, a division of Ubisoft Entertainment, knew that they needed to keep shaping the player experience by further conveying the impression of a reactive and tangible world in Ghost Recon Advanced Warfighter 2 Multiplayer. With a constant focus on creating dynamic, real-time, multi-channel systems that communicate information through sound, they helped create a world that visually reacts to sound and further anchors the player in it. Their hard work won them the 2008 GANG “Innovation in Audio” award for the GRAW2 Multiplayer implementation of an audio system that turns sound waves into a physical force used to drive graphics and physics simulations at runtime.

The Audio Implementation Greats series continues this month with an in-depth look at the technology and sound design methodologies that went into realizing their creative vision. We’re lucky to have original Audio Lead/Senior Engineer Jeff Wesevich and Audio Lead/Senior Sound Designer Justin Drust lay out the detailed history in what promises to be the most extensive overview yet of a widely discussed feat of implementation greatness: the Ghost Recon Advanced Warfighter 2 Multiplayer Wind System.

Hang on to your hats, and catch the breeze!

The Set Up

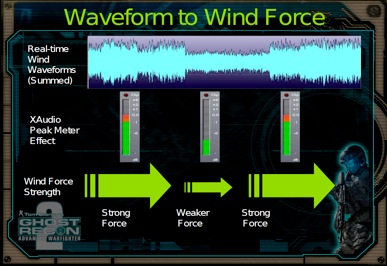

JW: “I think the thing I like the most about the wind effects modeling system we came up with for GRAW2 MP is that the idea behind it is so simple that everyone gets it on the first take. To wit: the amplitude of the currently playing wind wav files is converted into a force that can push particle systems around, or into a force that a Havok physics simulation can work with. We add a semi-random direction to the force to keep things looking natural, but that’s the whole shootin’ match in just two sentences. It is astonishingly simple to create complex, difficult solutions, but the simple ones? Man, that takes work.
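The two-sentence idea can be sketched in a few lines of Python. This is a minimal illustration, not Red Storm's code: the function names `rms` and `wind_force`, the linear RMS-to-force mapping, `max_force`, and the ±20° jitter are all assumptions chosen for clarity.

```python
import math
import random

def rms(samples):
    """Root-mean-square level of a block of PCM samples in [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def wind_force(samples, base_heading_deg, max_force=10.0, jitter_deg=20.0, rng=random):
    """Convert the loudness of the current wind audio into a 2D force vector.

    Hypothetical mapping: magnitude scales linearly with RMS (clamped to 1.0),
    and the heading is the level's base wind direction plus a random offset
    of up to +/- jitter_deg so the motion doesn't look mechanical.
    """
    magnitude = max_force * min(rms(samples), 1.0)
    heading = math.radians(base_heading_deg + rng.uniform(-jitter_deg, jitter_deg))
    return (magnitude * math.cos(heading), magnitude * math.sin(heading))
```

Calling `wind_force` once per audio block (or per frame) yields a vector that a particle or physics system can consume; silence produces a zero force regardless of the random heading.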

The genesis of the system came from a specific multiplayer map. In this scenario, a C-5A Galaxy (one of the largest airplanes ever made) has crashed in the middle of a huge thunderstorm, and burning chunks of the thing are splattered the length of the map. Great idea, but with that much intense fire and smoke spread out across 1600 sq. m., we quickly exhausted our basic flame and “burning stuff” audio sources. And truth be told, the particle guys were having their own problems with the fairly strict limits on the number of different particle systems they could deploy. Things were looking and sounding pretty monotonous instead of dangerous and exciting.

We knew we had a basic output meter provided as part of the X360 XDK, and we knew that the sound of the flames would have to be influenced by the howling wind, so our first cut was simply to attach a Matt-McCallus-optimized version of the RMS meter to the wind mix bins and use that output to change the volume of our flame samples. Not bad, but not ready for prime time either. We then created a type of emitter that would allow us to layer specific fire effects at a given place, triggering their playback and level based on the wind’s RMS sum. This allowed us to mate a big whoosh with an accompanying wind gust. Once the sound designers started playing around with this, things got interesting.
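For readers who want to see what an RMS meter feeding a flame emitter might look like, here is a hedged sketch. The X360 XDK meter and Matt McCallus's optimized version are not shown in the article, so this computes RMS directly; `rms_dbfs`, `flame_gain`, `should_fire_whoosh`, and all of the dB thresholds are hypothetical placeholders.

```python
import math

def rms_dbfs(samples, floor_db=-96.0):
    """RMS level in dB relative to a full-scale RMS of 1.0, clamped to floor_db."""
    if not samples:
        return floor_db
    r = math.sqrt(sum(s * s for s in samples) / len(samples))
    if r <= 0.0:
        return floor_db
    return max(20.0 * math.log10(r), floor_db)

def flame_gain(wind_db, quiet_db=-40.0, loud_db=-6.0, min_gain=0.3, max_gain=1.0):
    """Map the wind level linearly onto a gain for the flame loop (placeholder ranges)."""
    t = (wind_db - quiet_db) / (loud_db - quiet_db)
    t = min(max(t, 0.0), 1.0)
    return min_gain + t * (max_gain - min_gain)

def should_fire_whoosh(prev_db, cur_db, threshold_db=-12.0):
    """Trigger a layered fire 'whoosh' only when the wind rises through the threshold."""
    return prev_db < threshold_db <= cur_db
```

Detecting an upward crossing, rather than simply "above threshold", is one way to keep a single gust from retriggering the whoosh every frame; the article doesn't say how the original emitter handled this.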

We were making great progress, but now we had audio that didn’t sound at all like the static, looping particle systems sprouting all over the map. Well… could we change the flames and smoke the same way we were changing the accompanying sounds? Sure enough, there was a parameter in our generic particle system that allowed for wind deformation, but of course it hadn’t been used before, and even the Art Director couldn’t be sure whether the systems had been constructed with the parameter in mind. Fortunately, the first couple we tried had been built that way, and it proved very simple to take the wind amplitude and update the particle systems in real time. At that point we added the direction component, tweaked it, and suddenly we had huge gusts blowing flames all over the place.
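A sketch of how a wind force might perturb particles each frame, assuming a simple per-particle velocity model. The `Particle` class, the `deformation` weight (standing in for the particle system's wind-deformation parameter), and the linear drag term are illustrative assumptions; the actual particle system's API is not described.

```python
from dataclasses import dataclass

@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float

def apply_wind(particles, force, dt, deformation=1.0, drag=0.5):
    """Advance particles one frame under a wind force (explicit Euler step).

    `deformation` scales how strongly this system responds to wind (0 = immune);
    `drag` pulls velocity back toward zero so smoke settles when the wind drops.
    """
    fx, fy = force
    for p in particles:
        p.vx += (deformation * fx - drag * p.vx) * dt
        p.vy += (deformation * fy - drag * p.vy) * dt
        p.x += p.vx * dt
        p.y += p.vy * dt
```

Under a constant force the velocity approaches `deformation * force / drag`, so the drag term doubles as a cap on how far a gust can push the plume.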

We were really excited at this point, but even I was concerned about how this would go over with the artists: what do you mean everything will play back differently in-game than it does in our tools? How do we debug our work, etc., etc.? Surprisingly enough, they loved it. Unbeknownst to us in our little corner of the world, the particle guys had been struggling big-time with issues like short loops and repetition, and their only real tool to combat this was to add more particles or systems to a given effect to make it appear to move somewhat randomly, which of course hurt performance. (This should sound familiar to the audio guys out there.) And then suddenly someone shows up at their doorstep with a gizmo that dynamically perturbs their systems, in a naturalistic way, for free. We weren’t a threat; we were problem solvers.”

GRAW 2 MP Dynamic Wind System

[vimeo]http://vimeo.com/15822665[/vimeo]

The Execution

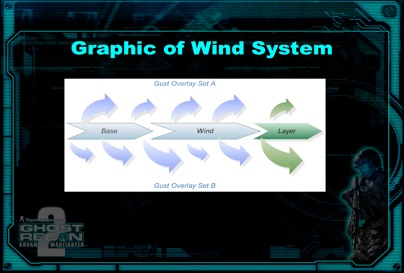

JD: “Building on what Jeff has already explained, our environmental audio design contained three base wind layers: Breezy (the lowest), Windy, and Gusty (the highest). These wind levels were set up as zones within the mission space; they could be crossfaded with each other and respond to elevation, so climbing a mountain transitioned you to a higher wind intensity. Each base wind layer also had three overlay layers that would play back randomly to enhance the base. The base layers were designed as constant 2D looping stereo files, while the overlays (also 2D, looping, and stereo) contained gusts and swells, providing a realistic alternating wind experience.
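One plausible way to crossfade the three base layers by elevation is an equal-power fade between adjacent layers, sketched below. The article doesn't say which crossfade curve was used or how zones and elevation were combined; this sketch handles elevation only, and `layer_gains`, `low_m`, and `high_m` are hypothetical.

```python
import math

LAYERS = ("Breezy", "Windy", "Gusty")

def layer_gains(elevation_m, low_m=0.0, high_m=100.0):
    """Equal-power crossfade across the three base wind loops by elevation.

    Elevation is normalized to 0..2 across the layer list; only the two
    neighbouring layers are audible, with cos/sin gains so total power stays 1.
    """
    t = (elevation_m - low_m) / (high_m - low_m)
    t = min(max(t, 0.0), 1.0) * (len(LAYERS) - 1)
    i = min(int(t), len(LAYERS) - 2)          # index of the lower neighbouring layer
    frac = t - i
    gains = [0.0] * len(LAYERS)
    gains[i] = math.cos(frac * math.pi / 2)
    gains[i + 1] = math.sin(frac * math.pi / 2)
    return dict(zip(LAYERS, gains))
```

Equal-power curves avoid the perceived dip in loudness that a linear crossfade produces halfway between two uncorrelated loops.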

These varied wind intensities produced different RMS levels during playback, which became the fuel for the dynamic wind system. In pre-production we already knew that specific maps would call for higher wind intensities (such as the Crash Site level, which mimics an intense windstorm and features the crashed C-5A airplane mentioned above), so we could plan each level's wind intensity in advance.

As Jeff explained, we wanted to harness the wind to do more with actual in-game audio emitters and help bring the once-stagnant multiplayer audio environments to life. For this we created a special emitter class that could be triggered to play or not play based on the RMS output of the wind. To achieve this, each “wind emitter” mapped specific RMS amplitudes to specific playback time intervals: increased wind RMS = increased playback frequency. These settings were made by the sound designer on a per-emitter basis within the mission space:

Example: -6 dB RMS = playback time interval of every 2–6 seconds (the stronger the wind, the more often the emitter plays)

Example: -40 dB RMS = playback time interval of every 20–30 seconds (the weaker the wind, the less often the emitter plays)
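The two example settings can be read as anchor points on a curve. The sketch below assumes straight-line interpolation between them and clamping outside them; the article gives only the two examples, so the interpolation rule and the names `interval_range` and `next_trigger_delay` are hypothetical.

```python
import random

def interval_range(rms_db, loud=(-6.0, 2.0, 6.0), quiet=(-40.0, 20.0, 30.0)):
    """Interpolate a (min_s, max_s) retrigger window between two designer anchors.

    Each anchor is (rms_db, min_seconds, max_seconds). Levels louder than the
    loud anchor or quieter than the quiet anchor are clamped to that anchor.
    """
    loud_db, loud_lo, loud_hi = loud
    quiet_db, quiet_lo, quiet_hi = quiet
    t = (rms_db - quiet_db) / (loud_db - quiet_db)   # 0 at quiet anchor, 1 at loud anchor
    t = min(max(t, 0.0), 1.0)
    lo = quiet_lo + t * (loud_lo - quiet_lo)
    hi = quiet_hi + t * (loud_hi - quiet_hi)
    return lo, hi

def next_trigger_delay(rms_db, rng=random):
    """Pick a random delay (seconds) before the emitter plays its next asset."""
    lo, hi = interval_range(rms_db)
    return rng.uniform(lo, hi)
```

Halfway between the anchors (-23 dB), this gives a window of 11–18 seconds.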

Each wind emitter placed in the world had properties like those shown above that could be set based on the structure, location, and type of sound asset. This way we could simulate the low creak of a building settling after a large wind gust by setting its emitter to trigger infrequently when the wind was at its lowest amplitude. When the wind picked up, an entirely different emitter, such as a rattling shutter or door, could be triggered more frequently during the higher wind amplitudes, creating the effect of the wind physically moving the shutter or door in response to the gusts.

So now we were not only adjusting the playback frequency to match the wind intensity, but also alternating between sound assets to provide even more immersion.

These audio emitters would then be placed in specific areas of the maps or structures to enhance the environmental audio of specific features. For example, a building could have two or three separate wind emitters (typically with 4 to 8 assets each) placed on or around it, containing different audio content based on three levels of intensity: High, Medium, and Low.

High emitter content contained more action within the sound assets: heavy creaks, rattles, etc. Low emitter content contained low-end groans and settling sounds, while Medium was the glue that bridged the gap between the extreme high-amplitude sounds and the lower-amplitude sounds. This system resulted in a very immersive and dynamic atmosphere, all based on the RMS output of the wind assets. When the wind gained or lost intensity, so did the affected audio emitters. (We also had a special-case “after the fact” emitter that could be triggered to play after a lull in the wind; it was typically used on very large structures.)”
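Tier selection and the “after the fact” emitter can be sketched as a threshold check plus a small state machine. How the original system decided which tier was eligible, or what counted as a lull, isn't described; the `Tier` enum, `active_tier`, `LullDetector`, and every dB threshold below are assumptions.

```python
from enum import Enum

class Tier(Enum):
    LOW = "Low"
    MEDIUM = "Medium"
    HIGH = "High"

def active_tier(rms_db, medium_db=-24.0, high_db=-12.0):
    """Choose which emitter tier is eligible to play at the current wind level."""
    if rms_db >= high_db:
        return Tier.HIGH
    if rms_db >= medium_db:
        return Tier.MEDIUM
    return Tier.LOW

class LullDetector:
    """Signals once when the wind drops into a lull after a strong gust.

    The detector arms when the level reaches gust_db and fires (returns True)
    the first time the level then falls to lull_db or below, then disarms.
    """
    def __init__(self, gust_db=-12.0, lull_db=-30.0):
        self.gust_db = gust_db
        self.lull_db = lull_db
        self.armed = False

    def update(self, rms_db):
        if rms_db >= self.gust_db:
            self.armed = True
            return False
        if self.armed and rms_db <= self.lull_db:
            self.armed = False
            return True
        return False
```

Requiring a gust before the lull keeps a large structure from groaning on every quiet stretch, which matches the idea of a sound that settles “after the fact.”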

The Sound Design

JD: “As you can imagine, a large number of audio assets had to be created for this system to work properly. Each emitter group alone would typically contain anywhere from 4 to as many as 16 assets to add more randomization to the system. We spent a lot of time recording and editing sounds that would work within these levels, everything from the obvious fences, shutters, doors, and dirt to large church bells. Once all of the assets were recorded and implemented in our audio engine, it was time to create the emitters and set their properties. A great deal of time was spent auditioning the levels during development to make sure the playback intervals were set properly, both to maximize the results of the system and, of course, to fit within the overall mix.
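One common way to get variety out of a 4-to-16-asset emitter group is a shuffle bag that never plays the same variant twice in a row. The article doesn't say which randomization scheme the engine used, so `VariantPicker` and the asset names below are hypothetical.

```python
import random

class VariantPicker:
    """Shuffle-bag selection over an emitter group's asset variants.

    Every variant plays once per cycle in random order, and the first pick of
    a new cycle is never the same as the last pick of the previous cycle.
    Assumes the asset names are unique.
    """
    def __init__(self, assets, rng=None):
        if len(assets) < 2:
            raise ValueError("need at least two variants to avoid repeats")
        self.assets = list(assets)
        self.rng = rng or random.Random()
        self.bag = []
        self.last = None

    def next(self):
        if not self.bag:
            self.bag = self.assets[:]
            self.rng.shuffle(self.bag)
            # Picks are popped from the end; swap if that would repeat the last asset.
            if self.bag[-1] == self.last:
                self.bag[0], self.bag[-1] = self.bag[-1], self.bag[0]
        self.last = self.bag.pop()
        return self.last
```

For example, `VariantPicker(["shutter_01", "shutter_02", "shutter_03", "shutter_04"]).next()` returns one of the four shutter variants.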

In turn, this led us to create wind emitters not only for specific structures but for graphical effects as well. Fire, for example, also had multiple audio emitters that would play back randomly depending on the wind settings and RMS. For these we triggered specific fire sound assets to play when the wind intensity was at its greatest, creating a bellows sound when the wind gusted. When a player walked past a burning jet engine or building on fire, the gusty wind overlay would trigger the fire to react to the increased amplitude. Tie this in with the fire particle effects growing along with the wind intensity, and voilà, it all came to life.”

GRAW2 MP In Game Sound Design Montage

[vimeo]http://vimeo.com/15823814[/vimeo]

The Physics Bonus Round

JW: “It was a short jump from there to replacing the random tree sway with sway driven by the wind input, and from there to playing with physics. Since this was 2006, we already had a pretty fair soda can effect to handle the can or two the artists put in the maps, but you only heard it after you actually shot the can. It was pretty simple to attach a sound listener to the Havok object code, which would translate our wind force into the Havok system and have it act on the attached object. While not at all useful from a gameplay or immersion standpoint, it was still great fun to watch a gust of wind knock a can over and then roll it underneath a car.
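The Havok integration isn't described beyond attaching a listener, so the sketch below does not use the Havok API. Instead it shows, under stated assumptions, the kind of force-versus-friction check that decides whether a gust moves a light object like a soda can: a toy one-dimensional model with hypothetical `Body` and `step` names and made-up friction coefficients.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2

@dataclass
class Body:
    mass: float          # kg
    mu_static: float     # static friction coefficient (assumed >= mu_kinetic)
    mu_kinetic: float    # kinetic friction coefficient
    x: float = 0.0       # position along the ground, m
    v: float = 0.0       # velocity, m/s

def step(body, wind_force, dt):
    """Advance one frame of a 1D slide/roll under a wind force (newtons)."""
    normal = body.mass * G
    if body.v == 0.0:
        if abs(wind_force) <= body.mu_static * normal:
            return  # gust too weak to overcome static friction
        direction = 1.0 if wind_force > 0 else -1.0
    else:
        direction = 1.0 if body.v > 0 else -1.0
    friction = -direction * body.mu_kinetic * normal  # opposes the motion
    new_v = body.v + (wind_force + friction) / body.mass * dt
    if body.v != 0.0 and new_v * body.v < 0.0:
        new_v = 0.0  # came to rest this frame; static friction applies next frame
    body.v = new_v
    body.x += body.v * dt
```

A gust drives the can only while its force exceeds the static-friction limit; once the wind dies down, kinetic friction brings it to rest.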

And as it turned out, there wasn’t a lot of effort required, and it cost almost nothing at run time to get perfectly synced effects and sound. While pushing Coke cans around is pretty trivial, it did show us that the concept was very practical for things like monsters’ voices, or any other effect that could look very cool if the sound produced were translated directly into a force that affects the appearance of a game’s environment.

For example, Matt was able to quickly create an effect for helicopters. These were an interesting challenge, because the designers had just added a new game mode called “Helo Hunt,” which was proving very popular among the dev team members. Suddenly the bad guys had more ’copters than Col. Kilgore and the 1st Air Cav… The initial piece we hooked in was a downward force from the blades that would affect particle systems. There tended to be a lot of burning buildings in the MP levels, so watching a helicopter come through a screen full of smoke, pushing the columns around in a fairly realistic way, was fun, as was watching the smoke gradually reconstitute over the fire below.

Our levels also had trees throughout, and they proved to be good places to find cover. Since we didn’t want you to feel completely secure, we added a force to the blades that would push the foliage around underneath the rotors. Helicopters hovering in a search pattern got a lot more exciting when they were throwing around the tree limbs and leaves above your position. And it gave you a lot more information about the helicopter’s position than the audio alone. Overall it was great fun to do, but there was a lot of tweaking; our initial cut at the feature made the place look more like a hurricane than anything else…”
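A rotor downwash force like the one described could be modeled as a push away from the rotor hub that fades with distance. The article doesn't specify the falloff, radius, or strength, so the quadratic falloff, the `downwash` name, and its parameters are assumptions (z is up).

```python
import math

def downwash(rotor_pos, point, max_force=50.0, radius=15.0):
    """Force a hovering rotor exerts on a point (particle or foliage node).

    Hypothetical model: the force points from the rotor hub toward the point
    (down and outward for points below the rotor), is strongest near the hub,
    falls off quadratically to zero at `radius`, and is zero above the rotor.
    """
    rx, ry, rz = rotor_pos
    px, py, pz = point
    dx, dy, dz = px - rx, py - ry, pz - rz
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    if dist >= radius or pz > rz:
        return (0.0, 0.0, 0.0)
    if dist == 0.0:
        return (0.0, 0.0, -max_force)  # directly at the hub: push straight down
    mag = max_force * (1.0 - dist / radius) ** 2
    return (mag * dx / dist, mag * dy / dist, mag * dz / dist)
```

The same vector could feed both smoke particles and foliage bending; the “hurricane” problem mentioned above would then mostly come down to tuning `max_force` and `radius`.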

The Wrap Up

JD: “In conclusion, this all came about by searching for ways to enhance the multiplayer environmental audio experience within our levels. Having various wind layers and overlays helped to tie the entire system together, as opposed to just playing a static 2D wind background for each level. Keeping the system as robust and dynamic as possible was the key to its success. Because so many dynamic elements were involved, it was easy to keep file counts to a minimum and still achieve outstanding results. The wind had three distinct bases, each with three overlays, which would trigger 3D emitters containing multiple variants across three levels of wind intensity (High, Medium, and Low), all of which could be set by the sound designer. It was a robust but intelligent system, and it helped bring life to our audio environments.

Many kudos to the team at Red Storm / Ubisoft for allowing us to experiment with this system and for putting up with us when we’d break the build… I promise that only happened a handful of times.”

JW: “One final note: while we did have quite a few things fall into place for us to be able to implement these systems in the time available, the fact that this all happened at Red Storm was no accident. RSE’s engineers and artists were always ready to try new approaches and, more importantly, always ready to give audio a chance to shine. I will always be grateful for the strong collaborative environment that allowed us to play with the possibilities. Thanks, guys!”

While it has become common to affect audio using parameters from various parts of the game engine, like passing materials for footsteps or velocity for physics impacts, it’s not often that we see the audio engine used to manipulate game-side data. By harnessing wind volume to drive visual parameters in the game, the GRAW2 MP team not only added character and realism to the different systems but also succeeded in bridging the gap between the audio and game engines. With most areas of the game industry reaching for greater and more realistic interactions that utilize every aspect of a game’s underlying simulations, we could do with a bit more cross-engine communication to make audio an integral part of the equation. With shining examples like the GRAW2 MP wind system standing as a testament to what can be done, hopefully the road ahead will be a two-way street, with audio helping to drive us forward into the next generation!

Huge thanks to Jeff and Justin for taking the time to bestow the bulk of this story upon the community.

-damian
