This month’s Audio Implementation Greats series returns with the overarching goal of broadening the understanding of Procedural Audio and how it can be used to further interactivity in game audio. While this article is not directed at any one game or technology, the potential of procedural technology in games has been gaining traction lately with high-profile uses such as the Spore Procedural Music System. After reading this article it should be obvious that real-time synthesis and procedural audio in games is something I have a great interest in, and the article should be taken more as a call to arms than as a critique of our current state of affairs. In the current generation of consoles we are deeply indebted to the trailblazers who have gone before us, and I feel that in acknowledgment of the history of game audio we must do what we can to build on past accomplishments and use everything at our disposal to increase the level of interactivity within our medium.

I can’t wait to hear what’s in store for us in the future!

Today’s article is also being released in conjunction with the Game Audio Podcast Episode 4: “Procedural Game Audio”, which brings to the table Andy Farnell, Francois Thibault, and David Thall, who all work in different areas of the gaming and audio industry. What starts out as a definition of procedural audio eventually ends up as speculation of sci-fi proportions. We discuss, among other things, the role of interactive audio and how it can be used to support immersion in games, how to employ already existing systems in order to more accurately reflect underlying simulations, and suggestions for moving forward with procedural in the future. It is an episode that has been a long time in the making, and Anton and I both hope it will ignite a spark of inspiration for those of you who are interested in what procedural has to offer.

With that in mind I encourage you to explore all of the different materials presented: this article, GAP#4, and the collection of procedural related links at the LostChocolateLab Blog.

I look forward to the continuing discussion!

It pains me to have to be the one to break it to you all but, from where I’m standing, the year is already over. As the past months spiral behind us in an unending blur of tiny victories and insurmountable setbacks, it’s impossible not to feel like time is rapidly accelerating towards the inevitable end of the year. Having just completed and rolled off an epic product cycle, I have been forced to immediately downshift and reflect on what we were able to accomplish and, when it comes to bigger picture questions in game audio, what is left to accomplish.

Call it “taking stock” if you like but, from where I’m standing, interactive sound still has a long way to go towards delivering on the promises of its past successes, and even further to go in catching up to the current state of affairs elsewhere within the game development pipeline. I’m not prone to complaining, and would rather just get on with the task at hand, but there are times when I feel like audio has resigned itself to sit quietly while the visual aspects of our industry struggle to bridge the uncanny valley.

That’s not to say there haven’t been advancements in game audio in the current generation. As each successive sequel or variation on a theme rushes out the door to greet players, we have quickly made the move towards unifying the quality of sound between cinematic, fully rendered cutscenes and gameplay. Where once we had to accept the discrepancies between full frequency linear audio and its slowly maturing (and highly downsampled) interactive counterpart, we are now fooling even the best of them into thinking that there is no difference. But in our quest for this unification I feel like we, as an industry, have neglected one of the strengths of our medium, namely interactivity.

It’s become common practice during “Quick Time Events” (QTE) or linear “In-Game Cinematics” to stream in linear audio to support or replace the scripted action on screen, thus guaranteeing that sound be communicated appropriately every time. While this is in direct support of the Film vs. Game convergence concept, it also effectively removes randomness and variation from the equation resulting in a more consistent but less surprising experience. Every time the sequence is repeated it will sound exactly the same. Every footfall, sword impact, explosion, and dialog line reproduced in stunning 5.1 meticulously as designed each and every time, over and over. If the other big concept constantly bandied about in the industry is “Immersion”, then there is nothing that will break that faster than repetition. I’d rather opt for a more consistently surprising experience than risk taking the player out of the moment.

The point I am trying to make is that, during this race for convergence, it seems that we have left to atrophy some of the fundamental tools and techniques utilized during previous generations of interactive audio, things that could potentially bring our current quality level in line with our ability to manipulate sound in a way that is directly reactive to the drama unfolding on screen.

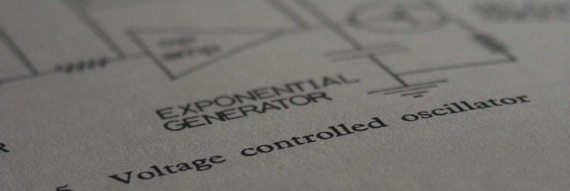

A brief divergence: Once upon a time all was synthesized in the world of game audio, and everything was made up of sounds – skronking, bleeping, & blarping – out of arcade cabinets and home game consoles everywhere (the origins and development of which have been lovingly detailed by Karen Collins in her book Game Sound). From those synthetic beginnings, and through the transition to PCs and MIDI-based sound and music, we arrived at CD-ROM and then consoles that supported the playback of CD-quality sound. For the first time we weren’t limited to the textures created in code and, in the rush to better approximate reality with higher quality sample-based sounds, we never looked back.

While no one would argue that this first phase of sound synthesis in video games resembled the sound of the real world, at that time there was a greater ability on the part of the programmer/sound designer/composer to leverage gameplay and the game engine in order to dynamically affect the sound being output. Each generation begets its own tools, built from scratch to support newly unlocked functionality and hardware that has never before existed. As each new platform enters the market, developers are stuck in an endless loop of constantly evaluating new technology and re-evaluating the old. The road between then and now is littered with implementations, toolsets, and processes that were previously used to connect audio to gameplay. In the beginning, synthesis was the foremost methodology for sound in games, but advancements in that area fell by the wayside as sample-based technology took over.

However, work on synthesis has sped forward outside of game audio, in areas where resources are more readily available and computational restrictions are less of an issue. Much ground has been covered in the intervening years in all areas of synthesis, music production being one example. We have arrived at a point where a good deal of music written for games (and otherwise) is created with a combination of samples, synthesis, procedural techniques, and sound modeling. To a degree, a real orchestra and a synthetic representation of an orchestra are becoming indistinguishable. In short, we are no longer bound by the limitations of synthesis outside of games, but in game audio we’ve for the most part abandoned synthesis as a tool to be utilized at runtime.

I’m wondering, as I sit in front of a display crammed with magical audio tool functionality, why this feels like the first volume slider (or fader) I’ve ever seen in game audio that even remotely resembles the bling’d-out interfaces of our fellow sound compatriots over in the world of Pro Audio. You know what else is great about it? It measures volume in decibels (dB), not some programmer-friendly 0–1 or 0–100 scale that has nothing to do with the reality of sound. And I think to myself, “Have we finally arrived at the 1970s of Game Audio?” That question should go a long way towards framing our current situation in relation to that of our audio associates outside of games.
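To make the decibel point concrete, here is a minimal sketch of the conversion between a dB fader value and the linear multiplier an engine ultimately applies to samples. The function names and the -96 dB floor are my own assumptions for illustration, not taken from any particular tool.

```python
import math


def db_to_linear(db: float) -> float:
    """Convert a gain in decibels to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


def linear_to_db(gain: float, floor_db: float = -96.0) -> float:
    """Convert a linear amplitude multiplier to decibels.

    floor_db is an assumed silence floor, used because log10(0) is undefined.
    """
    if gain <= 0.0:
        return floor_db
    return max(floor_db, 20.0 * math.log10(gain))


if __name__ == "__main__":
    for db in (0.0, -6.0, -12.0, -20.0):
        print(f"{db:6.1f} dB -> linear {db_to_linear(db):.3f}")
```

Seen this way, a 0–1 slider parked at 0.5 sits only about 6 dB below full scale, which is one reason a linear scale tends to feel crowded near the bottom of its travel.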

While direct comparisons to other disciplines may seem unfair, it’s hard not to look at them and wish for some semblance of standardization to hang our hats on. It would seem that, due to the speed at which things change in the games industry and the constant need for evolution, we have instead opted to lock ourselves away in isolation, each working to find the best way to play back a sound. In the rush to move the ball forward individually we have only succeeded in bringing it halfway towards what has been realized for sound outside of games.

During my time with Audio Middleware I have been constantly relieved that we no longer have to tackle the problem of how to play a WAV file. If any kind of standards have emerged, it has been through the publicly available game audio toolsets of the current and past generations. The coalescing of all the different implementation techniques and methodologies is represented across the different manufacturers. Several best practices and lessons learned across the industry have been adopted, including abstracted Event systems, bank loading, basic synthesis, interactive music, and in some cases the beginnings of Procedural audio. Middleware is leading by example by providing a perfect storm of features, functionality, accessibility, and support that has helped to define exactly what implementation and technical sound design is right now. This is not to say that proprietary toolsets shouldn’t exist when necessary, but that our drive to stay proprietary is holding us back from working together to raise the bar.

Aside from whatever whizz-bang sound gadgets we might wish for on any given project, at the end of the day the single most important role that audio must play is to support the game being made. In addition to that, if we as an industry are truly attempting to simulate (and manipulate?) parameters based in reality – physics, weather, AI, etc. – in our medium, it would make sense that our advances in audio should mirror the randomness and variability of the natural world. Increasing CPU power and exploding RAM specs are all steps in the right direction, but unleashing that functionality calls for a drastic rethinking of how to keep things dynamic and in constant action/reaction to the variables happening on the gameplay and visual side of things. One of the ways to further this relationship is by embracing the strengths of our history with synthesis and taking a page from the exciting work going on in procedural audio outside of games.

Let’s pause here for a definition of Procedural Audio:

“Procedural audio is non-linear, often synthetic sound, created in real time according to a set of programmatic rules and live input.” – “An introduction to procedural audio and its application in computer games.” by Andy Farnell

And a more specific definition regarding its application in games:

“Procedural audio is the algorithmic approach to the creation of audio content, and is opposed to the current game audio production where sound designers have to create and package static audio content. Benefits of procedural audio can be numerous : interactivity, scalability, variety, cost, storage and bandwidth. But procedural audio must not be seen as a superior way for creating game audio content. Procedural audio also has drawbacks indeed, like high computational cost or unpredictable behaviors.” – from paper entitled “Procedural Audio for Games using GAF”

In an attempt to shed some light on what technologies exist, I have included answers to some basic questions I solicited during my research phase for this article. Three audio specialists who were gracious enough to bring me up to speed on several emerging technologies offer opinions on the current state of Procedural Sound and Synthesis.

Interviewed via email were:

– David Thall (DT)

– Mads Lykke (ML)

– Andy Farnell (AF)

Damian Kastbauer: Where do you see the greatest potential for procedural audio in games today?

DT: Physical Modeling.

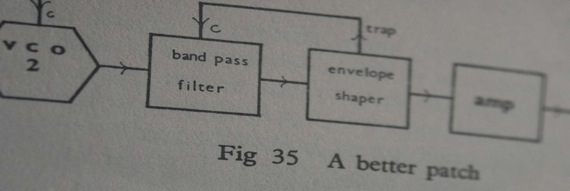

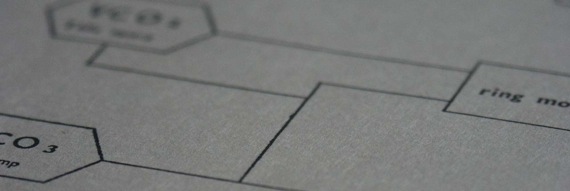

The current video game sound design paradigm suggests that sound designers add ‘effects’ to a scene in some post-processing stage. For example, they might be given the job to sonify an animated simulation of a character or environment. However, there is no reason why sound designers should need to mix ‘canned’ recordings of sounds into an environment that is already in partial to full simulation.

For example, if we are ‘already’ looking at a mechanical simulation of an electricity-producing water mill, why not simulate the sound production using the same model? A sound designer could provide the model with ‘grains’ of sound, each of which would be procedurally combined by the underlying model to generate the sound scene in real-time.
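A rough illustration of the water mill idea, not taken from the interview: if the mechanical simulation already knows how fast the wheel is turning, grain triggers can fall directly out of its state. The sketch below assumes a hypothetical wheel with evenly spaced paddles and a per-step angular speed supplied by the simulation; `paddle_events` and its parameters are invented for the example.

```python
import math


def paddle_events(angular_speeds, dt, paddles=8):
    """Return (time_s, speed) pairs, one per paddle entering the water.

    angular_speeds: wheel speed in radians/second, one value per simulation step
                    (assumed to come from the game's mechanical simulation).
    dt:             simulation step length in seconds.
    paddles:        number of evenly spaced paddles on the wheel.
    """
    spacing = 2.0 * math.pi / paddles  # angle between neighbouring paddles
    angle = 0.0                        # total rotation so far
    next_hit = spacing                 # rotation at which the next paddle hits
    events = []
    for step, speed in enumerate(angular_speeds):
        angle += speed * dt
        # A fast wheel may push more than one paddle through in a single step.
        while angle >= next_hit:
            events.append(((step + 1) * dt, speed))
            next_hit += spacing
    return events


if __name__ == "__main__":
    # One second of simulation at slightly more than one revolution per second.
    speeds = [2.0 * math.pi * 1.05] * 100
    for time_s, speed in paddle_events(speeds, dt=0.01):
        # A real system would trigger a splash grain here, scaled by speed.
        print(f"splash grain at {time_s:.2f}s, wheel speed {speed:.2f} rad/s")
```

Each event would trigger one of the sound designer’s grains, with the wheel speed available to scale its gain or pitch, so the sound slows, speeds up, and stops exactly when the wheel does.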

ML: In sound effects based on object information from the 3D-objects (size, shape, surface, motion, interaction with other objects etc.)

That said, I do not think that the resources available for procedural audio in today’s games allow for advanced and detailed synthesis models – like the models used in computer games graphics. I like to compare the current state of procedural audio with the situation of 3D graphics around 1990. There is a lot of work being done in the labs and papers concluding on the promising potential of procedural audio but no one has really proved the benefit of procedural audio in commercial games yet.

In my opinion this has to do with the focus of commercial game developers today. For years the goal of many game developers (and programmers) has been to achieve the most realistic games possible with the present hardware. This focus has caused the more experimental and simplistic audio designs (including synthetic audio designs) to lose ground to sample-based audio. This focus has led to today’s paradox where sample-based audio is the auditive counterpart to the highly detailed modeled computer graphics.

AF: In terms of sound classes? Engines for racing games, some animal models maybe (especially in lightweight children’s games), vehicle dynamics, environmental and ecological sounds. That’s the stuff for the next couple of years. Generative music systems of course. There are many aspects to procedural audio and many interpretations of the use of that technology. At one end of the scale you might consider offline automatic asset generation a la James Hahn (Audio Rendering), at the other end (of the real-time scale) you might consider behaviouralist interpretations of control (when applied to ordinary samples) as the next step. In fact interfacing problems in the gap between method and physics engine are very interesting as transitional technology right now.

But procedural audio is about much more than interesting synthetic sounds, it’s a whole change of approach to the audio computing model and the real potential is related to:

– LOAD (level of audio detail)

– Sounding object polymorphism and inheritance

– Automatic asset generation

– Perceptual data reduction
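One possible reading of the LOAD item in the list above, by analogy with level of detail in graphics, is that a synthesized sound can spend fewer resources the further it is from the listener. The sketch below is only that reading, with made-up thresholds and names: an additive tone whose number of partials drops with distance.

```python
import math


def partials_for_distance(distance_m, max_partials=16, min_partials=1,
                          full_detail_m=2.0, cutoff_m=50.0):
    """Pick how many partials to synthesize for a source at distance_m.

    All thresholds are hypothetical tuning values for illustration.
    """
    if distance_m <= full_detail_m:
        return max_partials
    if distance_m >= cutoff_m:
        return min_partials
    t = (distance_m - full_detail_m) / (cutoff_m - full_detail_m)
    return max(min_partials, round(max_partials - t * (max_partials - min_partials)))


def render_tone(distance_m, base_hz=220.0, seconds=0.05, sample_rate=8000):
    """Render a short additive tone whose detail depends on distance."""
    count = partials_for_distance(distance_m)
    samples = []
    for i in range(int(seconds * sample_rate)):
        t = i / sample_rate
        # Harmonic k+1 at amplitude 1/(k+1); fewer harmonics means less work.
        samples.append(sum(math.sin(2.0 * math.pi * base_hz * (k + 1) * t) / (k + 1)
                           for k in range(count)))
    return count, samples


if __name__ == "__main__":
    for distance in (1.0, 10.0, 40.0, 100.0):
        count, _ = render_tone(distance)
        print(f"{distance:6.1f} m -> {count} partials")
```

The same sounding object serves every distance; only the amount of computation changes, which is hard to do with a fixed set of recorded samples.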

DK: What is the biggest obstacle to bringing procedural audio to consoles?

DT: Weak Sound Engine Integration.

Sound engines must be integrated into the larger graphics pipeline, so that tools and runtime systems can be developed that work audio into the ‘specific’ (as opposed to ‘general’) case.

For example, the rhythmic variations in a piece of music should be accessible by the animation and environmental systems. This would allow animation to better synchronize with the dynamics provided by a game’s sound track.

Another example might allow programmers or sound designers to modulate the volume of one sound with the scale of some visual effect, such as an explosion. Something as simple as tool-driven inter-system parametric modulation would increase the procedural variation factor by orders of magnitude.
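A minimal sketch of that kind of inter-system modulation, assuming the visual effects system exposes an explosion scale as a plain number. The ranges, the name `scale_to_gain`, and the choice to interpolate in decibels are assumptions made for the example.

```python
def scale_to_gain(scale, min_scale=0.5, max_scale=4.0, min_db=-18.0, max_db=0.0):
    """Map a visual effect's scale to a linear gain for its sound.

    The scale is clamped to [min_scale, max_scale], mapped linearly onto
    [min_db, max_db], then converted from decibels to a linear multiplier.
    All range values here are hypothetical tuning parameters.
    """
    t = (scale - min_scale) / (max_scale - min_scale)
    t = min(1.0, max(0.0, t))  # clamp so out-of-range scales stay safe
    gain_db = min_db + t * (max_db - min_db)
    return 10.0 ** (gain_db / 20.0)


if __name__ == "__main__":
    for scale in (0.25, 0.5, 2.0, 4.0, 8.0):
        print(f"explosion scale {scale:4.2f} -> gain {scale_to_gain(scale):.3f}")
```

Exposing the four range values in a tool, rather than in code, is what would put this mapping in the hands of the sound designer.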

In a galaxy far far away I think procedural audio will be able to produce extremely detailed and realistic synthetic audio that to some extent can match the present 3D graphics with regards to “realism”.

ML: The biggest obstacle to bringing procedural audio to consoles is the mismatch between the realism of the procedural audio we are capable of producing with the present hardware, compared to the computer graphics of our consoles.

In order to create a game where the player will not miss the usual sample-based audio we need to consider creating games that support a more experimental and simplistic auditive expression.

DK: Do libraries exist that would scale to a console environment? (pd, max/msp, csound, etc.)? Are the CPU / memory requirements efficient?

DT: Max/MSP could easily be modd’ed to work within a game console development environment. The graph node interface is a well-established paradigm for building and executing ‘systems’ such as those that might be architected to solve a procedural audio algorithm. With some work on the GUI-side, both pd and CSound could be extended to do the same. Arguably, all of these environments would be useful for ‘fast prototyping’, which is always a plus.

On the other hand, none of these systems are well-suited to handle the static memory allocation requirements of most game console memory management systems. Software would ‘definitely’ need to be written to handle these cases from within the respective environment.

ML: My experience with consoles is limited although I have some experience with max/MSP and pd. But I have never tried porting some of my patches for consoles etc.

AF: Chuck remains very promising. So does Lua Vessel since Lua already has a bit of traction in the gaming arena. Work on the Zen garden at RjDj is the closest project I know with the stated aims of producing an axiomatically operator complete library aimed at embedded sounding objects for games and mobile platforms.

DK: What examples of procedural audio are you aware of? (current or past)

DT: Insomniac’s sound engine has a walla-generator that builds ‘crowds’ of dialogue from ‘grains’ (or snippets) of dialogue. Various parameters are modulated by gameplay to control different aspects of the walla, such as density over time and volumetric dispersion over space. These are used extensively to increase the perceived perception of scene dialogue and action.
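To give a feel for how such a generator might be driven, here is a toy scheduler. It is not Insomniac’s implementation, which the interview does not detail. It assumes a density parameter (grains per second) and a spread parameter (stereo pan width) that gameplay could modulate, and it spaces grain onsets with exponentially distributed gaps so the crowd never falls into a regular rhythm.

```python
import random


def schedule_walla(duration_s, density_per_s, snippet_count, spread=1.0, seed=0):
    """Return a list of grain events for a crowd bed.

    density_per_s: average number of dialogue snippets started per second.
    snippet_count: how many recorded snippets are available to choose from.
    spread:        maximum pan offset, where 1.0 means hard left to hard right.
    All names and parameters are hypothetical, chosen for illustration.
    """
    if density_per_s <= 0.0 or snippet_count <= 0:
        return []
    rng = random.Random(seed)
    events = []
    t = rng.expovariate(density_per_s)  # gap before the first grain
    while t < duration_s:
        events.append({
            "time": t,
            "snippet": rng.randrange(snippet_count),
            "pan": rng.uniform(-spread, spread),
        })
        t += rng.expovariate(density_per_s)  # random gap to the next grain
    return events


if __name__ == "__main__":
    calm = schedule_walla(10.0, density_per_s=2.0, snippet_count=12, spread=0.3)
    riot = schedule_walla(10.0, density_per_s=20.0, snippet_count=12, spread=1.0)
    print(len(calm), "grains in the calm crowd,", len(riot), "in the riot")
```

Raising the density and spread as tension builds turns the same dozen snippets into anything from a murmur to a riot.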

ML: Through my research I have not come across any contemporary examples of procedural audio in commercial games. Instead I have found a lot of inspiration in the good ol’ console games that rely on sound synthesis – as Magnavox, Atari, and Nintendo did in the 70s and 80s – before the entire game community went MIDI-berserk in the 90s, which subsequently spawned the sample-based mentality that dominates today.

AF: Best examples, on the musical side, are still those that go back to the demo scene. A demo title like .produkt (dot product) from Krieger 1997 shows the most promising understanding of real musical PA I have seen because it integrates synthesis code and generative code at an intimate level. Disney, EA, and Eidos all have programmers that venture into proc audio.

DK: What work on procedural audio outside of games would you say is currently the most exciting?

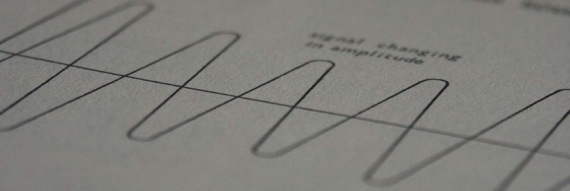

DT: Granular Synthesis of Environmental Audio

Granular Synthesis allows sound designers to easily sample, synthesize and parametrically-generate environmental audio effects, such as wind, water, fire and gas effects, among others, with a level of detail and morphology only hinted at in game-specific procedural audio systems. The fact that sound designers and composers have been successfully using these systems for decades to create easily modifiable ambience shows its greatest potential.
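For readers who have not met the technique, a bare-bones granular sketch follows. It copies short, Hann-windowed grains from random positions in a source buffer to random positions in an output buffer and sums them (overlap-add). White noise stands in for a recording, and every parameter value is an arbitrary assumption; a production system would add pitch, density, and envelope control.

```python
import math
import random


def hann(length):
    """Hann window, used to fade each grain in and out without clicks."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (length - 1))
            for i in range(length)]


def granulate(source, out_len, grain_len=256, grains=200, seed=0):
    """Build a texture of out_len samples from windowed grains of source.

    Requires len(source) >= grain_len and out_len >= grain_len.
    """
    rng = random.Random(seed)
    window = hann(grain_len)
    out = [0.0] * out_len
    for _ in range(grains):
        src = rng.randrange(len(source) - grain_len + 1)  # where to read
        dst = rng.randrange(out_len - grain_len + 1)      # where to write
        gain = rng.uniform(0.3, 1.0)
        for i in range(grain_len):
            out[dst + i] += gain * window[i] * source[src + i]
    peak = max(abs(s) for s in out)
    if peak > 0.0:
        out = [s / peak for s in out]  # normalise to avoid clipping
    return out


if __name__ == "__main__":
    noise = random.Random(1)
    source = [noise.uniform(-1.0, 1.0) for _ in range(4096)]  # stand-in recording
    texture = granulate(source, out_len=8000)
    print(len(texture), "samples, peak", max(abs(s) for s in texture))
```

Because the output is assembled from rules rather than played from start to finish, its length, density, and character can all change at runtime without a new asset.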

I think the development within virtual musical instruments (like vst instruments) is interesting in relation to procedural audio in computer games. Many virtual instruments run native (without external DSP) so they need to have a relatively small CPU usage, since they are a part of a recording environment, where other plug-ins need the CPU as well.

ML: I am very interested in virtual instruments capable of producing complex but controllable sounds, rather than virtual instruments trying to emulate single instruments like an electric piano to perfection.

We need to consider developing models for procedural audio that are able to produce complex and yet controllable sounds, in order to minimize the CPU cost. If we manage to create such models we can lower the instance count of the models needed to create more complex sounds – and thereby also move away from a procedural sound design that sounds simplistic.

I think that the developers of virtual instruments are 5-10 years ahead of procedural audio designers which is why this field can serve as an inspiration to many of us.

AF: So much happening outside games (it) is amazing. Casual apps on iPhone and Android are moving into the grey area between gaming and toy apps, things like Smule, RjDj and so forth. Combined with social and ad-hoc local networking these can threaten the established ‘musical’ titles, ‘guitar hero’ etc. in short order. (An) Italian group around the original Sounding Object research sprint are always on my mind. We need more of that kind of thing. Mark Grimshaw from Bolton is editing a book for IGI available later this year and has some good contributors on the subject of emerging game audio technologies: Karen Collins, Stefan Bilbao, Ulrich Reiter. My own book (Designing Sound) is now with MIT Press. There is some new focus on modal methods, with Dylan Menzies’ work on parameter estimation from shape being a promising transitional technology (taken a long way by den Doel and Pai of course with their Foley-matic).

DK: What area of procedural audio are you most interested in?

DT: Geometric Propagation Path-based Rendering

Geometric sound propagation modeling, though not a new concept, would benefit game audio immensely if it were procedurally generated in real-time. This would include reflection, absorption and transmission of indirect sound emissions into geometric surfaces, diffraction and refraction of sound along edges, and integration over the listener’s head and ears. Done correctly next-gen, this could increase perceived immersion immensely. Nuff said!
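The simplest building block of geometric propagation is a single reflection, sketched below with the image-source idea: mirror the source through a surface and treat the mirror image as a second, quieter source. This is only an illustration under strong assumptions (a flat floor at height zero, inverse-distance attenuation, one made-up reflection factor) and it ignores the diffraction, transmission, and head modeling mentioned above.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at roughly 20 degrees C


def direct_and_floor_reflection(source, listener, reflection_gain=0.7):
    """Return delay and gain for the direct path and one floor bounce.

    source, listener: (x, y, z) positions in metres, with the floor at y = 0.
    reflection_gain:  hypothetical amplitude factor kept after the bounce.
    """
    mirrored = (source[0], -source[1], source[2])  # image source below the floor
    paths = []
    for name, origin, surface_gain in (("direct", source, 1.0),
                                       ("floor", mirrored, reflection_gain)):
        distance = math.dist(origin, listener)
        paths.append({
            "path": name,
            "delay_s": distance / SPEED_OF_SOUND,
            # Inverse-distance attenuation, clamped inside one metre.
            "gain": surface_gain / max(distance, 1.0),
        })
    return paths


if __name__ == "__main__":
    for p in direct_and_floor_reflection((0.0, 2.0, 0.0), (3.0, 2.0, 0.0)):
        print(f"{p['path']:6s} delay {p['delay_s'] * 1000:.2f} ms, gain {p['gain']:.3f}")
```

Feeding each path into its own delay line and gain stage gives an early reflection that moves with the geometry, and a real renderer would repeat this for every nearby surface and for higher-order bounces.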

ML: At the moment I am mostly interested in virtual musical instruments. But I am also very interested in procedural audio in computer games because it is on “the breaking point”.

AF: The bits I haven’t discovered yet. :)

To get another earful of Procedural Audio for Games, head over to the Game Audio Podcast Episode #4 and listen to experts working in the field weigh in with their experiences and opinions. If you’re still itching for more, I’ve posted a round-up of related links over at the LostChocolateLab Blog which should help fill in the gaps. Please drop by to explore additional Procedural Audio articles, videos, and white papers that I’ve collected during my research.

I was inspired by a recent keynote from Warren Spector in which he called this moment in game development a “sort of renaissance”. I feel the same way about game audio: there is so much change happening, and so many breakthroughs being made, that we really are in a golden age. By taking a moment to reflect on the past, widening the scope of audio for games, and staying true to the strengths of interactive, I think we can travel the next section of road and ride off into the sunset. Saddle up, partners!

