In Part One we took a look at some of the fundamentals involved in orchestrating the sounds of destruction. We continue here with another physics system design, presented at last year’s Austin Game Developers Conference, and then take a brief look at where these techniques may be headed.

UNLEASH THE KRAKEN

In Star Wars: The Force Unleashed we were working with two physics middleware packages: Havok Physics and Pixelux’s Digital Molecular Matter (DMM). In addition to the simulation data that each provided, we also needed to manage the relationship between the two. While Havok has become a popular choice for runtime physics simulation, DMM spoke to the core of materials: it gave each object physical properties that enabled physically modeled dynamic fractures and bending in addition to collisions. In some ways, tackling the sound for both systems was a monumental undertaking, but there was enough overlap to make the process more pleasure than pain.

Before jumping into the fray, I want to take a moment to echo a couple of points touched on in the companion to this article: collaboration and iteration are the cornerstones of a quality production when it comes to systems design. Collaboration, because the stakeholders usually include people across all disciplines: programmers and sound designers, modelers and texture artists, build engineers and game designers. Iteration, because the initial vision is at best an approximation, and until things get moving it’s difficult to know what shape they will eventually take.

While you simultaneously rein in and let loose the creativity ebbing and flowing across the development team, nothing is more important than the support of your colleagues. Leveraging the specialties of different people brings new ideas to situations in need of a solution. Your greatest asset as a team member is to recognize and respect the uniqueness of your co-workers and to stay open to the constantly shifting requirements of the game. Good listening and better communication will improve the productivity of meetings and reinforce the fundamental desire everyone shares: to craft the best player experience possible.

DIGITAL MOLECULAR MAGIC

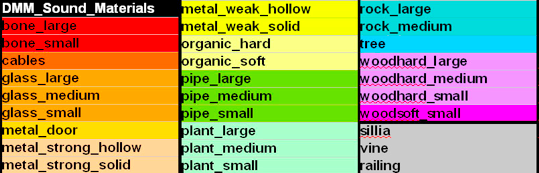

Starting with Digital Molecular Matter, Audio Lead David Collins worked closely with Pixelux to identify the core components that could be used to bring sound to the simulations. Prototypes were created offline during pre-production to find the best way to score the dynamic, physically modeled objects being created by the art team. From a list of over 300 DMM material types, we abstracted a group of about 30 that would cover all of the sound types and object sizes. These DMM Sound Materials were added as a “Sound Material” property in the metadata for each DMM Material type. This was the first step in defining the sound an object would make during collisions, fractures, and bending.
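To make the many-to-one abstraction concrete, here is a minimal Python sketch, assuming each DMM material’s metadata is a simple dictionary with a hypothetical `sound_material` key. The material and Sound Material names are invented for illustration; the article does not list the actual 300-plus DMM materials or the roughly 30 Sound Materials.

```python
from typing import Dict

# Hypothetical DMM material metadata: several physical materials share one
# abstracted Sound Material, mirroring the ~300 -> ~30 reduction.
DMM_MATERIAL_METADATA: Dict[str, Dict[str, str]] = {
    "steel_plate_thin": {"sound_material": "metal_light"},
    "steel_grate": {"sound_material": "metal_light"},
    "steel_beam": {"sound_material": "metal_heavy"},
    "pine_plank": {"sound_material": "wood_light"},
    "oak_door": {"sound_material": "wood_heavy"},
    "window_glass": {"sound_material": "glass"},
}


def sound_material_for(dmm_material: str, default: str = "generic") -> str:
    """Return the Sound Material stored in a DMM material's metadata, or a fallback."""
    return DMM_MATERIAL_METADATA.get(dmm_material, {}).get("sound_material", default)
```

One benefit of storing the Sound Material as metadata is that new physical materials can be added without new audio content, as long as they reuse an existing Sound Material.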

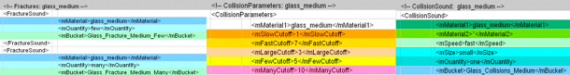

Behind each of these Sound Materials was a set of parameters for speed (fast/slow), size (small/medium/large), and quantity (one/few/many), along with specifications for sound interactions between surface types, that let us set different thresholds for each parameter and scale sample content across the different values. The content itself (abstracted Sound Cues, or Events) was assigned to the DMM Sound System using “Sound Buckets”, which specified the sound content to be used for a given parameter’s action when it was triggered.
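A minimal Python sketch of how a Sound Material might turn raw simulation values into a Sound Bucket lookup. The threshold values, units, and cue names are hypothetical, surface-type interactions are left out, and the article does not describe how the real thresholds were stored or compared.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence, Tuple

SPEEDS = ("slow", "fast")
SIZES = ("small", "medium", "large")
QUANTITIES = ("one", "few", "many")


def classify(value: float, limits: Sequence[float], labels: Sequence[str]) -> str:
    """Return the first label whose upper limit the value falls below, else the last label."""
    for limit, label in zip(limits, labels):
        if value < limit:
            return label
    return labels[-1]


@dataclass
class SoundMaterial:
    name: str
    fast_speed: float                         # speed at or above which an event is "fast"
    size_limits: Tuple[float, float]          # small/medium and medium/large boundaries
    quantity_limits: Tuple[int, int]          # one/few and few/many boundaries (piece count)
    buckets: Dict[Tuple[str, str, str], str]  # (speed, size, quantity) -> Sound Cue

    def pick_cue(self, speed: float, size: float, count: int) -> Optional[str]:
        key = (
            classify(speed, (self.fast_speed,), SPEEDS),
            classify(size, self.size_limits, SIZES),
            classify(count, self.quantity_limits, QUANTITIES),
        )
        return self.buckets.get(key)


# Example: every bucket filled with a hypothetical cue name.
WOOD = SoundMaterial(
    name="wood",
    fast_speed=5.0,
    size_limits=(0.5, 2.0),
    quantity_limits=(2, 6),
    buckets={(s, z, q): f"wood_{s}_{z}_{q}" for s in SPEEDS for z in SIZES for q in QUANTITIES},
)
```

For instance, `WOOD.pick_cue(8.0, 0.2, 1)` resolves to the fast/small/one bucket, while a slow, large, many-piece fracture resolves to a different bucket without any extra code.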

In this way we could play appropriately sized collision and fracture sounds based on the number and type of actions requested by the system. Behind the Sound Cue referenced in each Bucket we had the usual control over file, pitch, and volume randomization, in addition to 3D propagation min/max distances and priority, which became crucial for reining in the number of instances of a Sound Cue during a given request from the system.
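The sketch below shows, under stated assumptions, how a Sound Cue with file, pitch, and volume randomization plus min/max distances might cap a burst of simultaneous requests. The instance cap, the linear rolloff curve, and the nearest-first rule (a stand-in for the priority setting, whose actual evaluation the article does not describe) are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class SoundCue:
    files: List[str]
    pitch_range: Tuple[float, float] = (0.95, 1.05)  # random playback-rate multiplier
    volume_range: Tuple[float, float] = (0.8, 1.0)   # random linear gain
    min_distance: float = 2.0                         # full volume inside this radius
    max_distance: float = 30.0                        # culled at or beyond this radius
    max_instances: int = 3                            # hypothetical cap per system request


def attenuation(cue: SoundCue, distance: float) -> float:
    """Assumed linear rolloff between the cue's min and max distances."""
    if distance <= cue.min_distance:
        return 1.0
    if distance >= cue.max_distance:
        return 0.0
    return 1.0 - (distance - cue.min_distance) / (cue.max_distance - cue.min_distance)


def play_request(cue: SoundCue, distances: Sequence[float], rng: random.Random) -> List[Dict]:
    """Turn one request (many simultaneous impacts) into a capped list of randomized voices.

    Nearest impacts win, standing in for whatever priority rule the engine applied.
    """
    audible = sorted(d for d in distances if d < cue.max_distance)
    voices = []
    for d in audible[: cue.max_instances]:
        voices.append({
            "file": rng.choice(cue.files),
            "pitch": rng.uniform(*cue.pitch_range),
            "volume": rng.uniform(*cue.volume_range) * attenuation(cue, d),
            "distance": d,
        })
    return voices
```

With five debris impacts in one request, only the three nearest audible ones become voices, each with its own randomized file, pitch, and volume.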

We also had bending information to deal with, specifically for metal, wood, cables, and organic vines. When the system determined that a DMM object had begun to bend, it would start a loop that continued as long as a minimum threshold of force was being applied to the object. While the loop played, the system also triggered single-element bend “sweeteners” whenever the amount of bending spiked. The best example of this can be heard when wrestling with one of the giant doors between areas in a level.
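The bend behavior described above amounts to a small state machine. Here is a minimal Python sketch, assuming per-frame force and bend-amount values; the force threshold, the spike size, and the event names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BendAudio:
    min_force: float = 10.0    # hypothetical force needed to keep the bend loop alive
    spike_delta: float = 25.0  # hypothetical per-frame bend increase that fires a sweetener
    looping: bool = False
    last_bend: float = 0.0
    events: List[str] = field(default_factory=list)

    def update(self, force: float, bend_amount: float) -> None:
        if force >= self.min_force:
            if not self.looping:
                self.looping = True
                self.events.append("start_loop")  # bending has begun
            if bend_amount - self.last_bend >= self.spike_delta:
                self.events.append("sweetener")   # one-shot layered over the loop
        elif self.looping:
            self.looping = False
            self.events.append("stop_loop")       # force fell below the threshold
        self.last_bend = bend_amount
```

Feeding it a door being forced open, then released, produces a loop start, one sweetener on the big jump in bend, and a loop stop.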

For an additional summary of the DMM audio system, check out Jesse Harlin’s fantastic overview in the September 2008 issue of Game Developer Magazine.

BRING IT TO THE TABLE

We took a different approach to the data coming from the Havok side of the physics simulation, where we had a greater level of detail for throwable objects and the impacts they caused across the different environmental material types.

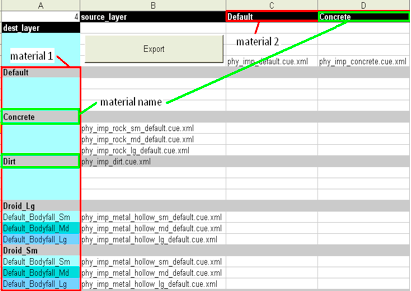

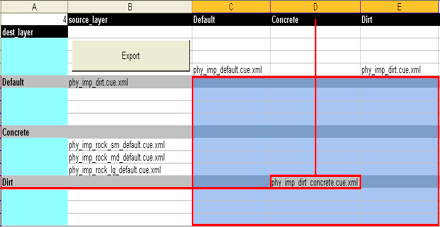

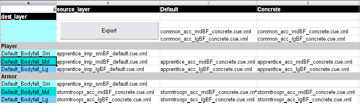

One of the most common audio techniques for physics integration in the current and previous generations is a look-up table, or matrix, that defines material actions and their interactions. Using a spreadsheet as the starting point for the system, surface materials are arranged along both the top row and the leftmost column of the sheet. At the cell where a row and column intersect, the Source Material to Destination Material sound interaction can be specified, usually as an audio file or as an abstracted reference to a group of files with additional properties for randomizing pitch and volume; this is what we called a Sound Cue.
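As an illustration, here is a minimal Python sketch of reading such a spreadsheet grid into a (source, destination) lookup. The grid is a hypothetical stand-in; only `phy_imp_dirt_concrete` echoes a name from this article, and the other materials and cue names are invented.

```python
from typing import Dict, List, Optional, Tuple

# Stand-in for the spreadsheet: row 0 holds destination materials, column 0 holds
# source materials, and each intersecting cell names a Sound Cue (blank = none).
SHEET: List[List[str]] = [
    ["",      "dirt",               "concrete",               "metal"],
    ["dirt",  "phy_imp_dirt_dirt",  "phy_imp_dirt_concrete",  ""],
    ["metal", "phy_imp_metal_dirt", "phy_imp_metal_concrete", "phy_imp_metal_metal"],
    ["wood",  "",                   "phy_imp_wood_concrete",  "phy_imp_wood_metal"],
]


def build_matrix(sheet: List[List[str]]) -> Dict[Tuple[str, str], str]:
    """Flatten the grid into a (source, destination) -> Sound Cue dictionary."""
    destinations = sheet[0][1:]
    matrix: Dict[Tuple[str, str], str] = {}
    for row in sheet[1:]:
        source, cells = row[0], row[1:]
        for dest, cue in zip(destinations, cells):
            if cue:  # skip blank cells
                matrix[(source, dest)] = cue
    return matrix


def lookup(matrix: Dict[Tuple[str, str], str], source: str, dest: str) -> Optional[str]:
    """Return the Sound Cue for this material pair, or None if the cell was blank."""
    return matrix.get((source, dest))
```

Flattening to a dictionary keeps the runtime lookup a single hash access, while designers keep editing the familiar grid.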

We took this methodology a step further by enabling additional layering of Sound Cues for the Source and Destination objects. This allowed us to specify not only a Sound Cue for the specific interaction between materials, but also a default sound for the inherent object or material type. In this way, a single collision between a metal barrel and the dirt of a forest floor could trigger the following impacts:

1. Metal Generic (Source Layer)
2. Dirt Generic (Destination Layer)
3. Metal Barrel on Dirt Explicit (Source + Destination Layer)
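The layering can be sketched in Python as three independent lookups whose results are collected together. The table contents are hypothetical, and the explicit entry is keyed by material rather than by the barrel object to keep the example small.

```python
from typing import Dict, List, Tuple

# Hypothetical layer tables for illustration only.
SOURCE_LAYER: Dict[str, str] = {"metal": "phy_imp_metal_generic", "wood": "phy_imp_wood_generic"}
DEST_LAYER: Dict[str, str] = {"dirt": "phy_imp_dirt_generic", "concrete": "phy_imp_concrete_generic"}
EXPLICIT_LAYER: Dict[Tuple[str, str], str] = {("metal", "dirt"): "phy_imp_metal_dirt"}


def resolve_layers(source: str, dest: str) -> List[str]:
    """Collect every Sound Cue one impact should trigger, in layer order."""
    cues = []
    if source in SOURCE_LAYER:
        cues.append(SOURCE_LAYER[source])            # 1. generic sound of the moving object
    if dest in DEST_LAYER:
        cues.append(DEST_LAYER[dest])                # 2. generic sound of the surface hit
    if (source, dest) in EXPLICIT_LAYER:
        cues.append(EXPLICIT_LAYER[(source, dest)])  # 3. explicit source-on-destination sound
    return cues
```

Because the lookups are independent, a pair without an explicit entry still gets its two generic layers, so coverage degrades gracefully instead of going silent.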

Let’s take a step back and look at how each of these is handled within the lookup table.

MATERIAL 1 & MATERIAL 2

“Material 1” (Column A) defines the material type of the actor (for example, a metal object would be tagged with the “metal” material). The material name is defined at the top of each material section, and the size of each material section can be adjusted using the modifier in the top-left cell (A1). “Material 2” (Row 1) defines any other material types used within the game environment.

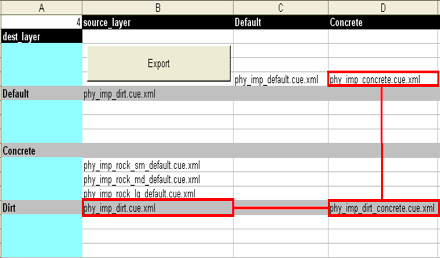

SOURCE LAYER

The “Source_Layer” (Column B) defines a set of sound content that plays every time an object whose material is defined as “Material 1” impacts a surface of any “Material 2” in the game. The “Source_Layer” serves two functions: if there is an entry in the first row of a material type (ex. phy_imp_dirt), then all levels of impact register as the same “size” and “weight”; if that first entry is left blank, you can instead slot three sounds that react to the size and weight of an impact as specified in the Threshold tab (sm/md/lg).
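A minimal Python sketch of that two-way rule, assuming each material section is a list whose first element is the first row and whose next three elements are the sm/md/lg slots; that row layout is an assumption. The Dest_Layer follows the same rule, so the same function could serve both layers.

```python
from typing import List, Optional

SIZE_SLOTS = ("sm", "md", "lg")


def layer_cue(section: List[str], size_class: str) -> Optional[str]:
    """Pick a cue from one material section of a layer.

    Assumed layout: section[0] is the first row; section[1:4] are the sm/md/lg slots.
    """
    if section[0]:
        return section[0]  # a single entry covers every size and weight
    slot = section[1 + SIZE_SLOTS.index(size_class)]
    return slot or None    # blank slot: nothing to play
```

For example, a dirt section with only `phy_imp_dirt` in its first row returns that cue for any size, while a metal section with a blank first row returns the slot matching the impact’s size class.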

DESTINATION LAYER

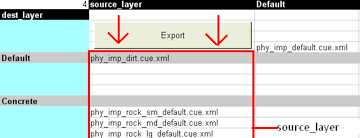

The “Dest_Layer” (Row 2) defines a set of sound content that plays every time an object whose material is defined in “Material 2” (Row 1) is impacted by an actor of any “Material 1” (Column A) in the game. The “Dest_Layer” serves the same two functions: if there is an entry in the first row of the “Dest_Layer”, then all levels of impact register as the same “size” and “weight”; if that first entry is left blank, you can instead slot three sounds that react to the size and weight of an impact as specified in the Threshold tab (sm/md/lg).

SOURCE + DESTINATION LAYER

The Source_Layer + Dest_Layer combination provides a look-up table where a sound is played specifically for an impact between a “Material 1” and a “Material 2”. In the following example, when an actor with a material of dirt impacts a concrete surface, the phy_imp_dirt_concrete content will play.

COLLISIONS COMBINED

In this example we play all three sounds in combination (source layer, destination layer, and explicit interaction) when an actor with a material of dirt impacts a concrete surface.

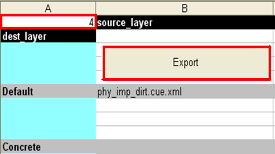

MODIFIER AND EXPORT

The modifier defines the number of rows between each material, preparing the values to be exported as game-ready data. The export button converts the spreadsheet into an efficient XML file that the game engine uses at runtime.
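Here is a minimal Python sketch of the modifier-driven export, using the standard library’s `xml.etree.ElementTree`. The input is assumed to be `[material name, Source_Layer cue]` rows, with `modifier` rows per material; the XML element and attribute names are invented, since the article does not describe the real schema, and only the Source_Layer column is exported for brevity.

```python
import xml.etree.ElementTree as ET
from typing import List

SIZE_SLOTS = ("sm", "md", "lg")


def export_xml(rows: List[List[str]], modifier: int = 4) -> str:
    """Export [material name, Source_Layer cue] rows, grouped `modifier` rows per material."""
    root = ET.Element("PhysicsSoundTable")
    for start in range(0, len(rows), modifier):
        section = rows[start:start + modifier]
        # Pad a short final section so slot indexing stays valid.
        entries = [row[1] for row in section] + [""] * (modifier - len(section))
        material = ET.SubElement(root, "Material", name=section[0][0])
        layer = ET.SubElement(material, "SourceLayer")
        if entries[0]:
            layer.set("single", entries[0])  # one cue for every impact size
        else:
            for label, cue in zip(SIZE_SLOTS, entries[1:4]):
                if cue:
                    layer.set(label, cue)    # size-specific slots
    return ET.tostring(root, encoding="unicode")
```

Grouping by a fixed row count is what lets the exporter find each material’s name and slots without any extra markup in the sheet.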

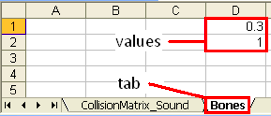

THRESHOLD

The Threshold tab defines the “size” and “weight” values used to transition between the three slots (sm, md, lg) defined for an object.
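A minimal Python sketch of a threshold check that maps an impact’s size and weight to a slot. The boundary values are hypothetical, and so is the rule for combining the two measures (here, whichever ranks higher wins); the article does not say how size and weight were combined.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    md_size: float    # hypothetical size at which an impact becomes "md"
    lg_size: float    # hypothetical size at which an impact becomes "lg"
    md_weight: float  # hypothetical weight at which an impact becomes "md"
    lg_weight: float  # hypothetical weight at which an impact becomes "lg"


def rank(value: float, md: float, lg: float) -> int:
    """0 for sm, 1 for md, 2 for lg."""
    return 2 if value >= lg else 1 if value >= md else 0


def impact_class(t: Threshold, size: float, weight: float) -> str:
    # Assumption: whichever of size or weight ranks higher decides the slot.
    r = max(rank(size, t.md_size, t.lg_size), rank(weight, t.md_weight, t.lg_weight))
    return ("sm", "md", "lg")[r]
```

With boundaries of 0.5/1.5 for size and 10/50 for weight, a small but heavy object lands in the md slot, and anything past the large size boundary lands in lg regardless of weight.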

BODYFALL

We were able to extend our matrix system to incorporate player and non-player character (NPC) bodyfall collisions, which were handled using a combination of Havok Physics and NaturalMotion’s Euphoria.

INTO THE FUTURE

While the GAMMA research group at the University of North Carolina at Chapel Hill has done some laboratory work on Synthesizing Contact Sounds Between Textured Objects, this approach has yet to cross over to games at runtime. In place of true synthesis, the industry is currently invested in sample playback, which requires a multitude of discrete sound files that each represent a given visual. Whereas the game industry was once immersed in the hardcore synthesis detailed at length in Karen Collins’s excellent “Game Sound”, the shift to sample playback has allowed the synthetic muscle of game audio to atrophy. On the horizon is a recombination of the power and flexibility of synthesis and procedural audio techniques with the fidelity of linear sound content. Beginning in 2008 with the release of SoundSeed Impact and their SoundSeed Air suite of tools, Audiokinetic is leading the charge in audio middleware toward a return to synthesis, aiming to add creative options that leverage increased CPU power and reduce the dependency on predetermined sound content stored in RAM.

With everyone in game audio engaged in a battle for the resources needed to achieve a dramatically higher level of quality in the current generation, we need all of the creative tools and tricks at our disposal. I’m a fan of anything that expands the growing possibilities of interactive audio in a way that puts control in the hands of people who are actively pushing the boundaries of what is possible. Where it goes from here is up to the people making choices about how we move forward as an industry and where our focus remains.

Until next time!

Art © Aaron Armstrong
