The audio director’s role in games is very similar to the role of the ‘Sound Designer’ in film as defined by Walter Murch, whereas the title of ‘sound designer’ in games has a very different implication. (Although, to be honest, I still think a wider audience perceives a film ‘sound designer’ as someone who creates cool sound effects, rather than someone who directs the sound experience with as much responsibility as the art director or production designer.) The ‘audio director’ is responsible for the entire soundtrack: dialogue, ambience, sound effects and music. The role also involves working with the audio programmers on the technical requirements and direction of the audio, and with the wider design and art teams to augment and lead feature design. It requires expertise in every area of the soundtrack and, of course, an understanding of what will and won’t work and how all the elements will balance together in the final mix. The role changes dramatically over the course of production, depending on what each stage requires: writing design documents and preparing preview material, testing implementation with placeholder content, directing and liaising with composers or voice directors, doing production Foley, editing dialogue, running batch processing on huge amounts of data, and tweaking and integrating features directly in the audio and game engines. Scheduling and project management skills also figure heavily, as organizing quite large teams of audio staff (internal and external) can be a challenging task on a large console project.

I’d actually like to say a little about the role of sound designer in games and how it has changed. I think the audio director’s role has perhaps stayed the same over the last few years, whereas the act of designing sound for a game has gone through significant changes with the arrival of new technologies. Over the past ten years or so, the title of ‘sound designer’, and I guess the role, has changed significantly to encompass the implementation stage as a crucial part of the sound design process itself. By that I mean that creating the sound effects, and then creating and setting up the rules and behaviors under which those sounds will be played back in the game, are two parts of the same ‘sound design’ process. The days of creating sound effects that sound great in an ‘offline’ context and are then played back in the game with little or no further manipulation are very much in the past for game sound designers working on console titles. The term currently used for this role tends to be ‘Technical Sound Designer’, and I find it a far more useful description. I believe the term was coined by Gene Semel, and it implies that implementation and the design of playback systems are an integral part of the sound design process. When we bring in a sound designer on contract to work on sound effects for a couple of months, implementation is probably 80% of their role, and we advertise the position as ‘technical sound designer’.

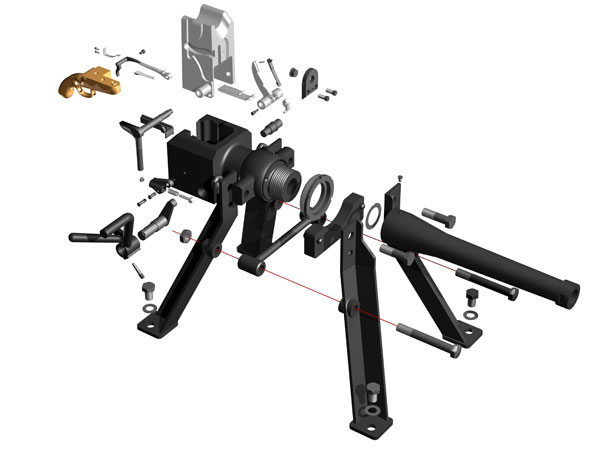

To take a weapon sound as an example: in the previous-generation days you’d probably just mix together several different effects (the bullet casing sound, the Foley, the tail and various other components) in a sequencer, and then render them out into a single mono gunshot sound effect that would be triggered in the game each time a shot was fired. Today, with more emphasis on the dynamic and interactive aspects of sound, and a greater ability to play many sounds simultaneously, that same gunshot may be broken out into an initial shot sound and a separate tail sound, or the in-game reverb may even be used to generate the tails at run-time. In addition, there may be several separately triggered shell casing sounds for different ammo types or for the casing falling on different surfaces, and gun Foley and trigger clicks may be added as separate triggers in the gun firing logic. The firing sounds may also use completely different samples for distant enemy gunfire than for close-up enemy gunfire, blending together over distance curves specified by the sound designer. This ‘exploded’ view of what was previously a very ‘film-sound’ technique (mixing all the required sounds into one for the purposes of implementation) requires a lot of thought and design to go into how such sounds are triggered. The end result in the game reads as a single sound effect, when in reality the game engine may be playing back many separate sounds, each with its own pitch, volume, distance falloff and DSP settings, to make up that single effect. The advantage for the game and its audience is a weapon that feels more dynamic and reactive and, very importantly, less repetitive and fatiguing to the player.
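A minimal Python sketch of the ‘exploded’ gunshot described above, assuming a hypothetical engine in which one fire event fans out into several separately triggered voices. The layer names, the 20 to 60 unit crossfade range, the 350 ms shell-casing delay and the pitch jitter values are illustrative assumptions, not taken from any real game or middleware. No tail layer is included, on the assumption that the in-game reverb generates it at run-time.

```python
import random
from dataclasses import dataclass


@dataclass
class Layer:
    name: str                  # hypothetical asset name
    gain: float                # linear gain for this trigger
    delay_ms: float = 0.0      # offset from the moment the trigger is pulled
    pitch_jitter: float = 0.0  # maximum random pitch offset, in semitones


@dataclass
class Voice:
    name: str
    gain: float
    delay_ms: float
    pitch_semitones: float


def crossfade_weights(distance, fade_start, fade_end):
    """Linear distance curve: returns (close_weight, distant_weight)."""
    if distance <= fade_start:
        t = 0.0
    elif distance >= fade_end:
        t = 1.0
    else:
        t = (distance - fade_start) / (fade_end - fade_start)
    return 1.0 - t, t


def fire_weapon(distance, surface, rng):
    """Fan one 'fire' event out into separately triggered voices."""
    close_w, distant_w = crossfade_weights(distance, 20.0, 60.0)
    layers = [
        Layer("shot_close", close_w),
        Layer("shot_distant", distant_w),
        # No baked-in tail: assume the in-game reverb generates it at run-time.
        # Detail layers follow the close curve, so they fade out with distance.
        Layer("trigger_click", close_w, pitch_jitter=0.5),
        Layer("gun_foley", close_w, pitch_jitter=1.0),
        # Casing sound chosen by surface, landing a moment after the shot.
        Layer(f"shell_casing_{surface}", close_w, delay_ms=350.0, pitch_jitter=2.0),
    ]
    voices = []
    for layer in layers:
        if layer.gain <= 0.0:
            continue  # fully faded out at this distance, so don't spend a voice on it
        pitch = rng.uniform(-layer.pitch_jitter, layer.pitch_jitter)
        voices.append(Voice(layer.name, layer.gain, layer.delay_ms, pitch))
    return voices
```

For instance, `fire_weapon(35.0, "concrete", random.Random(7))` yields five voices, with the close and distant shots both at partial gain, while a shot at 100 units resolves to the distant sample alone.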

The image above is an example of an exploded view of a gun. In interactive design it helps to think about sound in this way, while always keeping in mind the effect of the final ‘mixed’ version of the sound. Interactive music, ambience and practically every other component of game audio can be thought of in this way too: as an ‘exploded’ view of the many individual sounds and tracks that make up a finished sound. The ‘mix down’, of course, happens in real time in the game’s audio engine, rather than as the print master mix down that occurs when preparing a final film soundtrack.
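To make the idea of a run-time ‘mix down’ more concrete, here is a deliberately simplified Python sketch, assuming each triggered layer is just a list of float samples with a start offset and a gain. Real engines mix in fixed-size blocks with resampling, panning and DSP, none of which is modelled here, and the hard clip at the end is only a stand-in for whatever limiting a given engine actually applies.

```python
def mix_layers(layers, length):
    """Sum layers of (samples, start_index, gain) into one mono output buffer.

    Each layer is placed at its own start offset and scaled by its own gain,
    which is the run-time counterpart of the tracks in an offline mix.
    """
    out = [0.0] * length
    for samples, start, gain in layers:
        for i, sample in enumerate(samples):
            j = start + i
            if 0 <= j < length:  # drop anything that falls outside the buffer
                out[j] += sample * gain
    # Hard clip to the [-1, 1] range as a stand-in for a real limiter.
    return [max(-1.0, min(1.0, x)) for x in out]
```

Because the layers stay separate until this point, any one of them can be retuned or swapped without re-rendering the others.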

So, more and more in games, implementation is becoming part of the sound design process. Run-time is the point at which the various sounds come together. This of course has implications for being able to tweak and tune content at run-time, and audio tools that allow this, as well as the replacement of sound effects while the game is still running, are becoming increasingly important to the process. Implementation is where the number of variants for each sound is specified, and where the pitch and volume variance of the files, the minimum and maximum distances that govern how far away the sound will be audible, and how much of the sound is sent to any environmental reverb in the game are all determined. It is increasingly difficult to create sound effects for a game like this, particularly from a laundry list of effects like the ones I used to work through in the Vanishing Point days, without knowing how those sounds will be implemented. The implementation changes the requirements for the sounds, rather than the other way around.
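The implementation parameters listed in this paragraph map naturally onto a per-sound definition. The Python sketch below is one hypothetical way to model them, not the schema of any particular engine or middleware: it avoids immediately repeating a variant, applies random pitch and volume variance, uses a simple linear falloff between the minimum and maximum distances (an assumption; real tools usually offer other curve shapes), and derives the reverb send from the resulting gain.

```python
import random
from dataclasses import dataclass


@dataclass
class SoundDef:
    """Implementation-time parameters for one sound (hypothetical schema)."""
    variants: list                # asset names for interchangeable takes
    pitch_range: float = 0.0      # +/- semitones of random pitch variance
    volume_range_db: float = 0.0  # +/- dB of random volume variance
    min_distance: float = 1.0     # full volume inside this radius
    max_distance: float = 50.0    # inaudible beyond this radius
    reverb_send: float = 0.0      # fraction of the signal sent to environmental reverb
    last_index: int = -1          # last variant played, to avoid immediate repeats


def attenuation(distance, min_distance, max_distance):
    """Linear falloff between the minimum and maximum distances."""
    if distance <= min_distance:
        return 1.0
    if distance >= max_distance:
        return 0.0
    return 1.0 - (distance - min_distance) / (max_distance - min_distance)


def resolve_trigger(sound, distance, rng):
    """Turn one trigger of `sound` into concrete playback parameters."""
    # Pick any variant except the one just played, if there is a choice.
    candidates = [i for i in range(len(sound.variants)) if i != sound.last_index]
    index = rng.choice(candidates or [0])
    sound.last_index = index

    volume_db = rng.uniform(-sound.volume_range_db, sound.volume_range_db)
    gain = attenuation(distance, sound.min_distance, sound.max_distance) * 10 ** (volume_db / 20)
    return {
        "variant": sound.variants[index],
        "pitch_semitones": rng.uniform(-sound.pitch_range, sound.pitch_range),
        "gain": gain,
        "reverb_send": gain * sound.reverb_send,
    }
```

Keeping these values as data rather than baking them into the audio files is one reason run-time tweaking and hot-swapping become practical: changing `max_distance` or `pitch_range` requires no re-render of the assets.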

Bringing this back to the role of the audio director, I think an intimate knowledge of these implementation processes is essential to making high-level decisions about how to improve the sound in any game. Being able to suggest time- and memory-saving ways of implementing sounds, music or dialogue that have a similar feel but use significantly fewer resources is a skill often called upon in the closing stages of game development.

Written by Rob Bridgett for Designing Sound.