In part one of a two-part series on physics sounds in games, we’ll look at some of the fundamental considerations when designing a system to play back different types of physics sounds. With the help of Kate Nelson from Volition, we’ll dig deeper into the way Red Faction: Guerrilla handled the needs of its GeoMod 2.0 destruction system and peek behind the curtain of the development process.

SYMPHONY OF DESTRUCTION

Physics, the simple pleasure of “matter and its motion through spacetime”.

In games we’ve reached the point where the granularity of our physics simulations is inching closer and closer to a virtual model of reality. As we move away from key-frame animated models of objects breaking and the content swaps of yesteryear, towards full-scale visual destruction throughout our virtual worlds, we continue to increase the dynamic ability of objects to break, bend, and collide in ways that match our experience of the physical world.

“It is just inherently fun to break things, and the bigger the thing is the more fun it is to break. It can be a stress relief or just give a feeling of power and control. We worked extremely hard to create a virtual sand box for the player to create and destroy as they see fit, we just hope it gives them the same pure joy they had as a small child kicking over a tower of blocks.” - Eric Arnold, Senior Developer at Volition (CBS News)

Because there is joy in seeing how objects react when force is applied, the realism of these simulations has growing potential to deliver great satisfaction in games. Piggybacking on the work of the people doing the hard thinking about the visual response and “feel” of this technology, audio can use information from these systems to provide a similarly pleasing accompaniment to the visual display of physical interaction. Call it orchestrating the symphony of destruction, if you will: there’s nothing finer than the sound emanating from the wreckage of a virtual building.

Hooking into these systems is no small task, in part because of the tremendous amount of data being constantly output: object-to-object collisions, velocity, mass, and material type are all calculated at runtime at the level of detail needed to display convincing realism on screen. Sorting and sifting through this data becomes one of the main focuses early in production, in order to understand how sound can be designed and played back in reaction to these variables.

CONSTRUCTING CONTENT

When it comes to creating these sounds – in which everything from the smallest impact to the largest fracture must be represented with enough variation to avoid audible repetition within a short amount of time – the asset count for any given material type can easily spiral out of control.

Some considerations when defining the assets include scaling based on: number of collisions, object size, object weight (mass), the speed at which an object may be traveling (velocity), and material type. It’s with these parameters that we can begin to build an abstract audio system in order to switch and transition between the different sampled content sets and gain the ability to apply parametric changes to the content based on information coming from the simulation. These changes could include: applying a volume or pitch reduction based on the declining value for velocity, or changing between samples based on the number of collisions at a given time.
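As a rough illustration of the parametric changes described above, the sketch below maps an impact velocity to a volume and pitch offset and picks a sample set from the number of simultaneous collisions. Every name, range, and threshold here (`MAX_VELOCITY`, the -24 dB floor, the collision cutoffs, the sample set names) is a hypothetical value chosen for illustration, not a figure from any shipped game.

```python
from dataclasses import dataclass

# Hypothetical tuning values; a real project would expose these to sound designers.
MAX_VELOCITY = 20.0      # speed (m/s) at which an impact plays at full volume
MIN_VOLUME_DB = -24.0    # attenuation applied to the slowest impacts
MAX_PITCH_DROP = -4.0    # semitones of pitch reduction at zero velocity


@dataclass
class PlaybackParams:
    sample_set: str
    volume_db: float
    pitch_semitones: float


def playback_params(velocity: float, collision_count: int) -> PlaybackParams:
    """Map simulation data to playback parameters (illustrative only)."""
    # Normalize velocity into 0..1, clamping values outside the expected range.
    t = max(0.0, min(velocity / MAX_VELOCITY, 1.0))
    # Slower impacts are quieter and slightly lower in pitch.
    volume_db = MIN_VOLUME_DB * (1.0 - t)
    pitch = MAX_PITCH_DROP * (1.0 - t)
    # Many simultaneous collisions switch to a denser sample set
    # instead of triggering a one-shot for every contact.
    if collision_count >= 8:
        sample_set = "debris_heavy"
    elif collision_count >= 3:
        sample_set = "debris_light"
    else:
        sample_set = "single_impact"
    return PlaybackParams(sample_set, volume_db, pitch)
```

A linear mapping is used here only for brevity; in practice a designer-authored curve per material would likely sound better.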

“As all of this is going on we also play audio and video cues to let the player know which areas are getting close to breaking. Beyond making the world more believable they serve as a warning system that the structure is unstable and could collapse on the player’s head if they aren’t careful and hang around too long. This small addition took the system from a neat tech demo to pulling the player into the game world and generating very real chills as they flee from a creaking, groaning building while tendrils of dust and debris rain down around them.” - Eric Arnold (Eurogamer)

RED FACTION: GUERRILLA – CASE STUDY

In an exposé on the physics of Red Faction: Guerrilla, Senior Sound Designer Kate Nelson lays out the fundamental systems design and the decisions that went into orchestrating the sounds of destruction:

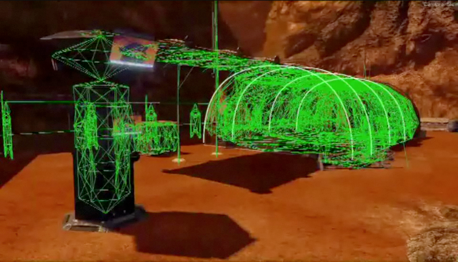

“‘Players love to blow stuff up’ was a popular phrase echoing in Volition’s halls throughout Red Faction: Guerrilla’s development. GeoMod 2.0 technology made real-time destruction an exciting focus of the game design team. For that reason, one of the primary goals for the audio team was to ensure that when players ‘blew stuff up’ they experienced satisfying and immersive audio feedback. Overall, designing the destruction audio system was a rewarding challenge.”

THE ROAD TO RUINATION

Everything to do with audio for the destruction system is piggybacked on top of the underlying physics simulations being run by engineering. Because there are so many stakeholders involved in bringing this to life on screen and through the speakers, it’s usually an exercise in collaboration of the highest degree to find common ground between disciplines and pave the way for elegant design.

“Once art/programming/design had a good prototype of what behaviors were expected, our audio team spent a good amount of time picking the destruction system apart and narrowing it down to the basic core components that affected audio:

— Every building and object in the game was made up of different materials, and those materials each referenced a core “destruction” material

- All things artists made out of metal, regardless of texture, could be thought of as “steel”

- All things artists made that were rocks and concrete could be combined into “concrete”

— Each material was engineered by artists and programmers to break in a specific way:

- Concrete would break into smaller pieces of concrete which would range in size but had consistent shape

- Steel would break into smaller pieces of steel which would range in size and vary in shape (sheets, poles, and solid chunks)

— Each material and shape combination would react differently in the environment when tossed around:

- Concrete blocks impacted the ground, rolled down hills, slid across flat surfaces, etc.

- Steel sheets impacted the ground, slid across flat surfaces, but would not roll, etc.

Leveraging the core commonalities to appropriately simplify things from a sound perspective led to a greater understanding of the content needs and allowed a greater focus and level of detail on a few types of materials. Every game has to temper the fidelity of its systems – be it ambient, footsteps, or physics – against the availability of CPU resources and RAM, so choosing where to allocate resources becomes a constantly shifting management game that relies on regular gardening to make sure every sound type gets what it needs to survive and be heard.

“From these observations and with keeping in mind that we had to keep our asset count down, we were able to extract a basic idea of the kind of audio assets we would need to create and trigger:

[Material type] + [shape] + [size] + [destruction event]

Examples:

- Steel + Sheet + Large + Impact

- Concrete + Solid + Small + Roll
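One way to picture this four-part scheme is as a lookup key built from enumerated values. The sketch below is a hypothetical illustration, not Volition’s implementation: the enum members, the underscore-joined naming format, and the `is_valid` rule are all assumptions, and it simplifies by allowing every material to take every shape. It also shows how quickly the asset count grows even with only two materials.

```python
from enum import Enum
from itertools import product


class Material(Enum):
    STEEL = "steel"
    CONCRETE = "concrete"


class Shape(Enum):
    SHEET = "sheet"
    POLE = "pole"
    SOLID = "solid"


class Size(Enum):
    SMALL = "small"
    MEDIUM = "medium"
    LARGE = "large"


class Event(Enum):
    IMPACT = "impact"
    ROLL = "roll"
    SLIDE = "slide"


def sound_event_name(material, shape, size, event):
    """Join the four factors into one key, e.g. 'steel_sheet_large_impact'."""
    return "_".join(part.value for part in (material, shape, size, event))


def is_valid(shape, event):
    # Assumed rule: flat sheets slide but never roll, so no asset is needed.
    return not (shape is Shape.SHEET and event is Event.ROLL)


def all_event_names():
    """List every key that would need authored content under these assumptions."""
    return [
        sound_event_name(m, sh, sz, ev)
        for m, sh, sz, ev in product(Material, Shape, Size, Event)
        if is_valid(sh, ev)
    ]
```

Enumerating the combinations up front gives a concrete asset list to budget against before any content is recorded.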

“Once we identified the kind of sounds we wanted to produce with the destruction engine, we found the best way to be able to communicate our vision was by capturing footage of the game and adding sound design that illustrated the kind of support we wanted. This video was key in selling our concept to the project producer and explaining to our programmers what we needed to hear and why – nothing says “this is why it’s fun” better than witnessing the moment itself.”

FUNDAMENTAL FACTORS

Working closely with Eric Arnold and senior audio programmer Steve DeFrisco, the audio team (including Kate Nelson, Jake Kaufman, Raison Varner, and Dan Wentz) drove towards a system that could detect the four destruction factors they had identified for content.

— Material type (destruction sound material) was added to objects, environments and materials by artists or members of the sound team.

— Shape was determined after destruction – in real-time – by code that measured each material piece after destruction.

— Size was determined at the moment the destruction event triggered, based on the mass and velocity of the object:

- An X kg piece of concrete traveling at Y velocity impacted with Z energy value

- Audio designers specified which Z energy value triggered a large, medium, or small sound event for each destruction material.

— Destruction events were determined in real time by the action of the material piece:

- Upon colliding with something, an impact would trigger

- If the piece continued to move in a horizontal fashion and was turning on its axis, a roll would trigger
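The size and event rules in the list above can be sketched roughly as follows. The source does not say how the energy value was computed, so the kinetic energy formula used here is an assumption, as are the threshold numbers, the minimum speeds, and all function names.

```python
from typing import Optional

# Hypothetical per-material thresholds in joules: (medium_min, large_min).
# In the system described, audio designers would tune values like these.
SIZE_THRESHOLDS = {
    "concrete": (500.0, 5000.0),
    "steel": (300.0, 3000.0),
}

# Hypothetical minimum speeds below which a piece is treated as at rest.
MIN_MOVE_SPEED = 0.5   # m/s of horizontal travel
MIN_SPIN_SPEED = 1.0   # rad/s of rotation


def impact_energy(mass_kg: float, speed_ms: float) -> float:
    """Assumed energy model: classical kinetic energy, 0.5 * m * v^2."""
    return 0.5 * mass_kg * speed_ms ** 2


def size_for_energy(material: str, energy: float) -> str:
    """Pick the small, medium, or large sound set for this material."""
    medium_min, large_min = SIZE_THRESHOLDS[material]
    if energy >= large_min:
        return "large"
    if energy >= medium_min:
        return "medium"
    return "small"


def event_for_motion(just_collided: bool,
                     horizontal_speed: float,
                     angular_speed: float) -> Optional[str]:
    """Choose a destruction event from the piece's current motion."""
    if just_collided:
        return "impact"
    # Still travelling sideways while turning on its axis: treat as a roll.
    if horizontal_speed > MIN_MOVE_SPEED and angular_speed > MIN_SPIN_SPEED:
        return "roll"
    return None  # nothing worth triggering this frame
```

For example, under these assumed numbers a 10 kg concrete chunk hitting the ground at 10 m/s carries 500 J and would trigger the medium concrete impact set.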

The combination of systems and content design forms a mighty Voltron of destructive power and sound mayhem which can be heard resonating through every destructible element in Red Faction: Guerrilla. Once everything was up and running with sounds being triggered appropriately, the complicated task of applying voice limiting and additional sound finesse helped to rein in the multitude of sounds escaping from every impact.

Examples of other key features:

- Volume attenuation was applied based on the velocity of destruction objects.

- Playback of destruction events was controlled in order to not overwhelm the player.

- An additional material layer could be specified if needed to differentiate between surfaces that were struck.

Additional thoughts:

- Adjustments to Mars gravity during development made tweaking size energy values interesting!

- It was necessary to strike a balance between having enough destruction assets to provide necessary playback variety, and having so many assets that we blew our memory allotment.

- It took time to determine how many destruction sounds should be allowed to play at once to provide the necessary destruction feedback for the player without swamping the overall soundscape.
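Capping the number of simultaneous destruction sounds is commonly handled with some form of voice limiting. The sketch below shows one simple policy, in which a new sound may steal the voice of the weakest active sound once the cap is reached. The source does not describe the policy actually used in the game, so the class, its methods, and the steal-the-weakest rule are all assumptions for illustration.

```python
from typing import List, Tuple


class VoiceLimiter:
    """Caps how many destruction sounds may play at once (illustrative only)."""

    def __init__(self, max_voices: int) -> None:
        self.max_voices = max_voices
        # Each active voice is stored as (energy, sound_name).
        self.active: List[Tuple[float, str]] = []

    def request(self, sound_name: str, energy: float) -> bool:
        """Return True if the sound should play, False if it is culled."""
        if len(self.active) < self.max_voices:
            self.active.append((energy, sound_name))
            return True
        weakest = min(self.active)
        if energy > weakest[0]:
            # Steal the voice from the weakest sound currently playing.
            self.active.remove(weakest)
            self.active.append((energy, sound_name))
            return True
        return False

    def release(self, sound_name: str) -> None:
        """Free the voice once a sound has finished playing."""
        self.active = [v for v in self.active if v[1] != sound_name]
```

The `max_voices` value is the number that would need tuning against the rest of the mix: too low and large collapses sound thin, too high and they swamp everything else.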

It can be said that, with the escalating demands across departments in game development, the single greatest asset on the side of quality continues to be the ability to quickly develop and implement systems that can then be iterated upon as we all work towards simulations that meet and exceed expectations. The development of any complex system depends on this iteration to dial in the important aspects that help to sell the desired effects.

UNTIL NEXT TIME

Tune in for Part Two when we look at the systems behind the physics in Star Wars: The Force Unleashed and look towards the future beyond our current reliance on sample playback technology.

Art © Aaron Armstrong
