When designing audio we are often thinking of time across a large variety of units: samples, milliseconds, frames, minutes, hours and more. This article is inspired by a conversation I had with Andy Farnell about a year ago at a pub in Edinburgh, right before a sound design symposium, where we discussed about time and the role it plays when it comes to designing audio.

Like most other audio designers out there, I started twiddling the knobs and sliders well before I had an understanding of the underlying DSP. It was eye-opening experience to realise that almost every single DSP effect is related to time. So let’s start looking at a few common DSP tools used in everyday sound design and analyse how time and the precedence effect plays a role, starting from hundreds of milliseconds all the way down to a single sample.

Precedence Effect

The precedence effect is a psychoacoustic effect that sheds light on how we localise and perceive sounds. It has helped us understand how binaural audio works, how we localise sounds in space and also understand reverberation and early reflections. From Wikipedia:

The precedence effect or law of the first wavefront is a binaural psychoacoustic effect. When a sound is followed by another sound separated by a sufficiently short time delay (below the listener’s echo threshold), listeners perceive a single fused auditory image; its spatial location is dominated by the location of the first-arriving sound (the first wave front). The lagging sound also affects the perceived location. However, its effect is suppressed by the first-arriving sound.

You might be familiar with this effect if you’ve done any sort of music production or mixing. Quite often a sound is hard panned to one of the two stereo speakers and a delayed copy (10-30ms) of the sound is hard panned to the other speaker. Our ears and brain don’t perceive two distinct sounds, but rather an ambient/wide-stereo sound. It is a cool technique for creating a pseudo-stereo effect from a mono audio source.

The first 30 seconds in the video below shows an example of the precedence effect in action. The delayed signal smears the original signal with phasing artefacts after which it seems to split from the original signal and become a distinct sound of its own.

Echos And Reverb

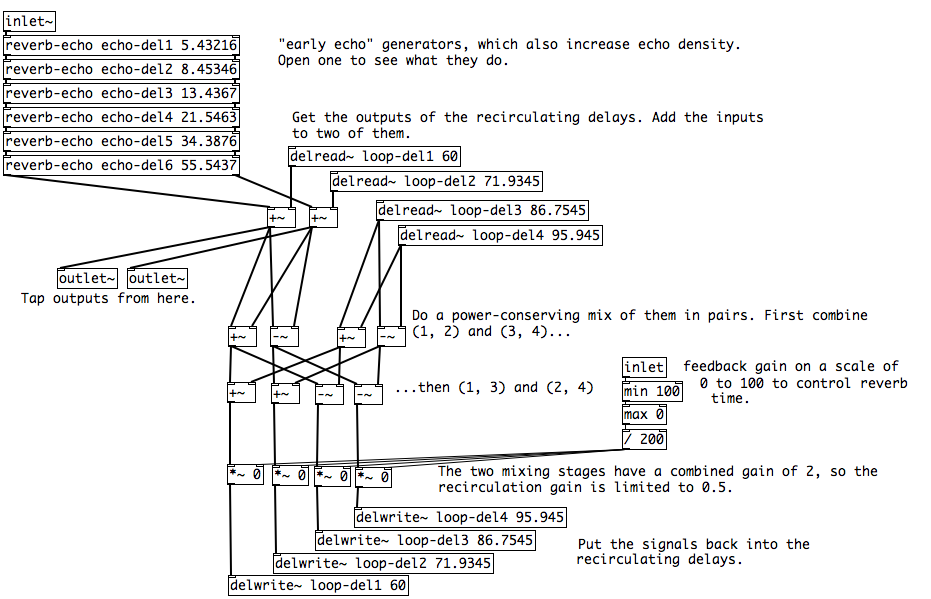

Echos are distinct delays. Reverberation is made up of early reflections which are delayed sounds that arrive first at the listener (right after the direct sound) followed by a tail that consists of many such delays diffused into a dense cluster. Artificial reverbs are quite often approximated using networks of delays that feedback into each other (convolution reverbs behave a differently).

Depending on the size of the space, early reflections would usually make up the first 80 milliseconds or so. The late reverberation, as we know, can last many seconds.

Phaser

Dialling down from hundreds of milliseconds, phasing and chorus effects are only an extension of the precedence effect. When the time delay time is modulated, usually in the tens of milliseconds, we end up with a phaser effect (a chorus and phaser are similar in theory, the major difference being in the choice of delay times, amongst other modulation parameters).

00:30 – 00:50 in the video below shows an example of this.

Panning

We now take amplitude panning for granted, but there was much experimentation into panning techniques in the early days of stereo (the precedence effect was first proposed in the 1940s). With amplitude panning, we can approximate positions of sounds in a stereo or surround stage by changing the amplitude of the sound across the array or matrix of speakers. In reality our ears and brain use a combination of factors to localise sounds: inter-aural time difference, inter-aural level difference, reflections off the body, early reflections, reverberation, amplitude and more.

We could construct an alternate stereo panner using only time differences across the speakers, rather than amplitude differences (Tomlinson Holman’s book on this subject, “5.1 Surround Sound: Up And Running” is worth reading). This is done by delaying the sound coming off one of the speakers between a small range, as little as 0 to 1ms. I’ve always found this effect to be more convincing. This technique hasn’t been used much because it is quite easy to end up with some nasty phasing issues if the listener isn’t in the sweet spot. Although, time-difference is one of the many factors used in binaural synthesis and panning.

00:55 – 1:17 in the video below shows an example of this.

Filtering

We take filtering for granted these days, with filters being just a click away. All DSP filters are made up of delay lines, ranging from a single sample (such as first order IIRs or simple low/high-pass filters) to multiple samples (FIRs). A simple low-pass filter can be created by delaying a feedback signal by one sample and cross-fading between the delayed+feedback signal and the original signal. The cut-off frequency of the filter is nothing but that cross-fade value. Here’s a quick example implemented using gen~ in Max.

The [mix] object is a cross-fader. A value of 0 sent to its third inlet results in the just the signal at the first inlet being output, while a value of 1 results in only the signal at the second inlet being output. A value between 0 and 1 results in a proportional mix between the two signals.

01:19 – 1:44 in the video below shows an example of this.

And, I’ll leave you with this (thanks @lostlab):

The word ‘psychoacoustic’ is used lots lately but I do think we all could live without it. All those phenomenons described in this (and other articles) are ‘acoustic’ – what’s ‘psycho’ about that. I would go so far and say that ‘psychoacoustic’ is something that does not exist. Every acoustic phenomenon is ‘psycho acoustic’.

Of course, great article, and very educational!

While some of the factors described in this article relate exclusively to acoustics, not all of them do. The precedence effect that Varun opens the article with occurs exclusively within our perception. Psychoacoustics may seem like something you hear too frequently around the web and in forums lately, because people use the term in incorrect ways. It is the study of how our brain interprets sounds on a mechanical/fundamental level. Most people tend to think of it as how we interpret sound in terms of meaning or emotion. So, you’re argument that it does not exist is correct in that latter interpretation, but it is very much a legitimate field of study. It’s all down to the correct usage of the term.

Working with acoustics and teaching psychoacoustics I’d say I’m happy that psychoacoustics appears more often in discussions. The field of psychoacoustics deals with how we interpret, perceive, and react to sound and sonic environments and as is stated above it is definitely a legitimate field of study. While the field of acoustics is the overarching field of studying sound and vibration, it focuses on the study of the physics behind sound and sound transmission starting from a vibrating object (for example vibration of vocal chords that produce speech) that is transmitted through a medium (usually air) and interacts with other objects (spatial boundaries such as room walls floor, furniture, or human beings etc) from that we specify sound with factors such as frequency, time duration and sound level. We also characterize sound transmission in space through factors such as reverberation time etc. As reverberation is mentioned – reverberation time is a way of determining how long sound lasts in a room (defined as the time it takes for sound to decrease 60 dB in level from a steady state) while reverberance is connected to how we perceive the resulting sound field.

The field of psychoacoustics focuses on making connections between the sounds physical qualities or characteristics with how we perceive them. One classic example of a psychoacoustic study is to study how changes in perception (given a hypothesis or a narrowed focus of study) changes with a given change in sound characteristics such as pitch shift (frequency variation) or change in sound level.

Psychacoustics definitely deals with meaning making and emotional effects, in fact this is a specific field of study within psychoacoustics called emoacoustics (yes, it is true). Example here:

http://omicsgroup.org/journals/emotional-responses-to-information-and-warning-sounds-2165-7556.1000106.php?aid=7643

Worth mentioning is that psychoacoustics is ONE way of studying sound perception where common methodologies are listening tests with questionnaires. There are other fields as way that employs other methodologies.

Finally – great work Varun!

I guess what I was trying to say is that one can not separate between the thing itself (acoustic) and its perception (now called psychoacoustic). How does that go? So, is ‘Music’ now ‘psycho’ or are you going to separate it into two parts, one just being ‘acoustic’ and the other ‘psycho-acoustic’? Are ‘meaningless’ noises (frequencies that cover or don’t cover human’s hearable range and one happens upon, like wind, waves, clinks and clanks, cars running by etc) acoustic or psycho acoustic phenomena?

The only thing wrong with the term psychoacoustics is that it elides the difference between physiology and the ‘mind’. But it’s certainly the case the sound we perceive is not identical with the totality of acoustic phenomenon going on at any moment. The limited frequency range of human hearing (compared to creatures such as bats, dolphins, dogs, and elephants) assures that in itself. The phenomenon of ‘masking’, discovered in psychoacoustic research, really works, has no basis outside the human mind-body, and has proven to be worth billions in audio compression patents.