Robert Thomas and Joe White from RjDj, known for crafting interactive sound-musical worlds on iOS devices, were kind enough to spend an afternoon sharing their thoughts on interactive soundscapes and music, technical and creative limitations, Pure Data, procedural techniques and their latest app ‘Dimensions’. You most definitely would have heard of their ‘Inception App’, released over a year ago.

DS: Tell us about RjDj

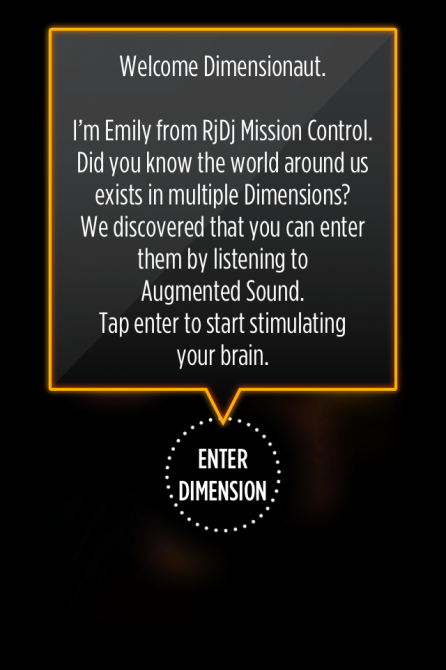

Robert: RjDj was formed in 2008 but was actually initially conceptualised as far back as the 1990s. The first app that was released was very unusual for that time because it was doing things with sound from the microphone and using the accelerometer in ways that were not really supported in the SDK. Since then we have done a wide range of different apps, which are about exploring interactive music or reactive music or augmented music, and that has gone from RjDj to Rj Voyager, through working on projects like the Inception app, to Dimensions, the latest one, which is veering more into the world of games. RjDj was formed by Michael Breidenbruecker, who is one of the cofounders of last.fm, and he is the CEO and driving force behind the company. My role is more in terms of music composition and looking after the sonic side of things, and Joe is also working in that area and is a bit more oriented toward the reactive infrastructure of how we make music happen in relation to events. He also works a lot more on sound design than I do.

DS: Most of what you do is in Pure Data (Pd). Do you begin the composition and design process in a regular DAW and then migrate to Pd?

Robert: We use a conventional DAW of some kind and export bits that get reassembled in real time based on whatever rules are appropriate for that situation. Much of Dimensions was done in Cubase and some of it was done in Logic and then exported as tracks which are sometimes stacked one on top of each other and fade in and out based on different user interaction. There are individual hits that get algorithmically put back together on the fly – drum patterns and things like that, and layers of reactively triggered synthesis.

Joe: In our earlier projects we were trying a lot more to reconstruct all the music inside Pd but we obviously had performance hits and we had to optimise it all. In Dimensions, we created all the assets in the DAW and mixed it as well as we could so that we could concentrate on how it played back in Pd. How we construct the assets in Pd is just as important, as it needs to be simple while achieving the same sort of experience.

Robert: In 2009 we did a project that was extremely ambitious. Almost everything in there was algorithmically put together in the mix. It was all stemmed material or individual tracks that were put back together in Markov states and various trees of possibility and based on the user behaviour and all kinds of things. We found it very difficult to achieve a very good level of quality if we were that ambitious with the structure. Also, a lot of the end listeners couldn’t understand the level of complexity we were producing in terms of the structure and the variants of the music. For them it was just music. Now we concentrate on making one very obvious reactive thing over more linear music.
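To make the "Markov states" idea concrete, here is a minimal Python sketch (not Pd, and not RjDj's actual system) that chooses the next musical section from a table of transition probabilities. The section names and the probabilities are invented purely for illustration.

```python
import random

# Hypothetical transition table: current section -> {next section: probability}.
# Names and numbers are illustrative only, not taken from any RjDj project.
TRANSITIONS = {
    "intro":  {"verse": 0.8, "break": 0.2},
    "verse":  {"verse": 0.3, "chorus": 0.5, "break": 0.2},
    "chorus": {"verse": 0.6, "break": 0.4},
    "break":  {"verse": 0.5, "chorus": 0.5},
}

def next_section(current, rng):
    """Draw the next section using the probabilities in the row for `current`."""
    options = TRANSITIONS[current]
    names = list(options)
    weights = [options[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]

def generate(start, length, seed=None):
    """Return a list of `length` section names beginning with `start`."""
    rng = random.Random(seed)
    sequence = [start]
    while len(sequence) < length:
        sequence.append(next_section(sequence[-1], rng))
    return sequence

print(generate("intro", 8, seed=1))
```

In a reactive piece the table itself could presumably change with user behaviour, which is where the structural complexity Robert describes would start to grow.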

DS: What sort of technical limitations are you up against?

Robert: We have to impose our own limitations because Pd is so open that it is easy to get completely lost in creating some hugely intricate and complex reactive system that no one is ever going to understand, and you can get very, very deep in that and lose sight of the overall picture. It is very dangerous – in my opinion.

Joe: It is too easy to go down that route and just think about making the architecture and not creatively make things.

Robert: The other limitation that we have, which is getting better all the time, is the kind of CPU power and memory limits of the devices. Until Dimensions we have been working at 22 kHz to give us more CPU top end to achieve more ambitious real time effects.

DS: Do you filter out the top end to simulate what it might end up sounding like?

Robert: I try not to rely on creating musical sensations that I know will rely on a lot of high frequencies. It’s still very frustrating when we down-sample. We try to get as much as we can out of the sampling rate that we work at. We have also written some specific externals for Pd which allow us to play back MP3s or compressed files.

Joe: I think the beautiful thing is that it’s just a temporary sort of limitation. As these devices get increasingly powerful we can do more stuff and eventually run at 44.1 kHz.

Robert: The next app is at 44.1 kHz and it will be much more ambitious as well in terms of effects. It always gets bigger with each app.

DS: Along with these technical limitations do you impose creative limitations? How do you know where to stop?

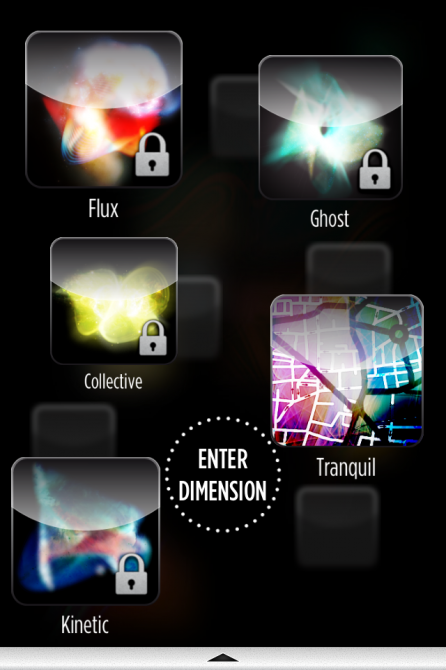

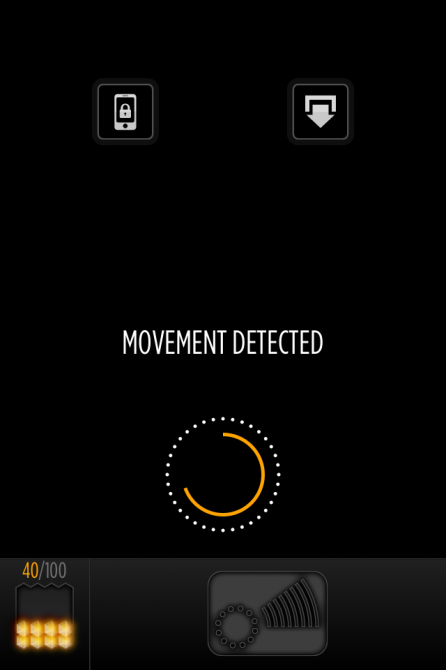

Robert: In Dimensions, there are soundtracks for different parts of the listener’s life. For instance, there is the tranquil dimension and we know through the microphone that it is going to be quiet in that scenario. Each one of these factors is the driving force of how we approach it creatively. For tranquil, the brief for the whole of that dimension was creating something that reacted to that type of audio environment and enhanced a sense of tranquility. Equally, in the kinetic dimension, which is all about movement, we know that the user is moving through accelerometer analysis. It is basically targeting a piece of music to push all of the listener’s emotional buttons to make them feel that they are in a kind of action sequence and reacting in a very fine way to different levels of the accelerometer. That is a very powerful brief as a composer and it means that you know that you can create a very dynamic experience for the listener. In many ways it’s like writing music for games, except we have the additional hard task of doing analysis to determine what the game events are.

Joe: In any sort of interactive medium, it is important that you have a feedback control that is very easy to understand for the user. In a lot of what we do, the majority of the user’s interaction is with sound. We have to make it very clear in what they are hearing that they are affecting a certain part of the track. We have made our own dynamic mixing system to fade assets in and out.
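As a rough idea of what fading stacked assets in and out can involve, the Python sketch below maps a single interaction value to one gain per layer and then moves the gains gradually to avoid clicks. It is an assumption-laden illustration, not RjDj's mixing system: the function names, the `intensity` control and the step size are all hypothetical.

```python
def target_gains(intensity, num_layers):
    """Map an intensity in [0, 1] to one target gain per stacked layer.

    Layer 0 is always audible; each higher layer fades in over its own
    slice of the intensity range. (Hypothetical mapping for illustration.)
    """
    intensity = min(1.0, max(0.0, intensity))
    gains = [1.0]
    for layer in range(1, num_layers):
        position = intensity * num_layers - layer
        gains.append(min(1.0, max(0.0, position)))
    return gains

def smooth(current, target, step):
    """Move each gain toward its target by at most `step` per call."""
    return [c + max(-step, min(step, t - c)) for c, t in zip(current, target)]

# Example: three layers, the listener's activity sits halfway up the range.
gains = [1.0, 0.0, 0.0]
goal = target_gains(0.5, 3)          # [1.0, 0.5, 0.0]
for _ in range(3):
    gains = smooth(gains, goal, 0.1)
print(gains)
```

Calling `smooth` once per audio block (or control tick) turns an abrupt change in the control value into a short ramp, which is one simple way to keep the link between action and sound audible without jumps in level.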

DS: There are a lot of effects on the microphone, like pitched delays and granulation. Were these decisions made beforehand?

Robert: Normally what we are trying to do is to find something that transforms the listener’s perception into feeling like whatever the brief is. In the ghosts dimension for instance, it works with audio analysis to decide when an audio event happens and then records that and generates a reverse reverb in real time. There is also one that records into the buffer and then stretches out that buffer as it plays it back. I don’t think these days we think about CPU limitations very much. For instance, in the second level in Dimensions, there is one called the unstable dimension which has got every type of conventional effects unit in it, all stacked on top of each other and doing all kinds of glitchy sort of effects in real time to the microphone. There is also a new version of the collective dimension which captures a sound into a buffer and then starts scratching back and forth as if it is like a turntablist on reality. That is the more crazy end of what we try and do on the fly. We do use some very simple effects – even just normal reverbs and delays sometimes. What is interesting from an engineering/producer point of view is that most people who have never used a studio or used any sort of music production tools find a delay line an incredibly unusual experience.
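For readers who have never met a delay line, the following Python sketch shows the basic mechanism: a circular buffer holds the recent past, and each output sample mixes the live input with what was written a fixed number of samples earlier. This is a generic textbook feedback delay, not code from Dimensions, and the parameter names are illustrative.

```python
def feedback_delay(samples, delay_samples, feedback=0.5, mix=0.5):
    """Apply a simple feedback delay to a list of float samples."""
    buffer = [0.0] * delay_samples        # circular buffer of past audio
    write_index = 0
    output = []
    for x in samples:
        delayed = buffer[write_index]     # written `delay_samples` steps ago
        # Feed part of the delayed signal back in so echoes repeat and decay.
        buffer[write_index] = x + delayed * feedback
        write_index = (write_index + 1) % delay_samples
        output.append((1.0 - mix) * x + mix * delayed)
    return output

# A single click followed by silence produces decaying echoes.
impulse = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(feedback_delay(impulse, delay_samples=3))
# prints [0.5, 0.0, 0.0, 0.5, 0.0, 0.0, 0.25]
```

With microphone input in place of the test impulse, the same structure plays the listener's surroundings back at them a moment later, which is presumably the experience Robert describes newcomers finding so unusual.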

Joe: We also have the problem that we can never predict what the input from the microphone is going to be. A bus could come by and destroy everything in the tranquil dimension. We have to make sure that we can handle that correctly and it sounds okay.

DS: You obviously use the accelerometer and microphone as sensors, along with the GPS for the map. Anything else happening under the hood?

Robert: We determine a lot of situations that people are in by cross referencing things like the time of day or the GPS combined with weather data of that location. If they are in a sunny environment we put them into the sunny dimension. A lot of the time it is about triangulating between different sensors to work out what’s going on. When they are inside that dimension we will have very specific bits of analysis we do to try and achieve a certain effect.
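The cross-referencing Robert describes could be sketched as a small set of rules over several sensor readings. The Python below is a guess at the general shape only: the dimension names "kinetic", "sunny" and "tranquil" come from the interview, but the `Context` fields, the thresholds, the rule order and the "default" fallback are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Context:
    hour: int          # local hour of day, 0-23
    weather: str       # e.g. "sunny" or "rain", from a hypothetical weather lookup
    mic_level: float   # 0.0 (silent) to 1.0 (loud)
    motion: float      # 0.0 (still) to 1.0 (vigorous movement)

def choose_dimension(ctx):
    """Pick a dimension by combining several readings (illustrative rules)."""
    if ctx.motion > 0.6:
        return "kinetic"                      # strong movement wins outright
    if ctx.weather == "sunny" and 7 <= ctx.hour < 19:
        return "sunny"                        # weather only counts in daytime
    if ctx.mic_level < 0.1:
        return "tranquil"                     # quiet surroundings
    return "default"                          # hypothetical fallback

print(choose_dimension(Context(hour=12, weather="sunny", mic_level=0.5, motion=0.1)))
```

No single reading decides the outcome here; a sunny forecast at midnight, for example, falls through to the later rules, which is the sense in which the sensors are being triangulated.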

DS: Any 100% procedural techniques used in the game?

Joe: In the previous update we changed the artifacts so that they are procedurally generated with subtractive synthesis. It was a lot better, because beforehand we were using one sample and all the artifacts sounded the same. Now we can actually generate a load of different sounds. There’s also a lot of synthesis work in different dimensions.
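Subtractive synthesis starts from a harmonically rich source and filters parts of it away. As a minimal Python sketch of why that yields "a load of different sounds" from one recipe, the function below filters white noise with a one-pole low-pass whose cutoff is chosen per seed. The function name, the cutoff range, the decay envelope and the 22050 Hz sample rate are assumptions, not details of the Dimensions patches.

```python
import math
import random

def artifact_sound(seed, sample_rate=22050, duration=0.25):
    """Generate one short noise burst whose tone depends on `seed`."""
    rng = random.Random(seed)
    cutoff = rng.uniform(300.0, 4000.0)   # Hz, a different colour per seed
    # Standard one-pole low-pass coefficient for the chosen cutoff.
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff / sample_rate)
    total = int(sample_rate * duration)
    filtered = 0.0
    samples = []
    for i in range(total):
        noise = rng.uniform(-1.0, 1.0)            # rich source: white noise
        filtered += alpha * (noise - filtered)    # subtract the high end
        envelope = 1.0 - i / total                # linear fade-out
        samples.append(filtered * envelope)
    return samples

# Each seed gives a repeatable but different-sounding artifact.
first = artifact_sound(seed=1)
second = artifact_sound(seed=2)
print(len(first), first == second)
```

Compared with triggering one stored sample, the cost is a little CPU per sound, and the gain is that every artifact can differ while still belonging to the same family.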

Robert: It is all a big mixture really. The common thing with our approach is deliberately doing that so we can achieve the highest quality. In RjDj for instance, I have done quite a few scenes that are entirely procedural. It is very interesting work to do. But when we are designing very specific experiences like Dimensions, we want the best of all worlds.

DS: Do you reprocess the samples to create variation? Any volume/pitch randomisation or granulation?

Robert: One interesting example of that is the sound of the Nephilim, which changes as it gets nearer to you. That is one sound in a table in Pd which is played back in a granular way. There are quite a lot of things that we do in music where we will have samples and play them back at different pitches and we will affect them or algorithmically create rhythmic structures out of them.
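Granular playback of a single stored sound can be sketched as follows: short windowed slices ("grains") are read from around a chosen position in the table and overlapped to form the output. This Python version is a generic illustration rather than the Nephilim patch; the parameter names are hypothetical, and driving `position` or `spread` from the creature's distance is only one guess at how such a sound might be made to change.

```python
import math
import random

def granulate(table, num_grains, grain_size, position, spread, seed=None):
    """Overlap-add Hann-windowed grains read from near `position` in `table`.

    `position` and `spread` are fractions of the table length (0.0 to 1.0).
    `grain_size` should be even and no longer than the table.
    """
    rng = random.Random(seed)
    hop = grain_size // 2                         # 50% overlap between grains
    window = [0.5 - 0.5 * math.cos(2.0 * math.pi * i / grain_size)
              for i in range(grain_size)]
    max_start = len(table) - grain_size
    output = [0.0] * (hop * (num_grains - 1) + grain_size)
    for grain in range(num_grains):
        # Jitter the read point so successive grains are not identical.
        centre = position + rng.uniform(-spread, spread)
        start = int(min(1.0, max(0.0, centre)) * max_start)
        for i in range(grain_size):
            output[grain * hop + i] += table[start + i] * window[i]
    return output

# Stand-in for a recorded sound: one second of a 110 Hz sine at 22050 Hz.
table = [math.sin(2.0 * math.pi * 110.0 * n / 22050) for n in range(22050)]
cloud = granulate(table, num_grains=40, grain_size=1024, position=0.5,
                  spread=0.1, seed=3)
print(len(cloud))
```

Because the Hann windows overlap by half, their sum stays constant in the middle of the output, so the texture changes with `position` and `spread` without the overall level pumping.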

Joe: In the kinetic dimension there are five different patterns based on the accelerometer levels and it switches between them quite easily and adds in random variations.
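Switching between a handful of patterns according to a sensor level, with some randomness on top, can be sketched in a few lines of Python. The five patterns below are invented placeholders (1 is a hit, 0 is a rest), and the even split of the accelerometer range and the flip-based variation are assumptions rather than the kinetic dimension's actual logic.

```python
import random

# Five hypothetical 8-step drum patterns, from sparse to busy.
PATTERNS = [
    [1, 0, 0, 0, 0, 0, 0, 0],
    [1, 0, 0, 0, 1, 0, 0, 0],
    [1, 0, 1, 0, 1, 0, 1, 0],
    [1, 0, 1, 1, 1, 0, 1, 1],
    [1, 1, 1, 1, 1, 1, 1, 1],
]

def select_pattern(level):
    """Map an accelerometer level in [0, 1] to a pattern index."""
    level = min(1.0, max(0.0, level))
    return min(len(PATTERNS) - 1, int(level * len(PATTERNS)))

def vary(pattern, probability, rng):
    """Flip each step (hit <-> rest) with the given probability."""
    return [1 - step if rng.random() < probability else step
            for step in pattern]

rng = random.Random(7)
bar = vary(PATTERNS[select_pattern(0.5)], probability=0.1, rng=rng)
print(bar)
```

Keeping the variation probability low means each bar is recognisably the chosen pattern, so the listener can still hear that moving more leads to busier drums.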

Robert: There is an improvisational level in the programming of the drums so that they are algorithmically improvising. We also have on top of that a glitching set of effects that are adding repeats and pitching and other effects. I forget what is in there after a while [laughs].

Joe: It almost doesn’t matter how you have done anything, if it sounds good it is good. You can use any technique, although, it is a lot cooler if it is all procedural [laughs].

DS: What’s next?

Robert: We are doing another app – I can’t really talk about it at the moment. It relates a lot more to detecting what is going on in people’s lives and thinking about how content could fit into those situations. I’m not quite sure when we are going to start talking about that publicly, but it is not a game. We are still very interested in the type of direction we have been going with Inception and Dimensions, which is a holistically designed experience in sound. We are also very interested in music and making music fit into people’s lives in interesting and new ways.

Joe: There seem to be a lot of similarities between the stuff we are doing and the game world and how they deal with interactive music. A lot of the paths that they have gone down are very similar to what we mess around with.